What is Raytracing?

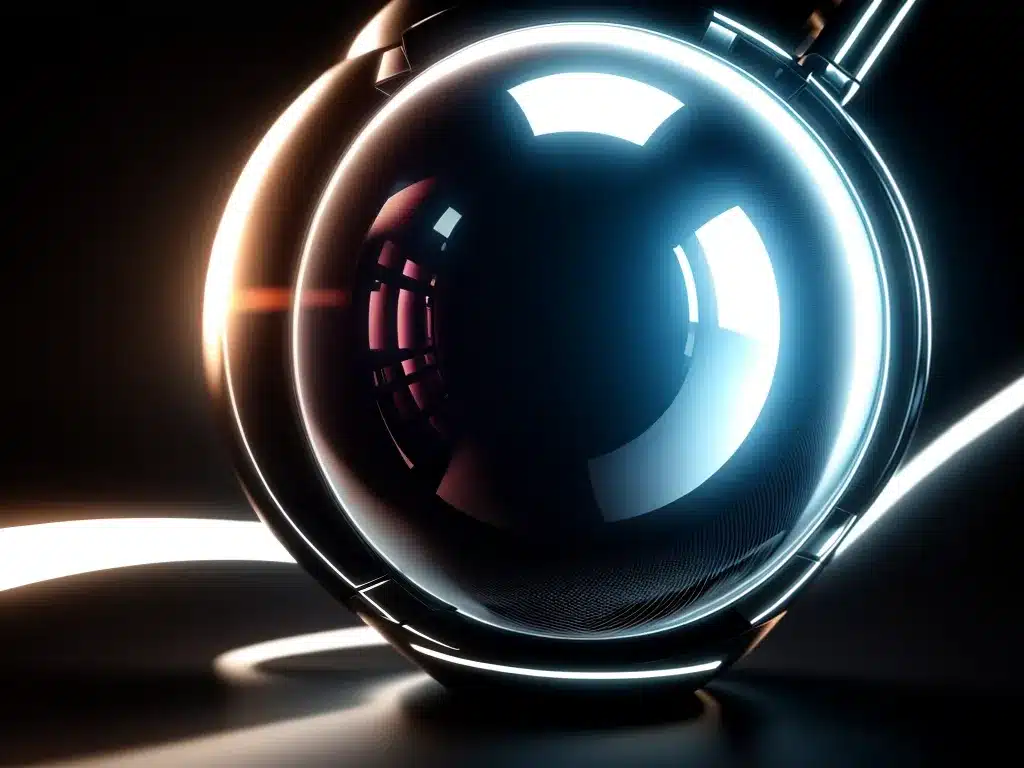

Raytracing is a graphics rendering technique that produces realistic lighting effects in 3D computer generated imagery. It simulates the physical behavior of light rays interacting with objects in a 3D scene.

In raytracing, rays are cast from the camera into the 3D scene. When a ray hits a surface, it can produce different lighting effects:

- Direct illumination – The surface is lit directly by a light source

- Diffuse reflection – The ray scatters in different directions to simulate a matte, non-shiny surface

- Specular reflection – The ray bounces off at an angle to simulate a glossy surface

- Refraction – The ray bends as it passes through transparent objects

- Shadows – Rays are blocked by objects, creating shadows

- Global illumination – Indirect lighting from light bouncing off surfaces fills the scene realistically
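To make the first and fifth items concrete, here is a minimal Python sketch of a single camera ray hitting a sphere, with direct diffuse lighting and a shadow ray. It is an illustration under simplifying assumptions, not how a production renderer is written: the scene contains only spheres, there is one point light, and the helper names (`hit_sphere`, `shade`, `EPSILON`) are made up for this example.

```python
import math

EPSILON = 1e-4  # small offset to avoid a surface shadowing itself


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def hit_sphere(origin, direction, center, radius):
    """Return the nearest distance t > EPSILON where the ray hits the sphere,
    or None on a miss. `direction` is assumed to be unit length."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > EPSILON:
            return t
    return None


def shade(origin, direction, spheres, light_pos):
    """Brightness in [0, 1] seen along one ray: diffuse lighting plus shadows."""
    nearest = None
    for center, radius in spheres:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, center)
    if nearest is None:
        return 0.0  # the ray left the scene without hitting anything

    t, center = nearest
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, center))
    to_light = sub(light_pos, point)
    light_dist = math.sqrt(dot(to_light, to_light))
    light_dir = scale(to_light, 1.0 / light_dist)

    # Shadow ray: if anything sits between the point and the light, it is dark.
    shadow_origin = add(point, scale(normal, EPSILON))
    for c, r in spheres:
        s = hit_sphere(shadow_origin, light_dir, c, r)
        if s is not None and s < light_dist:
            return 0.0

    # Lambert's cosine law for a matte surface lit directly by the light.
    return max(0.0, dot(normal, light_dir))
```

Calling `shade` once per pixel, with a direction that passes through that pixel, is enough to render a simple image. Reflections and global illumination come from calling it recursively with new rays that start at the hit point.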

By accurately simulating light propagation, raytracing can render photorealistic scenes with effects like reflections, refractions, and shadows. Earlier real-time rendering techniques could only approximate these effects.
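Reflections and refractions come down to two small vector formulas. The sketch below shows one common way to write them in Python. The function names and the convention that the normal points against the incoming ray are choices made for this example, and `eta` stands for the ratio of refractive indices (the medium the ray leaves divided by the medium it enters).

```python
import math


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def reflect(d, n):
    """Mirror the unit direction d about the unit normal n: r = d - 2(d.n)n."""
    k = 2.0 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))


def refract(d, n, eta):
    """Bend the unit direction d through a surface using Snell's law.

    n is the unit normal on the side the ray arrives from, and eta = n1 / n2.
    Returns None when total internal reflection occurs (no refracted ray).
    """
    cos_i = -dot(d, n)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * di + (eta * cos_i - cos_t) * ni for di, ni in zip(d, n))
```

A ray entering glass from air would use `eta = 1.0 / 1.5`, assuming a refractive index of about 1.5 for the glass. A raytracer follows the returned direction as a new ray and blends whatever that ray sees into the pixel's color.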

Brief History of Raytracing

The foundations of raytracing were laid in 1968 by Arthur Appel, who described the basic ray casting algorithm. In 1979, Turner Whitted extended it into recursive raytracing, adding reflections, refraction, and shadows.

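An early notable use of raytracing was in the 1982 movie Tron, which included about 15 minutes of computer-generated scenes. In 1986, Pixar’s short film Luxo Jr. showed how convincing computer-generated lighting and shadows could be, although it was rendered with Pixar’s Reyes-based renderer rather than a raytracer.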

However, rendering times were extremely long. Progressive refinement helped make raytracing practical: a coarse image appears quickly and is refined over successive passes. By the 2000s, raytracing was widely adopted for film CGI and 3D rendering.

For a long time, the huge computational cost made real-time raytracing impractical. The availability of massively parallel GPU hardware and optimized algorithms has now enabled its use in real-time applications like games.

Why Raytracing for Real-Time Graphics?

Rasterization-based real-time renderers rely on approximations such as shadow maps and screen-space reflections to imitate lighting effects like shadows and reflections. Raytracing instead simulates light propagation directly, which captures the nuances of complex lighting more accurately.

Some key advantages of real-time raytracing:

- Photorealistic materials with accurate reflections and refractions

- Natural looking lighting and shadows from any light source

- Realistic global illumination from light bouncing off surfaces

- Easier content creation as behaviors emerge from the physically based simulation

- More lifelike animations as lighting responds naturally to all motion

This leap in visual quality is why raytracing is seen as the next evolution in real-time graphics, especially for immersive media like games and VR.

How GPU Raytracing Works

Dedicated raytracing hardware like Nvidia’s RT Cores accelerates the calculations needed for ray-surface intersection tests, such as traversing the scene's acceleration structure and testing rays against triangles. The GPU processes thousands of rays in parallel across its many stream processors.

Key optimizations that have enabled real-time performance:

- Bounding volume hierarchies – The scene is structured as nested volumes to minimize ray intersection tests

- Hybrid rendering – Only some effects like shadows and reflections are raytraced, others use traditional rasterization

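- Variable rate shading – Shading work, and in some renderers ray density, varies across the image so that effort goes where detail is most visible

- AI denoising – Because only a few rays per pixel can be traced each frame, the raw image is noisy; deep learning algorithms remove that noise from the raytraced image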
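The first optimization in this list, the bounding volume hierarchy, can be sketched in a few lines of Python. This is a toy version under stated assumptions: the boxes are axis-aligned, the tree is built by hand rather than by a real builder, and `Node`, `hits_box`, and `traverse` are hypothetical names for this example. A ray is tested against a box first, and everything inside a box it misses is skipped without further tests.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]


@dataclass
class Node:
    lo: Vec                          # minimum corner of the bounding box
    hi: Vec                          # maximum corner of the bounding box
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    item: Optional[str] = None       # set only on leaves (the object inside)


def hits_box(origin: Vec, direction: Vec, lo: Vec, hi: Vec) -> bool:
    """Slab test: does the ray pass through the axis-aligned box?"""
    t_near, t_far = 0.0, float("inf")
    for o, d, l, h in zip(origin, direction, lo, hi):
        if abs(d) < 1e-12:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < l or o > h:
                return False
            continue
        t0, t1 = (l - o) / d, (h - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False
    return True


def traverse(root: Node, origin: Vec, direction: Vec) -> Tuple[List[str], int]:
    """Return the leaf items whose boxes the ray touches, plus the number
    of box tests performed along the way."""
    found: List[str] = []
    tests = 0
    stack = [root]
    while stack:
        node = stack.pop()
        tests += 1
        if not hits_box(origin, direction, node.lo, node.hi):
            continue  # the whole subtree is skipped
        if node.item is not None:
            found.append(node.item)
        else:
            stack.extend(c for c in (node.left, node.right) if c is not None)
    return found, tests
```

Only the objects returned by `traverse` need an exact ray-surface intersection test. In a scene with millions of triangles, skipping whole subtrees this way is what keeps the number of tests per ray small.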

These techniques allow capable GPUs to raytrace complex scenes at 60 FPS or more. Performance will keep improving with hardware and software advancements.

Raytracing in Games

Real-time raytracing first reached games in late 2018 with Battlefield V, followed in early 2019 by Metro Exodus. Battlefield V raytraced its reflections instead of relying on screen space approximations, while Metro Exodus used raytracing for global illumination.

In 2019, Control combined several raytraced effects, including reflections, indirect diffuse lighting, and contact shadows. In 2020, Watch Dogs: Legion used raytraced reflections to make its city environments look more lifelike.

As the hardware and ecosystem mature, more games will leverage raytracing. It is likely to become the norm for lighting, shadows, reflections, and other effects. Photoreal CG movies may also transition from offline rendering to real-time raytracing.

The Future of Real-Time Raytracing

Raytracing sits at the intersection of advances in physical simulation, parallel computing, and AI. It heralds a new level of realism in real-time 3D graphics.

Nvidia predicts raytracing will achieve full feature film quality in the near future. Ongoing hardware and software developments will unlock the full potential of this revolutionary rendering technique.

The next decade will see raytracing integrated into content creation tools and gaming engines. It will become an integral part of the 3D graphics pipeline, ubiquitous across different platforms and applications.

The natural and accurate lighting behaviors from raytracing can open new creative possibilities. It promises to be transformational for both content creators and consumers of real-time 3D experiences and usher in a new era in computer graphics.