The Power of Computational Imaging: Unlocking the Potential of Label-Free Microscopy

As a seasoned IT professional, I’m excited to delve into the fascinating world of computational imaging and its transformative impact on biological research. In this article, we’ll explore the groundbreaking work presented in the bioRxiv preprint “Robust Virtual Staining of Landmark Organelles,” which outlines a novel approach to overcoming the limitations of traditional fluorescent labeling techniques.

In the realm of cell biology and systems research, the ability to dynamically image and monitor the behavior of key cellular structures, such as nuclei, cell membranes, and organelles, is paramount. Traditionally, this has relied on fluorescent labeling, which can be time-consuming, labor-intensive, and potentially disruptive to cell health. Deep learning-powered virtual staining now offers an alternative: predicting these structures directly from label-free microscopy images.

The Challenges of Traditional Fluorescent Staining

Fluorescent imaging has long been the workhorse of cell biology, allowing researchers to visualize and track the spatial and temporal dynamics of various cellular components. However, this approach has limitations. Labeling cells with fluorescent probes can be complex and time-consuming, and it often requires specialized expertise. In addition, introducing exogenous labels can alter the natural behavior of cells, creating artifacts and compromising the integrity of the experimental data.

Furthermore, fluorescent labels are susceptible to photobleaching. In this process, fluorophores irreversibly lose their ability to emit light under repeated exposure to excitation light. The resulting progressive loss of signal makes it challenging to capture long-term or repeated observations of the same sample.
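To put a rough number on this, here is a minimal Python sketch that models bleaching as a constant fractional loss per exposure, so the signal decays geometrically. The per-frame bleach fraction and the helper names (`remaining_signal`, `frames_until`) are hypothetical and chosen only for illustration; they are not values or code from the preprint. Real fluorophores often bleach with more complex kinetics.

```python
import math


def remaining_signal(initial: float, bleach_fraction: float, n_frames: int) -> float:
    """Fluorescence signal left after `n_frames` exposures.

    Assumes (for illustration only) that every exposure irreversibly bleaches
    the same fraction of the fluorophores that are still active.
    """
    if not 0.0 <= bleach_fraction < 1.0:
        raise ValueError("bleach_fraction must be in [0, 1)")
    return initial * (1.0 - bleach_fraction) ** n_frames


def frames_until(threshold: float, bleach_fraction: float) -> int:
    """Smallest number of exposures after which the relative signal
    drops below `threshold` (e.g. 0.5 = half the starting brightness)."""
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must be in (0, 1)")
    if not 0.0 < bleach_fraction < 1.0:
        raise ValueError("bleach_fraction must be in (0, 1)")
    # Solve (1 - p)^n < threshold for the smallest integer n.
    return math.floor(math.log(threshold) / math.log(1.0 - bleach_fraction)) + 1


# Hypothetical 1% loss per frame over a 100-frame time-lapse
print(round(remaining_signal(1000.0, 0.01, 100), 1))
print(frames_until(0.5, 0.01))
```

With a hypothetical 1% loss per frame, `frames_until(0.5, 0.01)` returns 69. A time-lapse would therefore lose half its brightness in under 70 frames, and after 100 frames only roughly 37% of the starting signal remains.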

Unlocking the Potential of Label-Free Microscopy

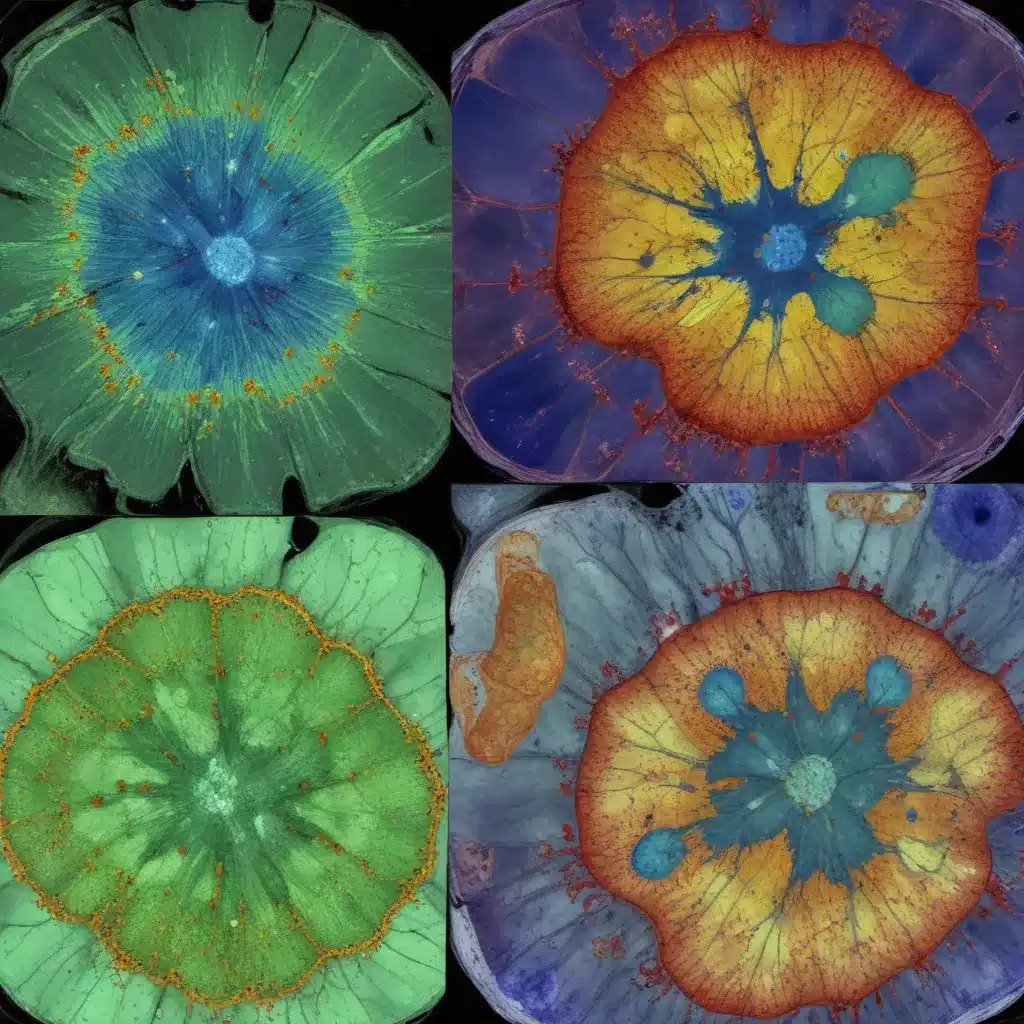

Enter the exciting world of virtual staining, a computational approach that uses deep neural networks to predict images of cellular landmarks from label-free microscopy data. A label-free image records light scattered by many cellular structures at once. A trained network demultiplexes this signal into separate virtual stains for landmarks such as nuclei and cell membranes, without the need for invasive fluorescent labeling.

The benefits of this approach are manifold. First and foremost, virtual staining removes the need to label these landmark structures, increasing the throughput and scalability of biological imaging experiments. Additionally, no fluorescence channels are spent on the landmarks, so the light spectrum stays free for multimodal imaging. Researchers can simultaneously capture additional molecular reporters, perform photomanipulation, or explore other advanced imaging techniques.

Robust Virtual Staining: Overcoming Challenges

The preprint “Robust Virtual Staining of Landmark Organelles” from the Mehta Lab at the Chan Zuckerberg Biohub San Francisco tackles a crucial challenge in the field of virtual staining: existing approaches are fragile in the face of nuisance variations.

Nuisance variations are factors unrelated to the biology of interest that nonetheless change the appearance of the microscopy data, such as differences in imaging parameters, cell culture conditions, or cell types. These variations can significantly degrade the performance of virtual staining models, leading to inaccurate predictions and unreliable results.
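One common way to make an image-to-image model tolerant of such variation is data augmentation. During training, the label-free input is randomly perturbed while the fluorescence target stays fixed, so the network learns that these changes should not alter its prediction. The sketch below is a generic illustration under that assumption, written in plain Python on nested lists. The function names and the transform ranges (`scale_range`, `contrast_range`, `noise_std`) are hypothetical choices and are not taken from the preprint's training protocol.

```python
import random
from typing import List, Tuple

Image = List[List[float]]  # a 2D image stored as rows of pixel values


def perturb_input(image: Image, rng: random.Random,
                  scale_range: Tuple[float, float] = (0.5, 1.5),
                  contrast_range: Tuple[float, float] = (0.7, 1.3),
                  noise_std: float = 0.05) -> Image:
    """Return a randomly perturbed copy of a label-free image.

    Illustrative nuisance factors (hypothetical ranges):
    - contrast change around the image mean (e.g. different detector settings)
    - global brightness change (e.g. different illumination or exposure)
    - additive Gaussian noise (e.g. camera noise)
    """
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    scale = rng.uniform(*scale_range)
    contrast = rng.uniform(*contrast_range)
    return [
        [(mean + contrast * (p - mean)) * scale + rng.gauss(0.0, noise_std)
         for p in row]
        for row in image
    ]


def augment_pair(source: Image, target: Image,
                 rng: random.Random) -> Tuple[Image, Image]:
    """Augment one (label-free input, fluorescence target) training pair."""
    # Spatial transforms must be applied identically to input and target;
    # otherwise the pixel-to-pixel correspondence is lost.
    if rng.random() < 0.5:
        source = [row[::-1] for row in source]
        target = [row[::-1] for row in target]
    # Intensity perturbations touch only the input. The target is unchanged,
    # which teaches the model to ignore these nuisance factors.
    return perturb_input(source, rng), target
```

The key design choice is to apply geometric changes to both images and intensity changes only to the input. This keeps each training pair aligned while varying exactly the factors the model should learn to ignore.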

To address this challenge, the researchers report training protocols together with a flexible and scalable convolutional architecture, UNeXt2, that supports both supervised training and self-supervised pre-training. With these strategies, they achieve robust virtual staining of nuclei and cell membranes across a wide range of cell types and imaging conditions, including human cell lines, zebrafish neuromasts, and induced pluripotent stem cell (iPSC)-derived neurons.

Key Highlights and Innovations

The preprint presents several key innovations and highlights that demonstrate the power and versatility of this virtual staining approach:

- Robust to Variations: The developed models are capable of producing high-quality virtual stains of nuclei and cell membranes, even in the presence of nuisance variations in imaging parameters, cell states, and cell types.
- Multitasking Capabilities: The virtual staining models can simultaneously reconstruct multiple cellular landmarks, such as nuclei and cell membranes, from a single label-free microscopy input, enabling correlative dynamic imaging.
- Preserving Light Spectrum: By demultiplexing the scattered light signals, the virtual staining approach leaves the light spectrum available for imaging additional molecular reporters, photomanipulation, or other advanced imaging techniques.
- Overcoming Limitations: The models are shown to rescue missing labels, non-uniform expression of labels, and photobleaching, common challenges encountered with traditional fluorescent staining methods.
- Scalable and Flexible Architecture: The UNeXt2 architecture offers a flexible and scalable solution for virtual staining, supporting both supervised training and self-supervised pre-training to enhance the models’ robustness and generalizability.
- Comprehensive Evaluation: The authors rigorously assess the performance of the virtual staining models by comparing the intensity, segmentations, and application-specific measurements obtained from virtually stained and experimentally stained nuclei and cell membranes.
- Open-Source Resources: The researchers have shared pre-trained models (VSCyto3D, VSNeuromast, and VSCyto2D) and a PyTorch-based pipeline (VisCy) for training, inference, and deployment, leveraging the OME-Zarr format, a community standard for image data and metadata.
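The intensity and segmentation comparisons described under Comprehensive Evaluation can be illustrated with standard measures. One is the Pearson correlation between virtually and experimentally stained pixel intensities. The others are overlap scores (Dice and intersection over union) between segmentation masks derived from each. This minimal, dependency-free sketch assumes that both images and masks are already aligned and flattened into equal-length lists. The function names are hypothetical, and the preprint's exact metrics and segmentation pipeline may differ.

```python
import math
from typing import Sequence


def pearson_r(virtual: Sequence[float], experimental: Sequence[float]) -> float:
    """Pearson correlation between two flattened images of equal size."""
    n = len(virtual)
    if n != len(experimental) or n < 2:
        raise ValueError("images must have the same size (at least 2 pixels)")
    mv = sum(virtual) / n
    me = sum(experimental) / n
    cov = sum((v - mv) * (e - me) for v, e in zip(virtual, experimental))
    var_v = sum((v - mv) ** 2 for v in virtual)
    var_e = sum((e - me) ** 2 for e in experimental)
    if var_v == 0 or var_e == 0:
        raise ValueError("correlation is undefined for a constant image")
    return cov / math.sqrt(var_v * var_e)


def dice(mask_a: Sequence[bool], mask_b: Sequence[bool]) -> float:
    """Dice overlap of two flattened binary masks (1.0 = identical)."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same size")
    overlap = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    total = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    return 1.0 if total == 0 else 2.0 * overlap / total


def iou(mask_a: Sequence[bool], mask_b: Sequence[bool]) -> float:
    """Intersection over union (Jaccard index) of two flattened binary masks."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same size")
    inter = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    union = sum(1 for a, b in zip(mask_a, mask_b) if a or b)
    return 1.0 if union == 0 else inter / union
```

For example, `dice([1, 1, 0, 0], [1, 0, 1, 0])` is 0.5 and `iou` on the same masks is 1/3. Dice weights the shared pixels twice, so it is always at least as large as IoU for the same pair of masks.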

Practical Applications and Future Directions

The robust virtual staining approach presented in this preprint has far-reaching implications for the field of biological imaging and cell biology research. By overcoming the limitations of traditional fluorescent labeling techniques, this technology can significantly streamline experimental workflows, increase throughput, and enable more comprehensive and unperturbed observations of cellular dynamics.

Some practical applications of this virtual staining technology include:

- High-Throughput Phenotyping: The ability to rapidly and accurately image cellular landmarks across various cell types and conditions can greatly enhance image-based phenotyping for drug discovery, disease modeling, and systems biology.
- Correlative Imaging: The simultaneous virtual staining of multiple cellular structures can enable the integration of diverse imaging modalities, providing a more holistic understanding of cellular function and organization.
- Long-Term Tracking: Virtual stains are predicted from label-free images rather than emitted by fluorophores, so they do not fade from photobleaching, and the light spectrum stays free. Both properties can facilitate long-term, time-lapse imaging studies and reveal cellular processes that are hard to follow with fluorescent labels alone.
- Multimodal Imaging: By freeing up the light spectrum, virtual staining can pave the way for the integration of additional molecular reporters, optogenetic manipulations, or other advanced imaging techniques, further expanding the experimental capabilities of researchers.

As the field of computational imaging continues to evolve, the robust virtual staining techniques presented in this preprint represent a significant step forward in unlocking the full potential of label-free microscopy. By empowering researchers with a reliable, versatile, and scalable solution for imaging cellular landmarks, this technology has the potential to transform the way we approach cell biology research and drive new discoveries in the years to come.
