What is Reinforcement Learning?

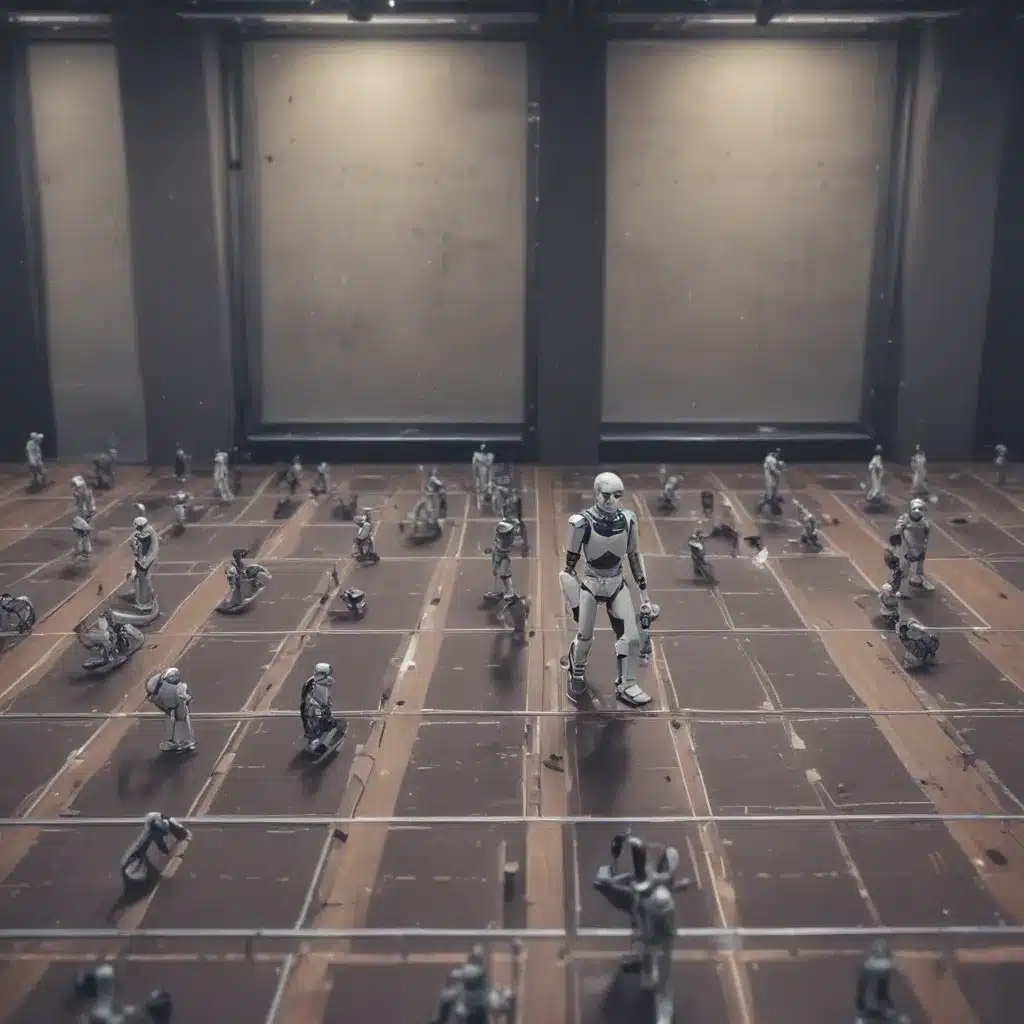

Reinforcement learning is a rapidly advancing branch of machine learning, itself a field of artificial intelligence (AI). It focuses on how AI systems can learn to make decisions and take actions by interacting with their environment. The core idea is that an AI agent, much like a human or animal, can learn through trial and error, receiving feedback in the form of rewards or punishments for the actions it takes. By learning which actions lead to positive outcomes and which lead to negative ones, the agent can gradually improve its decisions and become more effective at achieving its goals.

At the heart of reinforcement learning are two entities. The “agent” is the AI system that learns and makes decisions. The “environment” is the world in which the agent operates and interacts. The agent observes the current state of the environment and selects an action. The environment then responds with a reward or punishment and moves to a new state. Based on this feedback, the agent updates its understanding of the environment and refines its decision-making, becoming more skilled at navigating the environment and achieving its objectives over time.

One of the key advantages of reinforcement learning is its flexibility and adaptability. In supervised learning, an AI system is trained on a fixed dataset of labeled examples that show it the correct answer for a specific task. A reinforcement learning agent receives no such labels. Instead, it learns from a reward signal and from the experience its own actions generate, which allows it to adapt to dynamic, complex environments. This makes it well suited to a wide range of applications, from robotics and game-playing to resource optimization and decision-making in business and finance.

The Key Components of Reinforcement Learning

Reinforcement learning systems typically consist of several key components:

- The Agent: This is the AI system that is learning and making decisions. The agent observes the environment, takes actions, and receives rewards or punishments.
- The Environment: This is the world in which the agent operates and interacts. The environment can be physical, such as a maze that a robot must navigate, or digital, such as a game or a financial market.
- The State: This is the current situation of the environment, as perceived by the agent. The state can include the agent’s own position, the configuration of the world around it, or any other relevant factors.
- The Action: This is the decision or behavior that the agent chooses in response to the current state.
- The Reward: This is a numerical signal that the environment sends after each action, indicating how desirable the outcome was. Rewards can be positive (a good outcome) or negative (a bad outcome, often called a punishment).
- The Policy: This is the decision-making strategy that maps each state to an action, or to probabilities over actions. The agent’s goal is to learn an optimal policy, one that maximizes its expected cumulative reward over time.
- The Value Function: This is an estimate of the long-term reward the agent can expect. The state-value function measures the expected cumulative reward from a given state when following the policy. The action-value function (or Q-function) measures the expected cumulative reward from taking a particular action in a given state and following the policy afterward. Many algorithms try to learn the optimal value function and use it to guide decisions.

These components work together in a feedback loop, with the agent continuously observing the environment, taking actions, and receiving rewards or punishments, which it then uses to update its policy and value function, becoming more effective over time.
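To make “long-term reward” concrete, a common formulation scores a sequence of rewards by its discounted return, G = r1 + γ·r2 + γ²·r3 + …. The discount factor γ is a number between 0 and 1 that makes rewards arriving later count for less, and the value function is the expected value of this return. The Python sketch below computes the return for one recorded episode. The function name `discounted_return` and the default γ = 0.9 are illustrative choices, not taken from any particular library.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float = 0.9) -> float:
    """Compute G = r_1 + gamma * r_2 + gamma^2 * r_3 + ... for one episode.

    Iterating backwards uses the recursion G_t = r_{t+1} + gamma * G_{t+1},
    so powers of gamma never need to be computed explicitly.
    """
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must be in [0, 1]")
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# The same reward is worth less when it arrives two steps later (discounted by gamma ** 2).
print(discounted_return([1.0, 0.0, 0.0]))  # 1.0
print(discounted_return([0.0, 0.0, 1.0]))  # 0.81
```

Discounting also gives a simple, if crude, answer to the question of how much a delayed reward should count toward an earlier decision.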

How Does Reinforcement Learning Work?

The basic process of reinforcement learning can be broken down into the following steps:

- Observation: The agent observes the current state of the environment, taking in information about its current position, the state of the world, and any other relevant factors.
- Action Selection: Based on the current state, the agent selects an action, using its current policy to guide its decision.
- Reward/Punishment: The environment responds with a reward or punishment based on the outcome of the action, and moves to a new state.
- Policy Update: The agent uses this feedback to update its policy, learning which actions are likely to lead to positive outcomes and which are likely to lead to negative ones.
- Value Update: Many algorithms also (or instead) update a value function, refining the agent’s estimate of the long-term expected reward associated with different states and actions.
- Repeat: The agent repeats this process from the new state, continually observing, acting, and receiving feedback, gradually improving its decisions over time.

This cycle of observation, action, feedback, and policy/value update is the core of reinforcement learning, and it allows the agent to learn and adapt to its environment through a process of trial and error.
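The cycle above can be sketched as a short Python loop. Everything here is hypothetical and chosen for illustration. `Corridor` is a toy environment: a row of cells with a reward of 1 for reaching the rightmost one. Its `reset`/`step` methods loosely mirror the interface common in RL toolkits, and the policy simply moves at random. The policy and value updates are left as a comment, since how they are done is exactly what distinguishes one algorithm from another.

```python
import random
from typing import Callable, Tuple


class Corridor:
    """Hypothetical toy environment: cells 0..length-1, reward 1.0 at the right end."""

    def __init__(self, length: int = 5) -> None:
        self.length = length
        self.state = 0

    def reset(self) -> int:
        """Start a new episode in the leftmost cell and return the initial state."""
        self.state = 0
        return self.state

    def step(self, action: int) -> Tuple[int, float, bool]:
        """Apply an action (-1 = left, +1 = right); return (next_state, reward, done)."""
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def run_episode(env: Corridor, policy: Callable[[int], int], max_steps: int = 100) -> float:
    """Run one episode of the observe/act/feedback cycle and return the total reward."""
    state = env.reset()  # observation: the starting state
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)  # action selection
        next_state, reward, done = env.step(action)  # reward/punishment and new state
        total_reward += reward
        # Policy/value update would go here, e.g. a hypothetical
        # learn(state, action, reward, next_state).
        state = next_state  # repeat from the new state
        if done:
            break
    return total_reward


rng = random.Random(0)


def random_policy(state: int) -> int:
    """Ignore the state and move left or right with equal probability."""
    return rng.choice([-1, 1])


print(run_episode(Corridor(), random_policy))  # 1.0 if the goal was reached within 100 steps, else 0.0
```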

Reinforcement Learning Algorithms

There are a number of different algorithms and techniques that can be used in reinforcement learning, each with its own strengths, weaknesses, and applications. Some of the most commonly used reinforcement learning algorithms include:

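- Q-Learning: Q-learning is a popular model-free reinforcement learning algorithm that learns the value of taking a particular action in a given state, known as the Q-value. After each step, it moves the Q-value toward the reward received plus the discounted value of the best action available in the next state. Under suitable conditions (enough exploration and an appropriately decaying learning rate), tabular Q-learning converges to the optimal Q-values. The agent then obtains an optimal policy by choosing, in each state, the action with the highest Q-value.
- Deep Q-Learning: Deep Q-learning is a variation of Q-learning that uses a deep neural network to approximate the Q-function instead of storing a table of values. This allows the agent to handle complex, high-dimensional environments, such as video games played from raw screen pixels.
- Policy Gradient Methods: Policy gradient methods learn the policy directly, rather than deriving it from a value function. They adjust the policy’s parameters by gradient ascent on the expected long-term reward, making actions that led to high rewards more likely.
- Actor-Critic Methods: Actor-critic methods combine value-based and policy-based approaches. One component (the “actor”) learns the policy, while another (the “critic”) learns a value function that is used to evaluate the actor’s actions; both are often neural networks. Using the critic’s estimates reduces the variance of the policy updates, which can lead to more stable and efficient learning.
- Monte Carlo Methods: Monte Carlo methods estimate the value of states and actions by averaging the returns actually observed over complete episodes, without relying on a model of the environment. Because they wait until an episode ends, they suit tasks that naturally break into episodes. They can be useful in complex environments where the dynamics are not well understood.
- Temporal Difference Learning: Temporal difference (TD) learning is a class of reinforcement learning algorithms that update the value estimate after every step. Each update is driven by the “TD error”: the difference between the current estimate and a target made of the immediate reward plus the discounted estimate for the next state. Because they do not need to wait for an episode to end, TD methods often learn faster than Monte Carlo methods, at the cost of some bias from relying on their own estimates. Q-learning is itself a TD method.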
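As a concrete example of the first algorithm in the list, here is a minimal tabular Q-learning sketch in Python. It assumes a hypothetical six-cell corridor where reaching the rightmost cell ends the episode with a reward of 1. The hyperparameters (learning rate `alpha`, discount `gamma`, exploration rate `epsilon`) are illustrative and untuned. Each update moves Q(s, a) toward the TD target r + γ·max Q(s′, a′), which is what makes Q-learning a temporal difference method.

```python
import random
from collections import defaultdict
from typing import Dict, Tuple

ACTIONS = (-1, 1)  # move left, move right


def step(state: int, action: int, length: int) -> Tuple[int, float, bool]:
    """Hypothetical corridor: reaching the rightmost cell gives reward 1.0 and ends the episode."""
    next_state = min(max(state + action, 0), length - 1)
    done = next_state == length - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes: int = 500, alpha: float = 0.1, gamma: float = 0.9,
               epsilon: float = 0.1, length: int = 6, seed: int = 0) -> Dict[Tuple[int, int], float]:
    """Tabular Q-learning with epsilon-greedy exploration; returns the Q-table."""
    rng = random.Random(seed)
    q: Dict[Tuple[int, int], float] = defaultdict(float)  # unseen (state, action) pairs start at 0.0
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                # exploit, breaking ties at random
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action, length)
            # TD target: reward plus discounted value of the best next action;
            # a terminal state has no future value, so there is no bootstrapping there.
            target = reward if done else reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q


q_table = q_learning()
# Greedy policy for the non-terminal cells 0..4: the action with the highest Q-value.
greedy = {s: max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(5)}
print(greedy)
```

After training, the greedy policy should move right in every cell. Replacing the table with a neural network that maps states to Q-values is the basic idea behind deep Q-learning, although practical versions add further machinery such as experience replay and a target network.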

Each of these algorithms has its own strengths and weaknesses, and the choice of algorithm will depend on the specific problem, the available computational resources, and the characteristics of the environment.
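To illustrate the policy gradient idea, the sketch below applies the REINFORCE update, a basic Monte Carlo policy gradient method, to the simplest possible problem. This is a one-step “bandit” where each action has a fixed reward. The reward values, the learning rate, and the fixed baseline of 0.5 subtracted from each reward are assumptions made for the example. The policy is a softmax over one preference per action. Each step samples an action and nudges the preferences along (reward − baseline) × ∇ log π(action).

```python
import math
import random
from typing import List, Sequence


def softmax(prefs: Sequence[float]) -> List[float]:
    """Turn action preferences into a probability distribution (numerically stable)."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(rewards: Sequence[float], steps: int = 1000, lr: float = 0.5,
                     baseline: float = 0.5, seed: int = 0) -> List[float]:
    """REINFORCE on a one-step (bandit) problem with a softmax policy.

    rewards[a] is the hypothetical, deterministic reward for action a.
    Returns the final action probabilities.
    """
    rng = random.Random(seed)
    prefs = [0.0] * len(rewards)  # policy parameters: one preference per action
    for _ in range(steps):
        probs = softmax(prefs)
        action = rng.choices(range(len(rewards)), weights=probs)[0]  # sample from the policy
        advantage = rewards[action] - baseline
        for i in range(len(prefs)):
            # gradient of log pi(action) with respect to prefs[i] for a softmax policy
            grad_log = (1.0 if i == action else 0.0) - probs[i]
            prefs[i] += lr * advantage * grad_log  # gradient ascent on expected reward
    return softmax(prefs)


probs = reinforce_bandit([0.0, 1.0])
print([round(p, 3) for p in probs])  # probability mass shifts toward action 1
```

Subtracting a baseline that does not depend on the action leaves the expected direction of the update unchanged but reduces its variance. Actor-critic methods take this further by learning a value estimate (the critic) instead of fixing the baseline by hand.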

Applications of Reinforcement Learning

Reinforcement learning has a wide range of real-world applications, from gaming and robotics to finance and resource optimization. Here are some examples of how reinforcement learning is being used:

- Game AI: Reinforcement learning has been used to create AI agents that play complex games, such as chess, Go, and Dota 2, at or above the level of top human players. These agents learn by playing the game repeatedly, often against themselves, and adjusting their strategies based on the feedback they receive.
- Robotics and Control Systems: Reinforcement learning can be used to train robots to navigate complex environments, manipulate objects, and perform a variety of tasks. This can be particularly useful in situations where the environment is dynamic and unpredictable.
- Resource Optimization: Reinforcement learning can be used to allocate resources in domains such as energy, transportation, or manufacturing, by learning to make decisions that maximize efficiency and minimize waste.
- Finance and Trading: Reinforcement learning can be used in financial applications, such as portfolio management and algorithmic trading, to learn strategies for making investment decisions based on market conditions and historical data.
- Healthcare and Medicine: Reinforcement learning can be used to optimize treatment plans, drug dosages, and other medical interventions by learning from patient data and outcomes.
- Recommendation Systems: Reinforcement learning can be used to create personalized recommendation systems that learn to suggest products, content, or services tailored to the user’s preferences and behaviors.
- Natural Language Processing: Reinforcement learning can be used to fine-tune language models and chatbots, for example through reinforcement learning from human feedback (RLHF). This helps them generate responses that are more natural, appropriate, and relevant to the user’s input.

These are just a few examples of the many ways that reinforcement learning is being applied in the real world. As the field continues to advance, we can expect to see even more innovative and impactful applications of this powerful AI technology.

The Challenges and Limitations of Reinforcement Learning

While reinforcement learning is a powerful and promising approach to AI, it also comes with a number of challenges and limitations that need to be addressed:

- Exploration vs. Exploitation: One of the key challenges in reinforcement learning is balancing exploration with exploitation. Exploration means trying new actions and strategies that might turn out to be better. Exploitation means using the best strategy known so far to collect reward. Too little exploration can leave the agent stuck with a mediocre strategy, while too much wastes time on actions already known to be poor.
- Credit Assignment: Determining which actions or decisions were responsible for a particular outcome can be challenging, especially in complex environments with many variables and delayed rewards. This “credit assignment” problem can make it difficult for the agent to learn effectively.
- Sample Efficiency: Reinforcement learning algorithms often require a very large number of interactions with the environment to learn effectively. This is a significant limitation in real-world applications, where each interaction may be slow, costly, or risky, and where data and computational resources may be scarce.
- Scalability: As the complexity of the environment and the agent’s decision-making increases, the computational and memory requirements of reinforcement learning algorithms can become prohibitive. Scaling up these algorithms to handle large-scale, high-dimensional problems is an active area of research.
- Generalization: Reinforcement learning agents can struggle to generalize their learned policies to new, previously unseen situations or environments. This can limit the practical applicability of these systems in the real world.
- Safety and Robustness: Ensuring the safety and robustness of reinforcement learning agents is crucial, especially in applications where the consequences of poor decision-making can be severe. Addressing issues of safety, stability, and reliability is an important challenge in the field.
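The exploration vs. exploitation trade-off is often handled with a simple ε-greedy rule, shown here as a Python sketch. With probability ε the agent picks a random action (exploration); otherwise it picks the action with the highest current estimate (exploitation). The function names `epsilon_greedy` and `linear_decay` are hypothetical. The linear schedule that lowers ε over time and the action-value estimates in the usage example are also illustrative values. ε-greedy is only one of several exploration strategies.

```python
import random
from typing import Sequence


def epsilon_greedy(q_values: Sequence[float], epsilon: float, rng: random.Random) -> int:
    """Return an action index: uniformly random with probability epsilon, otherwise greedy."""
    if not 0.0 <= epsilon <= 1.0:
        raise ValueError("epsilon must be in [0, 1]")
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore: try any action
    best = max(q_values)
    # exploit: pick the best-looking action, breaking ties at random
    return rng.choice([a for a, q in enumerate(q_values) if q == best])


def linear_decay(step: int, start: float = 1.0, end: float = 0.05, decay_steps: int = 1000) -> float:
    """Hypothetical schedule: explore heavily at first, then mostly exploit."""
    frac = min(step / decay_steps, 1.0)
    return start + frac * (end - start)


rng = random.Random(42)
q = [0.2, 0.8, 0.5]  # illustrative action-value estimates
counts = [0, 0, 0]
for t in range(2000):
    counts[epsilon_greedy(q, linear_decay(t), rng)] += 1
print(counts)  # most picks go to action 1, but every action is still tried
```

Decaying ε reflects the intuition that exploration is most valuable early on, when the agent’s estimates are least reliable.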

Despite these challenges, researchers and practitioners are actively working to address these limitations and advance the state of the art in reinforcement learning. Through continued innovation and development, we can expect to see even more powerful and capable reinforcement learning systems in the years to come.

The Future of Reinforcement Learning

As the field of reinforcement learning continues to evolve, we can expect to see a number of exciting developments and advancements in the years ahead. Some of the key trends and areas of focus in the future of reinforcement learning include:

- Improved Sample Efficiency: Researchers are exploring ways to make reinforcement learning algorithms more sample-efficient, reducing the number of trials and interactions needed to learn effective policies. This could involve incorporating additional sources of information, such as expert demonstrations or simulated environments, or developing more sophisticated credit assignment and exploration strategies.
- Scalable and Efficient Algorithms: There is a growing focus on developing reinforcement learning algorithms that can scale to handle larger, more complex problems, while maintaining computational and memory efficiency. This could involve advances in areas like deep learning, distributed computing, and hierarchical decision-making.
- Generalization and Transfer Learning: Researchers are working to improve the ability of reinforcement learning agents to generalize their learned policies to new, previously unseen situations and environments. This could involve developing more flexible and adaptive policy representations, or leveraging transfer learning techniques to apply knowledge gained in one domain to another.
- Safe and Reliable Reinforcement Learning: Ensuring the safety and reliability of reinforcement learning systems is a critical priority, especially as these technologies are applied in high-stakes domains like healthcare, transportation, and finance. This could involve developing new techniques for ensuring stability, robustness, and alignment with human values and preferences.
- Multiagent and Cooperative Reinforcement Learning: As reinforcement learning systems become more sophisticated, there is growing interest in agents that interact with one another, whether by cooperating, competing, or both. This could lead to new breakthroughs in areas like multi-robot coordination, collective decision-making, and social intelligence.
- Interpretability and Explainability: As reinforcement learning systems become more complex and opaque, there is a growing need to develop techniques for making these systems more interpretable and explainable, so that their decision-making processes can be understood and validated by humans.
- Integration with Other AI Techniques: Reinforcement learning is likely to become increasingly integrated with other AI techniques, such as natural language processing, computer vision, and planning, to create more versatile and capable AI systems that can tackle a wide range of real-world problems.

Overall, the future of reinforcement learning looks bright, with the potential to revolutionize a wide range of industries and applications. As the field continues to evolve and advance, we can expect to see even more exciting and impactful developments in the years to come.

Conclusion

Reinforcement learning is a powerful and versatile approach to AI that has the potential to transform a wide range of industries and applications. By allowing AI systems to learn through a process of trial and error, reinforcement learning enables them to adapt to complex, dynamic environments and make increasingly effective decisions over time.

While reinforcement learning does come with its own set of challenges and limitations, researchers and practitioners are actively working to address these issues and push the boundaries of what is possible. As the field continues to evolve, we can expect to see even more impressive and impactful applications of this revolutionary AI technology.

Whether you’re interested in gaming, robotics, finance, or any other domain, reinforcement learning is a field that is well worth exploring. By understanding the key principles and techniques of reinforcement learning, you’ll be better equipped to navigate the rapidly changing landscape of AI and harness its full potential.

So, if you’re ready to dive deeper into the world of reinforcement learning and discover how it can transform the way we approach problem-solving and decision-making, I encourage you to continue exploring this fascinating and rapidly advancing field. The future is bright, and the possibilities are endless!