What is Photogrammetry?

Photogrammetry is the process of generating 3D models from 2D photographs. It involves taking many overlapping photographs of an object or environment from all angles, often hundreds and sometimes thousands. These photographs are then fed into photogrammetry software, which analyzes the images to calculate the 3D structure and surface texture of the subject.
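
To see why a capture quickly runs into hundreds of photos, here is a rough planning sketch for shooting one flat wall as a grid of overlapping frames. It is a minimal pinhole-camera estimate, not a rule taken from any photogrammetry package. The helper names, the 36 × 24 mm sensor, the 35 mm lens, the 2 m shooting distance, and the 80% overlap are all assumptions chosen for illustration.

```python
import math


def shots_for_pass(length_m: float, distance_m: float, sensor_mm: float,
                   focal_length_mm: float, overlap: float) -> int:
    """Photos needed to cover `length_m` in one straight pass (pinhole model).

    One photo covers distance * sensor / focal_length metres along the pass;
    consecutive photos share `overlap` (a fraction in [0, 1)) of that footprint.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    footprint_m = distance_m * sensor_mm / focal_length_mm
    if footprint_m >= length_m:
        return 1
    step_m = footprint_m * (1.0 - overlap)  # how far the camera moves between shots
    return math.ceil((length_m - footprint_m) / step_m) + 1


def shots_for_wall(width_m: float, height_m: float, distance_m: float,
                   sensor_w_mm: float, sensor_h_mm: float,
                   focal_length_mm: float, overlap: float) -> int:
    """Columns x rows of photos covering a flat wall, same overlap both ways."""
    cols = shots_for_pass(width_m, distance_m, sensor_w_mm, focal_length_mm, overlap)
    rows = shots_for_pass(height_m, distance_m, sensor_h_mm, focal_length_mm, overlap)
    return cols * rows


# Hypothetical shoot: 20 m x 4 m wall, 36 x 24 mm sensor, 35 mm lens, 2 m away, 80% overlap.
print(shots_for_wall(20, 4, 2, 36, 24, 35, 0.8))  # 495 (45 columns x 11 rows)
```

That is a single frontal grid of one flat surface; covering a subject from all angles adds further passes on top of it.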

The software first matches common feature points between photos to work out where each camera was and which way it was pointing. It then uses those camera positions and orientations to triangulate a dense 3D point cloud: a collection of data points in 3D space that define the shape of the subject. From this point cloud, the software builds a 3D mesh and wraps it with textures projected from the original photographs, producing an extremely detailed and lifelike 3D model.
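
The core geometric step, turning a point matched in two photos into a 3D point, can be sketched with midpoint triangulation. This is a minimal illustration under simplifying assumptions: both cameras' positions, orientations, and pinhole intrinsics are taken as already known (real software estimates them from the matches themselves and refines them across many photos), and the `Camera` class and helper names are hypothetical. The midpoint method is one simple triangulation technique, not necessarily what any particular package uses.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]  # row-major 3x3 matrix


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def along(p: Vec3, s: float, d: Vec3) -> Vec3:
    """Point reached by moving from p by s times direction d."""
    return (p[0] + s * d[0], p[1] + s * d[1], p[2] + s * d[2])


@dataclass
class Camera:
    center: Vec3     # camera position in world space
    rotation: Mat3   # world-to-camera rotation R; the camera looks along its +z axis
    focal_px: float  # focal length in pixels
    cx: float        # principal point x, in pixels
    cy: float        # principal point y, in pixels

    def project(self, X: Vec3) -> Tuple[float, float]:
        """Pinhole projection of world point X to pixel coordinates (u, v)."""
        rel = (X[0] - self.center[0], X[1] - self.center[1], X[2] - self.center[2])
        x, y, z = (dot(row, rel) for row in self.rotation)
        return (self.focal_px * x / z + self.cx, self.focal_px * y / z + self.cy)

    def ray(self, u: float, v: float) -> Vec3:
        """World-space direction of the ray through pixel (u, v): R^T K^-1 [u, v, 1]."""
        c = ((u - self.cx) / self.focal_px, (v - self.cy) / self.focal_px, 1.0)
        R = self.rotation
        return tuple(R[0][i] * c[0] + R[1][i] * c[1] + R[2][i] * c[2] for i in range(3))


def triangulate_midpoint(cam1: Camera, px1: Tuple[float, float],
                         cam2: Camera, px2: Tuple[float, float]) -> Vec3:
    """Midpoint of the shortest segment between the two viewing rays."""
    d1, d2 = cam1.ray(*px1), cam2.ray(*px2)
    w0 = tuple(a - b for a, b in zip(cam1.center, cam2.center))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; no baseline to triangulate")
    s = (b * e - c * d) / denom  # position of the closest point along ray 1
    t = (a * e - b * d) / denom  # position of the closest point along ray 2
    p1, p2 = along(cam1.center, s, d1), along(cam2.center, t, d2)
    return tuple((p + q) / 2.0 for p, q in zip(p1, p2))


# Two hypothetical cameras 2 m apart, both facing +z, viewing a point 5 m away.
I3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
left = Camera((-1.0, 0.0, 0.0), I3, 1000.0, 640.0, 480.0)
right = Camera((1.0, 0.0, 0.0), I3, 1000.0, 640.0, 480.0)
X = (0.0, 0.0, 5.0)
print(triangulate_midpoint(left, left.project(X), right, right.project(X)))  # ≈ (0.0, 0.0, 5.0)
```

With noise-free pixel coordinates the two rays meet exactly; with real, noisy matches they only pass close to each other, and the midpoint of their closest approach serves as the estimate. Repeating this for many matched points is, in spirit, how the point cloud is built up.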

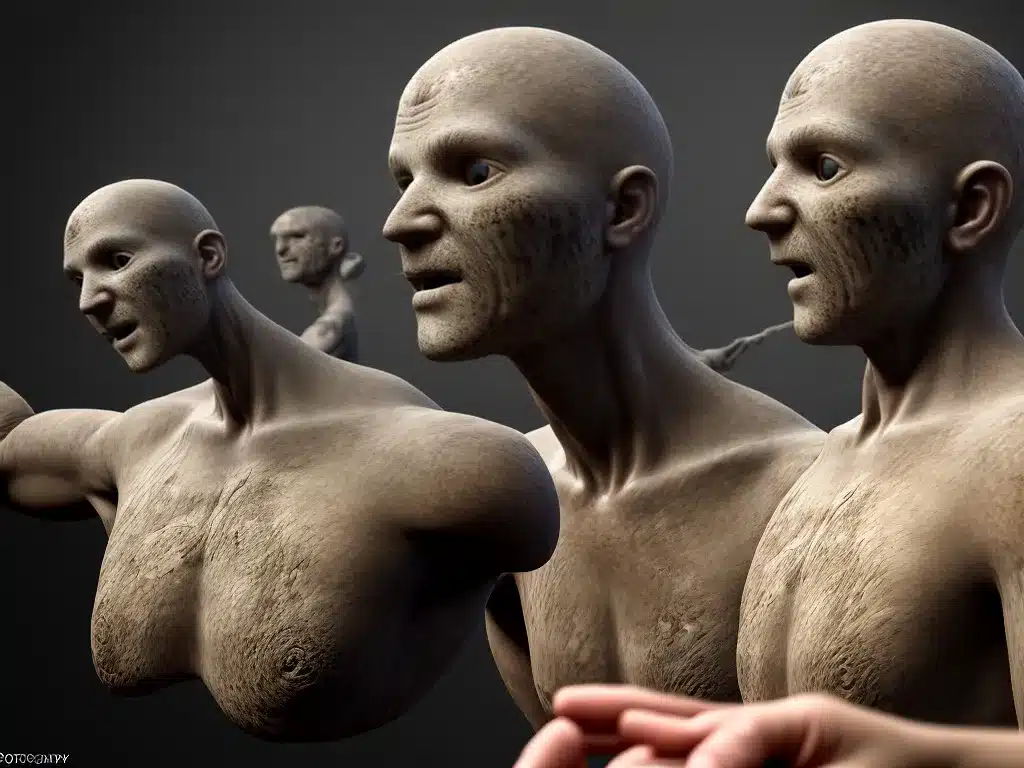

How Photogrammetry Creates Realistic 3D Game Assets

Photogrammetry allows game developers to rapidly scan real-world objects, locations, and even people to create in-game assets with a high level of visual fidelity. The photographic textures retain all the fine details and imperfections of the real subject, bringing an unprecedented level of realism to 3D models.

Some of the benefits of using photogrammetry for games include:

- Detail – Photogrammetry models accurately reconstruct tiny details from photos, such as cracks, grain, and stains. This level of high-frequency detail is hard to model by hand.
- Realism – The photographic textures ground the models in reality, with accurate colors and material qualities. (Lighting and shadows captured in the photos usually have to be removed so the asset responds correctly to in-game lighting.) This helps with suspension of disbelief.
- Efficiency – For detailed real-world subjects, photogrammetry is usually much faster than manual modeling. Entire environments can be scanned and reconstructed in days or weeks rather than months.
- Accessibility – Photogrammetry democratizes detailed scanning. It lets indie developers and modders create detailed assets quickly without a huge art budget.

Photogrammetry vs Traditional 3D Modeling

While traditional 3D modeling relies on an artist manually shaping polygons to sculpt a digital asset, photogrammetry generates models straight from photographic reference. This leads to some key differences:

- Detail – Photogrammetry excels at capturing tiny, high-frequency details, whereas modelers often skip or approximate small details because of the effort involved.
- Realism – The photographic source gives photogrammetry inherent realism. Traditional modelers have to recreate real-world imperfections by hand.
- Efficiency – Modeling by hand is extremely time-intensive compared with automated photogrammetry reconstruction.
- Control – Modelers have more control over shape and topology. Photogrammetry is limited by scan resolution and camera coverage, and raw scans typically need retopology and cleanup before they are usable in a game.
- Accessibility – Photogrammetry has a much lower barrier to entry. Modeling requires specialized skills built over years.
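
The "scan resolution" limit in the Control point can be estimated before a shoot with the ground sampling distance (GSD): the size of the patch of surface that one pixel covers, which under a pinhole model is distance × sensor width / (focal length × image width in pixels). The sketch below applies that relation; the function names and the camera figures (36 mm sensor width, 6000 px image width, 35 mm lens) are illustrative assumptions, not values from this article.

```python
def gsd_mm_per_px(distance_mm: float, sensor_width_mm: float,
                  focal_length_mm: float, image_width_px: int) -> float:
    """Width of surface covered by one pixel at the given distance, in mm (pinhole model)."""
    return distance_mm * sensor_width_mm / (focal_length_mm * image_width_px)


def max_distance_mm(target_gsd_mm: float, sensor_width_mm: float,
                    focal_length_mm: float, image_width_px: int) -> float:
    """Farthest camera distance that still achieves target_gsd_mm per pixel."""
    return target_gsd_mm * focal_length_mm * image_width_px / sensor_width_mm


# Hypothetical kit: 36 mm wide sensor, 6000 px wide images, 35 mm lens.
print(gsd_mm_per_px(2000, 36, 35, 6000))   # ~0.343 mm per pixel when shooting from 2 m
print(max_distance_mm(0.5, 36, 35, 6000))  # ~2917 mm: stay within ~2.9 m for 0.5 mm per pixel
```

Surface features smaller than about one pixel's footprint cannot show up in the photos, so they cannot be reconstructed either; capturing finer detail means moving closer or using a longer lens, which in turn means more photos.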

In summary, photogrammetry offers unmatched realism and detail, while traditional modeling provides greater creative control and flexibility. Many studios combine both techniques.

Case Study: Star Wars Battlefront II by DICE

DICE set a new standard for photorealism in games by using photogrammetry extensively in Star Wars Battlefront II. The studio collaborated with Lucasfilm to scan movie props, costumes, terrain elements, and actors, bringing iconic Star Wars assets into the Frostbite engine.

According to DICE, the team captured over 60 terabytes of photographic data during development. Many recognizable Star Wars environments, such as the Death Star, Hoth, Tatooine, and Sullust, were constructed using thousands of photogrammetric scans.

DICE used photogrammetry for major characters such as Admiral Ackbar and Leia Organa so that they would closely match their movie counterparts. The team also scanned forest floors, rock formations, metal panels, and other surfaces that populate larger environments.

The result was a set of strikingly realistic, immersive Star Wars locations that look as if they were lifted directly from the films. Photogrammetry was a key driver of the game's graphical achievements and helped recreate the Star Wars universe in remarkable detail.

The Future of Photogrammetry

While photogrammetry in games is still evolving, a few trends point to where it is heading:

- Hybrid pipelines – Most studios now use a mix of scans and traditional modeling rather than photogrammetry alone. The two complement each other.
- Better software – Programs like RealityCapture, Metashape, and 3DF Zephyr are making photogrammetry more automated and accessible.
- Mobile scanning – Smartphone apps like Polycam can capture photogrammetry scans quickly, almost anywhere.
- Procedural detail – Scan-to-model techniques can convert raw scans into parameterized, editable assets.
- 4D scanning – Companies like 4Dviews are building capture rigs that record changing expressions over time, enabling photoreal digital humans.

As tools and techniques improve, photogrammetry is likely to become an increasingly indispensable part of the quest for greater realism in video games. It is already transforming AAA production pipelines and allowing smaller teams to punch above their weight visually. The future looks bright, and photorealistic, as photogrammetry matures.