The Rise of AI-Generated Content: A Threat to Authenticity?

I recently found myself in a peculiar predicament. As an avid consumer of online content, I had grown accustomed to the seamless flow of information, ideas, and narratives that seemed to pour forth from the digital realm. However, a nagging sense of unease had been creeping up on me, a subtle feeling that something wasn’t quite right. It was as if the words I was reading and the stories I was absorbing were somehow lacking in authenticity, with a faint whiff of artificiality permeating the very fabric of the content.

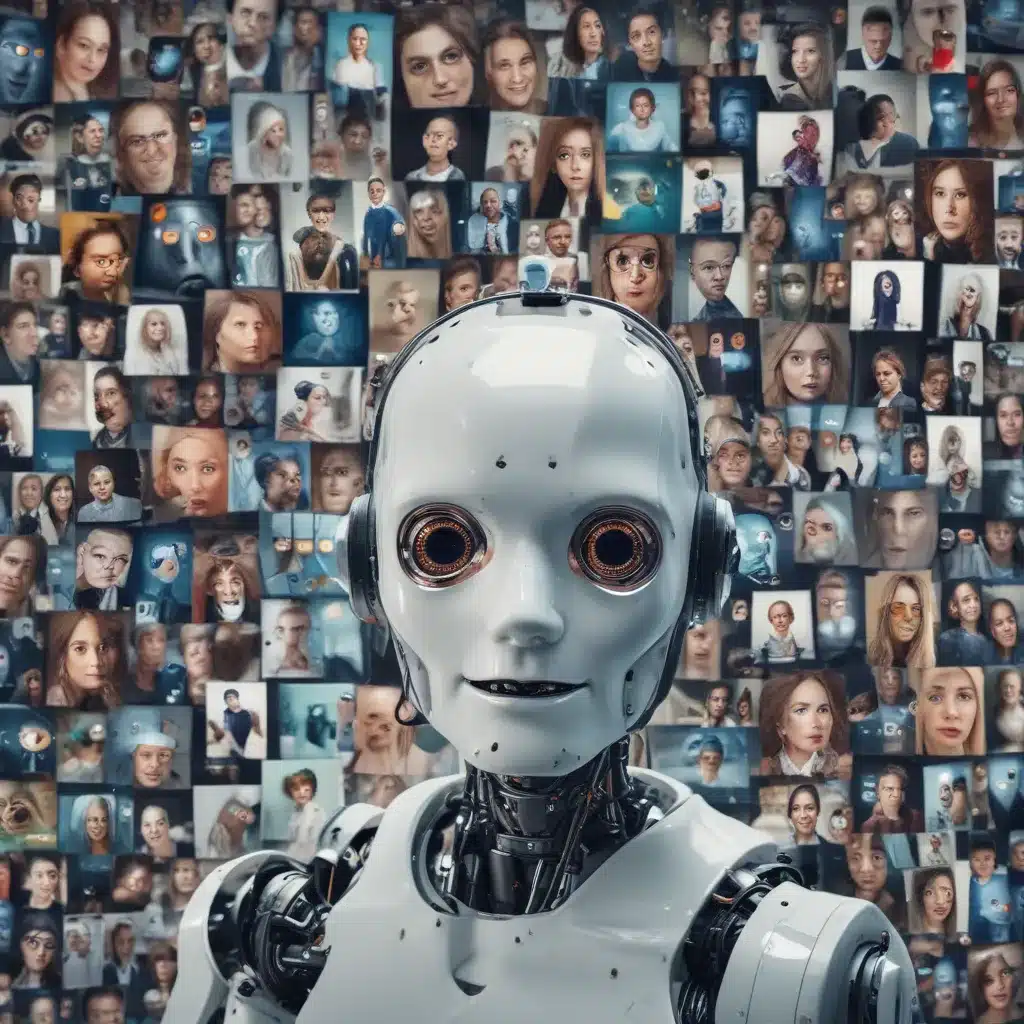

This suspicion was further fueled by the rapid advancements in artificial intelligence (AI) and the growing prevalence of AI-generated content. From news articles to social media posts, from product descriptions to creative works, the line between human-generated and machine-generated content had become increasingly blurred. The question that now loomed large in my mind was this: How can we, as discerning readers and content consumers, navigate this landscape and identify the telltale signs of AI-generated content?

Unmasking the Imposter: Defining AI-Generated Content

To begin, it’s essential to understand what we mean by “AI-generated content.” The term refers to any textual, visual, or multimedia content that has been produced, in whole or in part, by artificial intelligence systems. Powered by advanced language models and other generative machine learning algorithms, these systems can create content that is remarkably similar to human-generated content.

The rise of AI-powered content creation tools, such as GPT-3, DALL-E, and Midjourney, has further fueled this trend. These tools have democratized content creation, allowing even those with limited creative skills or technical expertise to generate high-quality text, images, and other forms of digital media. While this democratization has its benefits, it also raises concerns about the potential for deception and the erosion of trust in online information.

Navigating the Landscape: Identifying AI-Generated Content

As the use of AI-generated content becomes more widespread, the need to distinguish it from human-generated content becomes increasingly crucial. Fortunately, there are several techniques and strategies that can help us identify the telltale signs of AI-generated content. Here are some of the key indicators to watch for:

Linguistic Patterns

One of the most accessible ways to spot possible AI-generated content is to analyze the linguistic patterns and stylistic features of the text. AI-generated text, while often remarkably coherent and fluent, may exhibit certain idiosyncrasies that set it apart from human-generated content. These can include:

- Unusual word choices or phrase constructions

- Repetitive or formulaic sentence structures

- Lack of nuance, emotion, or personal touch

- Inconsistent or unnatural use of idioms, metaphors, and other figurative language

By closely examining the language used in a piece of content, you can often discern whether it bears the hallmarks of AI-generated text or the more organic, varied, and contextually appropriate language of a human writer.
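As a rough illustration of the "repetitive or formulaic sentence structures" signal, the following Python sketch measures how often sentences open with the same word and how varied the vocabulary is (the type-token ratio). The function name `repetition_signals` and the simple regex-based sentence splitter are assumptions made for this example. The numbers it returns are weak hints at best, not proof of machine authorship.

```python
import re
from collections import Counter


def repetition_signals(text: str) -> dict:
    """Return two crude repetition measures for a passage of text."""
    # Naive sentence split on ., ! or ? followed by whitespace (an assumption;
    # text with abbreviations would need a proper sentence tokenizer).
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())

    # Share of sentences that start with the most common opening word.
    openers = Counter(s.split()[0].lower() for s in sentences)
    top_opener_share = max(openers.values()) / len(sentences) if sentences else 0.0

    # Type-token ratio: distinct words divided by total words.
    type_token_ratio = len(set(words)) / len(words) if words else 0.0

    return {
        "sentences": len(sentences),
        "top_opener_share": top_opener_share,
        "type_token_ratio": type_token_ratio,
    }


# Made-up sample text, deliberately formulaic.
sample = "The tool is fast. The tool is simple. The tool is popular."
signals = repetition_signals(sample)
print(signals)
```

A high opener share together with a low type-token ratio suggests formulaic writing, but plenty of human prose scores the same way, so the result is only a prompt for closer reading.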

Factual Accuracy and Coherence

Another key indicator of AI-generated content is the accuracy and coherence of the information presented. While AI systems have become increasingly adept at gathering and synthesizing information, they may still struggle with maintaining consistent, fact-based narratives, especially when dealing with complex or nuanced topics.

Look for:

- Factual errors or inconsistencies

- Logical gaps or contradictions within the content

- Lack of depth or nuance in the treatment of a subject

- Overconfident or definitive statements on topics where uncertainty or ambiguity would be more appropriate

By critically examining the content for these types of issues, you can gain valuable insights into whether the information is the product of a human mind or an AI system.

Originality and Creativity

One of the hallmarks of human-generated content is its unique, creative, and original nature. AI-generated content, while increasingly sophisticated, may still struggle to match the depth, complexity, and originality of human-created works.

Pay attention to:

- Lack of original ideas or perspectives

- Reliance on common tropes, cliches, or stock phrases

- Repetition of content or recycling of existing information

- Absence of personal anecdotes, insights, or unique perspectives

By assessing the level of originality and creativity in the content, you can gain a better understanding of whether it was produced by a human or an AI system.
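The "stock phrases" and "repetition of content" cues can be approximated by counting word sequences that recur within a piece. The sketch below is one minimal way to do that in Python. The function name `repeated_phrases`, the three-word window, and the threshold of two occurrences are all illustrative assumptions, not established cut-offs.

```python
import re
from collections import Counter


def repeated_phrases(text: str, n: int = 3, min_count: int = 2) -> dict:
    """Map each n-word phrase that occurs at least min_count times to its count."""
    words = re.findall(r"[a-z']+", text.lower())
    # Slide a window of n words across the text.
    ngrams = (" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    counts = Counter(ngrams)
    return {phrase: c for phrase, c in counts.items() if c >= min_count}


# Made-up sample text that reuses a stock opening.
sample = (
    "In today's digital age, content is king. "
    "In today's digital age, trust is everything."
)
repeats = repeated_phrases(sample)
print(repeats)
```

Recurring phrases only show that wording is being reused. Whether that reuse reflects a machine, a template, or simply a writer's habit still takes human judgment.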

Contextual Cues and Metadata

Finally, contextual cues and metadata can also provide valuable clues about the source of the content. Look for:

- The presence or absence of author bylines, biographies, or other identifying information

- The publication or platform where the content appears

- The date and time of publication

- Any disclaimers or disclosures about the use of AI in content creation

By considering these contextual factors, you can gain a more holistic understanding of the content and its origins, further informing your assessment of whether it is human-generated or AI-generated.
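The four cues listed above can be kept as a simple checklist. The Python sketch below records them in a small data structure and reports which ones a page does not provide. The `ContentContext` class, its field names, and the sample values are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ContentContext:
    """Contextual cues a reader can usually observe (hypothetical structure)."""
    author_byline: Optional[str] = None
    platform: Optional[str] = None
    published_at: Optional[str] = None
    ai_disclosure: Optional[str] = None


def missing_cues(ctx: ContentContext) -> list:
    """List which of the four cues are absent from the page."""
    return [name for name, value in vars(ctx).items() if not value]


# Made-up example: a post with a platform and a date but no byline or disclosure.
ctx = ContentContext(platform="example-blog", published_at="2023-05-01")
gaps = missing_cues(ctx)
print(gaps)
```

A missing byline or disclosure does not show that a piece was machine-written. It only marks where the reader has less context to rely on.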

The Ethical Implications of AI-Generated Content

As the prevalence of AI-generated content continues to grow, it is essential to consider the ethical implications of this phenomenon. On one hand, the democratization of content creation enabled by AI tools can be seen as a positive development, empowering individuals and organizations to express themselves and share their ideas more easily. However, the potential for deception and the erosion of trust in online information pose significant challenges.

The Threat of Deception

One of the most concerning aspects of AI-generated content is its potential to deceive. If AI-generated content is presented as human-created, it can lead to the spread of misinformation, propaganda, and even deepfakes – realistic but fabricated media content. This can have far-reaching consequences, from the undermining of democratic discourse to the exploitation of vulnerable individuals.

As such, it is crucial that we, as content consumers, remain vigilant and develop the necessary skills to identify AI-generated content. Additionally, content creators and platforms have a responsibility to be transparent about the use of AI in content creation and to implement robust safeguards against deception.

The Erosion of Trust in Online Information

The proliferation of AI-generated content also poses a broader threat to the trust and credibility of online information. As the line between human-generated and machine-generated content becomes increasingly blurred, the public may start to view all online content with skepticism, making it more difficult for legitimate, truthful information to be disseminated and trusted.

This erosion of trust can have far-reaching implications, from the undermining of critical public discourse to the weakening of institutions and the democratic process. It is therefore essential that we, as a society, work to address this challenge and find ways to restore trust in online information.

The Path Forward: Collaboration and Transparency

Addressing the challenges posed by AI-generated content will require a multifaceted approach, one that involves collaboration between content creators, platforms, and the public.

Empowering Content Consumers

As content consumers, we have a crucial role to play in navigating this landscape. By developing the skills to identify AI-generated content and by demanding transparency from content creators and platforms, we can help to restore trust and preserve the integrity of online information.

This will require ongoing education and the development of critical thinking skills, as well as the active engagement of the public in shaping the policies and practices that govern the use of AI in content creation.

Responsible Content Creation and Platform Policies

Content creators and platforms also have a significant responsibility in addressing the challenges posed by AI-generated content. This may involve implementing clear policies around the use of AI in content creation, requiring explicit disclosure of AI-generated content, and developing robust safeguards against deception.

Additionally, content creators and platforms should invest in research and development to improve the detection of AI-generated content and to mitigate its misuse, so that the public can trust the information it consumes.

Collaboration and Transparency

Ultimately, addressing the challenges posed by AI-generated content will require a collaborative effort between content consumers, creators, and platforms. By working together to develop best practices, implement transparent policies, and empower the public, we can navigate this complex landscape and preserve the integrity of online information.

Conclusion: Embracing the Future, Preserving Authenticity

As the use of AI in content creation continues to evolve, it is clear that we are facing a critical juncture. On one hand, the democratization of content creation enabled by AI presents exciting opportunities for self-expression, creativity, and the dissemination of ideas. On the other hand, the potential for deception and the erosion of trust in online information pose significant challenges that must be addressed.

By developing the skills to identify AI-generated content, demanding transparency from content creators and platforms, and engaging in collaborative efforts to shape the policies and practices that govern the use of AI, we can navigate this landscape and preserve the authenticity and integrity of online information.

It is a delicate balance, but one that is essential if we are to maintain a thriving, vibrant, and trustworthy digital ecosystem – one that serves the interests of individuals, communities, and society as a whole. The path forward may not be easy, but the stakes are too high to ignore. Together, we must rise to the challenge and ensure that the digital realm remains a space where truth, authenticity, and human expression can thrive.