Neural Rendering: How AI is Revolutionizing 3D Graphics

Introduction

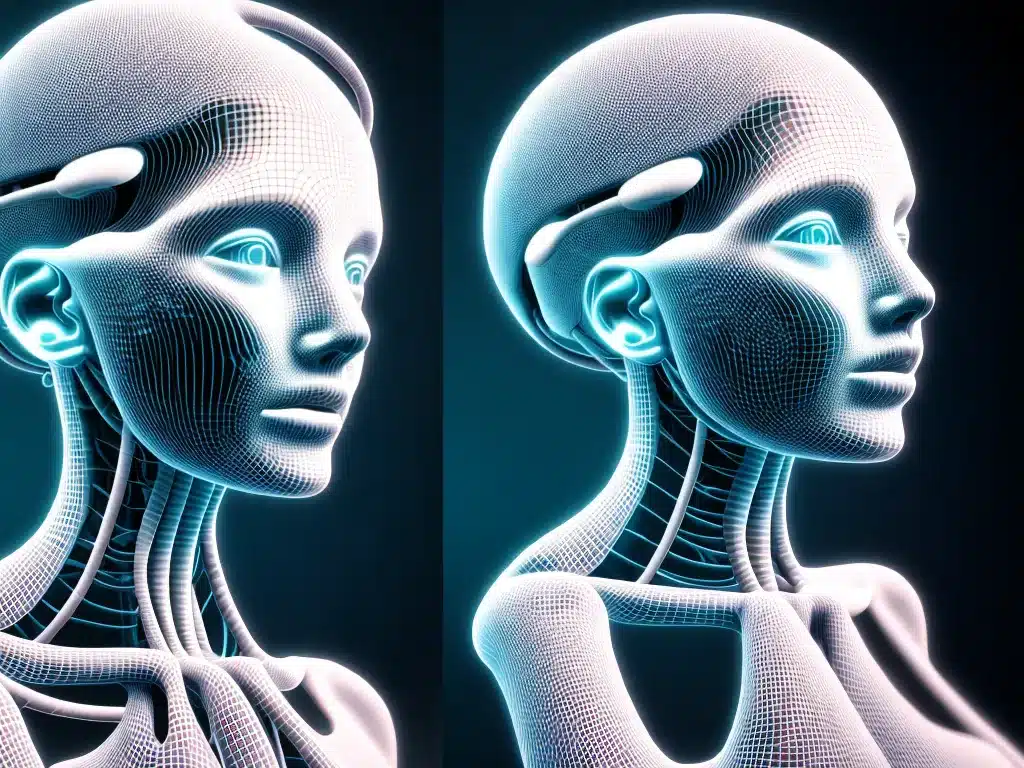

The field of computer graphics has undergone a revolution in recent years with the rise of neural rendering. Neural rendering utilizes artificial intelligence (AI), specifically deep learning, to generate highly realistic and detailed 3D graphics. This approach holds tremendous promise for creating immersive virtual worlds for gaming, film, and beyond. In this article, I will provide an in-depth look at how neural rendering works and the ways it is transforming computer graphics.

What is Neural Rendering?

Neural rendering refers to the use of deep neural networks to render 3D graphics and scenes. Traditional rendering relies on physically based simulation of how light interacts with materials and surfaces. Neural rendering instead learns to approximate some or all of these interactions from data, often in combination with classical graphics components.

Specifically, neural rendering typically trains deep neural networks, such as convolutional neural networks (CNNs), on large datasets of 3D shapes, scenes, and renderings. The networks learn to infer photorealistic images from limited input data like rough 3D shapes or semantic scene layouts. Once trained, a network can often produce high-quality renderings much faster than a full physical simulation of the same scene.
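To make "learning from data" concrete, here is a toy sketch that learns to turn a semantic layout into an image from a single training pair. It assumes a per-pixel linear layer (a 1x1 convolution) in place of a deep CNN, and the function names are hypothetical; real systems use far deeper networks with spatial context.

```python
import numpy as np

def train_label_to_rgb(labels, target, num_classes, steps=500, lr=0.5):
    """Fit a per-pixel linear layer (a 1x1 convolution) that maps one-hot
    semantic labels to RGB colors, by gradient descent on squared error."""
    x = np.eye(num_classes)[labels].reshape(-1, num_classes)  # (N, C) one-hot pixels
    y = target.reshape(-1, 3)                                 # (N, 3) target colors
    weights = np.zeros((num_classes, 3))
    for _ in range(steps):
        residual = x @ weights - y
        grad = 2.0 * x.T @ residual / len(x)  # gradient of squared error, averaged over pixels
        weights -= lr * grad
    return weights

def render_layout(labels, weights):
    """'Render' a label map: each pixel gets the learned color of its class."""
    return weights[labels]

# Toy training pair: top half labeled "sky" (0), bottom half "grass" (1),
# with a noisy target image standing in for a photograph or offline render.
rng = np.random.default_rng(0)
labels = np.zeros((8, 8), dtype=int)
labels[4:] = 1
palette = np.array([[0.5, 0.7, 1.0], [0.2, 0.6, 0.2]])
target = palette[labels] + rng.normal(0.0, 0.05, size=(8, 8, 3))

weights = train_label_to_rgb(labels, target, num_classes=2)
image = render_layout(labels, weights)
```

The learned weights converge to each class's mean color in the target image, so this model can only paint flat regions. Deeper networks that look at neighboring pixels are what let real systems produce texture and shading from the same kind of input.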

Some key capabilities enabled by neural rendering include:

- Novel view synthesis – Generating photorealistic views of a scene from new camera angles

- Scene completion – Plausibly filling in incomplete 3D data

- Relighting – Changing the lighting of a rendered scene

- Neural textures – Procedurally generating high-quality texture maps

Advantages over Traditional Rendering

Neural rendering offers several major advantages over traditional rendering pipelines:

- Speed – Some neural methods can render photorealistic images at interactive or real-time rates, whereas offline ray tracing can take minutes per frame. This could bring film-quality photorealism closer to gaming and VR.

- Quality – Neural renderings can be more detailed and realistic than traditional renders produced with a comparable time budget. Because the networks learn from real or offline-rendered images, they can reproduce global illumination effects that are expensive to simulate physically.

- Flexibility – Neural models can infer complete 3D scenes from limited input data like semantic labels or low-poly geometry. This enables quick iteration and conceptual prototyping.

In essence, neural rendering trades physically based simulation for data-driven learning. With enough training data, neural networks can closely approximate the appearance of the physical world, and in many settings they are more flexible and faster than simulation.

Neural Rendering Methods

Many different techniques fall under the umbrella of neural rendering. Here are some of the most impactful approaches:

Novel View Synthesis

Novel view synthesis refers to generating photorealistic views of a scene from arbitrary new camera angles. Neural networks are trained to infer lifelike renderings given the target camera parameters and one or more input views. This allows a viewer to move freely around a static scene.

Key methods: DeepBlending, Appearance Flow, PixelSynth
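One core operation behind flow-based view synthesis, in the spirit of Appearance Flow, is warping: for every pixel of the new view, look up a location in the input view and copy its color with bilinear interpolation. The sketch below assumes the flow field is already given; in the actual method a CNN predicts it from the input view and the desired viewpoint change, and here it is hand-set. Function names are hypothetical.

```python
import numpy as np

def bilinear_sample(image, xs, ys):
    """Sample image (H, W, C) at float pixel coordinates with bilinear
    interpolation; coordinates are clamped to the image border."""
    h, w = image.shape[:2]
    xs = np.clip(xs, 0, w - 1)
    ys = np.clip(ys, 0, h - 1)
    x0 = np.floor(xs).astype(int)
    y0 = np.floor(ys).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = (xs - x0)[..., None]  # horizontal interpolation weight
    wy = (ys - y0)[..., None]  # vertical interpolation weight
    top = (1 - wx) * image[y0, x0] + wx * image[y0, x1]
    bottom = (1 - wx) * image[y1, x0] + wx * image[y1, x1]
    return (1 - wy) * top + wy * bottom

def warp_with_flow(source, flow):
    """Synthesize a target view by copying pixels from `source`.
    flow[..., 0] and flow[..., 1] give, for each target pixel, the x and y
    offset (in pixels) of the source location to sample."""
    h, w = source.shape[:2]
    ys, xs = np.meshgrid(np.arange(h, dtype=float), np.arange(w, dtype=float), indexing="ij")
    return bilinear_sample(source, xs + flow[..., 0], ys + flow[..., 1])

# Example: a horizontal gradient image shifted by half a pixel.
source = np.tile(np.arange(5, dtype=float), (4, 1))[..., None]  # (4, 5, 1), value = x
flow = np.zeros((4, 5, 2))
flow[..., 0] = 0.5
target = warp_with_flow(source, flow)
```

Because warping can only copy pixels that are visible in the source, regions that become visible in the new view must be filled in by other means, which is one reason methods in this family combine warping with learned blending or inpainting.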

Scene Completion

Scene completion networks take incomplete 3D data like low-polygon meshes or partial point clouds and fill in plausible details. The completed 3D data can then be rendered from any viewpoint. This allows high-quality renderings from limited 3D input.

Key methods: GRNet, CraterNet, SparseConductorNet
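Point cloud completion networks are commonly trained and evaluated with the Chamfer distance between the predicted and ground-truth point sets. The brute-force sketch below uses one common convention (squared distances, averaged per direction, summed over both directions); other papers use unsquared distances or different normalization, so treat the exact form as an assumption.

```python
import numpy as np

def chamfer_distance(a, b):
    """Symmetric Chamfer distance between point sets a (N, 3) and b (M, 3):
    for each point, the squared distance to its nearest neighbor in the
    other set, averaged per direction and summed over both directions."""
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)  # (N, M) squared distances
    return d2.min(axis=1).mean() + d2.min(axis=0).mean()

# A "partial" scan missing one corner of a unit square, versus the full square.
complete = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [1, 1, 0]], dtype=float)
partial = complete[:3]
print(chamfer_distance(partial, complete))  # prints 0.25
```

Every partial point has an exact match in the complete set, so the whole penalty comes from the missing corner in the complete-to-partial direction. That second term is what pushes a completion network to fill in absent geometry. The O(NM) distance matrix is fine for small clouds; practical implementations use spatial data structures or GPU kernels.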

Neural Textures

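Rather than manually creating texture maps, neural textures use generative networks to synthesize complex, detailed texture maps procedurally. The networks are trained on datasets of real material images like wood, metal, or stone. Because some of these models represent texture as a continuous function rather than a fixed grid of pixels, they can be sampled at arbitrary resolution, although the fine detail they capture is still limited by what the network has learned.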

Key methods: TexSynth, GANtures, Texture Fields
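Coordinate-based approaches such as Texture Fields represent texture as a continuous function of position. The sketch below simplifies that idea to a 2D (u, v) coordinate and a tiny untrained MLP with random weights; the sine/cosine positional encoding and all names here are assumptions for illustration. It shows why output resolution becomes a query-time choice.

```python
import numpy as np

class TextureField:
    """A tiny, untrained coordinate-based texture: an MLP that maps a continuous
    (u, v) coordinate in [0, 1]^2 to an RGB color in [0, 1]."""

    def __init__(self, num_frequencies=4, hidden=32, seed=0):
        rng = np.random.default_rng(seed)
        self.freqs = 2.0 ** np.arange(num_frequencies) * np.pi
        in_dim = 2 + 4 * num_frequencies  # raw uv plus sin and cos per frequency per axis
        self.w1 = rng.normal(0.0, 1.0, (in_dim, hidden))
        self.b1 = np.zeros(hidden)
        self.w2 = rng.normal(0.0, 1.0 / np.sqrt(hidden), (hidden, 3))
        self.b2 = np.zeros(3)

    def encode(self, uv):
        """Sine/cosine positional encoding so the MLP can represent fine detail."""
        scaled = uv[..., None] * self.freqs  # (..., 2, F)
        batch = uv.shape[:-1]
        return np.concatenate(
            [uv, np.sin(scaled).reshape(*batch, -1), np.cos(scaled).reshape(*batch, -1)],
            axis=-1,
        )

    def __call__(self, uv):
        h = np.maximum(self.encode(uv) @ self.w1 + self.b1, 0.0)  # ReLU hidden layer
        return 1.0 / (1.0 + np.exp(-(h @ self.w2 + self.b2)))      # sigmoid -> RGB

def sample_texture(field, resolution):
    """Rasterize the field at any resolution by querying pixel-center coordinates."""
    centers = (np.arange(resolution) + 0.5) / resolution
    v, u = np.meshgrid(centers, centers, indexing="ij")
    return field(np.stack([u, v], axis=-1))

field = TextureField(seed=0)
low = sample_texture(field, 16)    # (16, 16, 3)
high = sample_texture(field, 512)  # (512, 512, 3): same function, finer sampling
```

Both images come from the same stored weights, so nothing is upsampled. However, a trained field only contains the detail it learned, so sampling more densely does not invent new structure.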

Relighting

Relighting techniques directly modify the lighting of rendered scenes using neural networks. This allows lighting to be edited interactively without re-running a full physically based render of the scene. It can support cinematic lighting workflows for gaming and virtual production.

Key methods: Deep Shading, GANpaint Studio, RealTimeEdit
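Screen-space methods such as Deep Shading start from a G-buffer, the per-pixel surface attributes (normals, albedo, and so on) left after rasterizing the scene, and learn a network that maps those attributes to the shaded image. As a reference point, the sketch below shows the kind of hand-written shading function such a network replaces: classic Lambertian shading from a distant light. It is not a neural model. Relighting reuses the same G-buffer with a new light, so the geometry never has to be re-rendered. Names are hypothetical.

```python
import numpy as np

def shade_lambertian(albedo, normals, light_dir, light_color):
    """Deferred Lambertian shading from G-buffer channels.
    albedo: (H, W, 3) surface color; normals: (H, W, 3) unit surface normals;
    light_dir: direction toward a distant light; light_color: RGB intensity."""
    l = np.asarray(light_dir, dtype=float)
    l = l / np.linalg.norm(l)
    n_dot_l = np.clip(normals @ l, 0.0, None)  # (H, W) cosine term; back-facing -> 0
    return albedo * n_dot_l[..., None] * np.asarray(light_color, dtype=float)

# A tiny G-buffer: a flat gray surface facing +z.
albedo = np.full((2, 2, 3), 0.5)
normals = np.zeros((2, 2, 3))
normals[..., 2] = 1.0

# Relight the same G-buffer under three different lights.
frontal = shade_lambertian(albedo, normals, [0, 0, 1], [1, 1, 1])
grazing = shade_lambertian(albedo, normals, [1, 0, 1], [1, 1, 1])
behind = shade_lambertian(albedo, normals, [0, 0, -1], [1, 1, 1])
```

A learned shader keeps the same interface (G-buffer in, image out) but can also approximate effects that this simple formula ignores, such as ambient occlusion or indirect light.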

Full Scene Synthesis

The most advanced neural rendering techniques train end-to-end networks to generate 3D content from scratch. These models learn a 3D scene representation and render it into the final image, so lighting effects present in the training images are baked into the learned appearance rather than simulated explicitly.

Key methods: GRASS, Pi-GAN, GRAF
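Generative radiance field methods such as GRAF and pi-GAN predict a density and a color for points in 3D space and turn them into pixels with volume rendering, as in NeRF. The sketch below shows only that compositing step for a single ray, under the usual emission-absorption model. The network that would predict the densities and colors is omitted, and the sample values are hand-set for illustration.

```python
import numpy as np

def composite_ray(sigmas, colors, t_vals):
    """Alpha-composite samples along one ray (emission-absorption model).
    sigmas: (S,) densities; colors: (S, 3) RGB; t_vals: (S,) increasing depths.
    Returns the pixel color and each sample's contribution weight."""
    deltas = np.diff(t_vals, append=1e10)    # segment lengths; last one extends far away
    alphas = 1.0 - np.exp(-sigmas * deltas)  # opacity of each segment
    # Transmittance: fraction of light that reaches sample i without being absorbed.
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alphas)[:-1]])
    weights = trans * alphas
    return weights @ colors, weights

# Empty space, then a dense green surface, then a blue surface hidden behind it.
t_vals = np.array([1.0, 2.0, 3.0])
sigmas = np.array([0.0, 100.0, 100.0])
colors = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
pixel, weights = composite_ray(sigmas, colors, t_vals)
```

The first dense sample absorbs essentially all of the ray, so the pixel comes out green and the blue surface behind it contributes nothing. Because every step is differentiable, image-space losses can train the network that produces `sigmas` and `colors`.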

Real-World Applications

Neural rendering is moving out of research and into production across many domains:

- Gaming – Real-time neural rendering can bring near-cinematic image quality to interactive experiences. NVIDIA’s DLSS, for example, uses a neural network to upscale lower-resolution frames, so games can render fewer pixels while keeping image quality high.

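- Virtual production – Neural rendering can quickly generate varied photorealistic environments from limited assets for virtual filmmaking. Shows like The Mandalorian popularized LED-wall virtual production driven by real-time game-engine rendering, a workflow that neural techniques could extend.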

- Concept art – Artists can quickly iterate on scene concepts without needing full asset production. Neural models can generate detailed environments from rough sketches.

- CGI and VFX – AI assistance for asset creation, animation, and rendering can improve efficiency. Tools like Runway ML aim to simplify integrating neural techniques into existing pipelines.

- Simulation – High-quality rendered simulations have applications in architecture, car design, and product visualization. Neural rendering can substantially cut the time and cost to create them.

- AR/VR – Neural techniques like view synthesis and relighting could make photorealistic rendering practical in real-time 3D experiences on limited hardware like phones and headsets.

The Future of Neural Rendering

Neural rendering is still an emerging field with ample room for improvement. Here are some promising directions for future research:

- Higher image fidelity – More complex network architectures, better training data, and improved loss functions will continue to enhance image quality.

- Larger scenes – Many current methods work best on small, bounded scenes. New approaches need to handle large-scale, detailed environments.

- Animation – Extending neural rendering to animated scenes is an open challenge. This likely requires new network architectures and training procedures.

- Interactive editing – More work is needed to enable intuitive user control over scene parameters like lighting, materials, and geometry.

- Multimodal inputs – Networks that can render based on various inputs like sketches, natural language descriptions, or CAD models could unlock new creative workflows.

Conclusion

Neural rendering is a rapidly evolving field that is making photorealistic, interactive 3D graphics possible. Deep learning has enabled big advances in the speed, quality, and flexibility of rendering. As research continues, neural rendering promises to become a crucial part of the computer graphics stack enabling new creative possibilities. The future looks bright for leveraging AI to generate compelling virtual worlds.