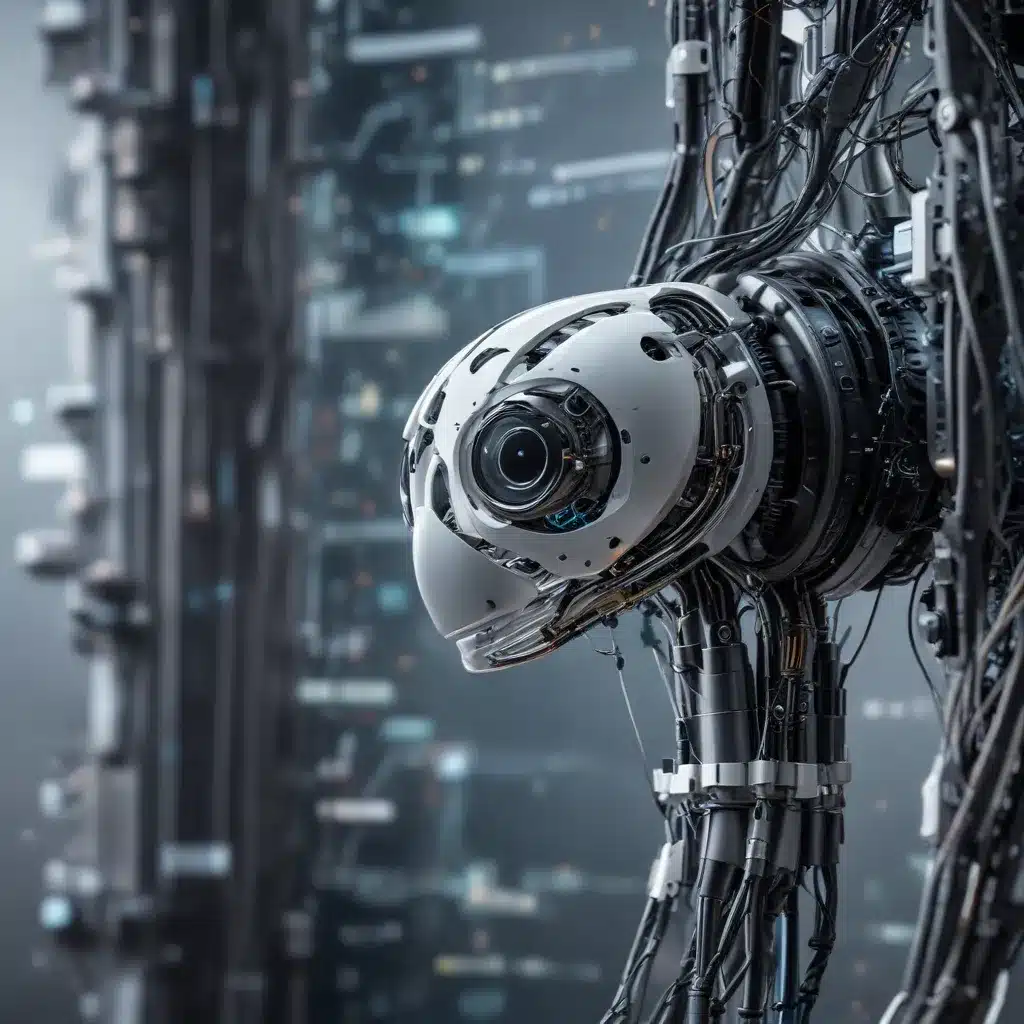

Embracing the Promise and Perils of Edge AI

The rapid rise of artificial intelligence (AI) has unlocked remarkable opportunities across industries, from supporting healthcare diagnoses to connecting people through social media and automating tasks to make work more efficient. However, these changes also raise profound ethical concerns. AI systems can embed biases, contribute to climate degradation, and threaten human rights, and these risks are already compounding existing inequalities and causing further harm to marginalized groups.

In no other field are ethical considerations more critical than in the realm of artificial intelligence. These general-purpose technologies are reshaping the way we work, interact, and live, ushering in a transformative era not seen since the deployment of the printing press six centuries ago. While AI technology brings major benefits in many areas, without the proper ethical guardrails, it risks reproducing real-world biases and discrimination, fueling divisions, and threatening fundamental human rights and freedoms.

UNESCO’s Landmark Recommendation on the Ethics of AI

To address these challenges, UNESCO has led the international effort to ensure that science and technology develop with strong ethical principles. In November 2021, UNESCO produced the first-ever global standard on AI ethics: the ‘Recommendation on the Ethics of Artificial Intelligence.’ This groundbreaking document applies to all 194 Member States of UNESCO and makes the protection of human rights and dignity its cornerstone.

The Recommendation interprets AI broadly as systems with the ability to process data in a way that resembles intelligent behavior. This definition is crucial, as the rapid pace of technological change would quickly render any fixed, narrow definition outdated, making future-proof policies infeasible.

The Recommendation establishes a comprehensive framework of ethical principles and policy action areas to guide the responsible development and deployment of AI. Key tenets include:

- Respect, Protection, and Promotion of Human Rights and Fundamental Freedoms: AI systems must not infringe on human rights and should respect individual privacy, human dignity, and the principles of justice and equality.
- Ensuring Safe, Secure, and Trustworthy AI: The use of AI systems must not go beyond what is necessary to achieve a legitimate aim. Risk assessment should be used to prevent harms and address vulnerabilities to attacks.
- Transparency and Explainability: The ethical deployment of AI systems depends on their transparency and explainability, balanced against other principles such as privacy, safety, and security.
- Accountability and Human Oversight: Member States must ensure that AI systems do not displace ultimate human responsibility and accountability.
- Environmental and Societal Wellbeing: AI technologies should be assessed for their impact on sustainability, including their contribution to the UN’s Sustainable Development Goals.
- Public Understanding and Inclusive Approaches: Efforts must be made to promote social justice, fairness, and non-discrimination through open, accessible education and civic engagement.

Implementing the Recommendation: UNESCO’s Practical Strategies

While the ethical principles outlined in the Recommendation are crucial, recent movements in AI ethics have emphasized the need to move beyond high-level principles and toward practical strategies for implementation. UNESCO has developed several tools and initiatives to help Member States translate the Recommendation into action:

1. Readiness Assessment Mechanism (RAM)

The RAM is designed to help assess whether Member States are prepared to implement the Recommendation effectively. It will help them gauge their level of preparedness and give UNESCO a basis for tailoring its capacity-building support.

2. Ethical Impact Assessment (EIA)

The EIA is a structured process that helps AI project teams, working with affected communities, identify and assess the impacts an AI system may have and determine which actions are needed to prevent harm.

3. Women4Ethical AI

This is a new collaborative platform that supports governments’ and companies’ efforts to ensure that women are equally represented in both the design and deployment of AI. The platform’s members will share research and contribute to a repository of good practices.

4. Business Council for Ethics of AI

This is a collaborative initiative between UNESCO and companies operating in Latin America that are involved in the development or use of AI. The Council serves as a platform for companies to come together, exchange experiences, and promote ethical practices within the AI industry.

Addressing Pervasive Biases and Discrimination in AI

The use of AI in domains such as judicial systems, culture, and autonomous vehicles raises significant ethical questions that require careful consideration. Gender bias in AI, for example, which originates in stereotypical representations deeply rooted in our societies, highlights the need for concerted efforts to ensure responsible and equitable AI development and deployment.

The Recommendation on the Ethics of AI provides a comprehensive framework to address these challenges, emphasizing the importance of transparency, accountability, and inclusive approaches. By promoting social justice, fairness, and non-discrimination, UNESCO aims to ensure that the benefits of AI are accessible to all, without exacerbating existing inequalities or causing unintended harm.

Navigating the Evolving Landscape of Edge AI Governance

As the adoption of intelligent edge devices continues to accelerate, the need for robust governance frameworks becomes increasingly critical. Edge AI refers to deploying AI capabilities at the edge of a network, close to where data is generated, rather than in a centralized cloud. This offers several advantages: faster response times, because data does not have to travel to a remote server and back; lower data transmission costs; and potentially stronger privacy and security, because raw data can remain on the device.
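To make the edge pattern more concrete, here is a minimal Python sketch, assuming a hypothetical device that collects temperature readings. The `THRESHOLD` value and the functions `classify_locally`, `summarize_on_device`, and `payload_size` are illustrative stand-ins, not part of any real edge framework; a production system would run a trained model, often on dedicated hardware. What the sketch shows is where the work happens: inference runs on the device, and only an aggregate summary would be sent upstream.

```python
import json

# Hypothetical decision threshold for a stand-in "model". A real edge
# deployment would run a trained model here; this only shows where
# inference happens.
THRESHOLD = 30.0


def classify_locally(reading: float) -> bool:
    """Run inference on the device itself (here, a simple threshold check)."""
    return reading > THRESHOLD


def summarize_on_device(readings: list[float]) -> dict:
    """Process raw readings at the edge and keep only an aggregate summary."""
    flagged = sum(1 for r in readings if classify_locally(r))
    return {"total": len(readings), "flagged": flagged}


def payload_size(obj) -> int:
    """Size in bytes of obj serialized as UTF-8 JSON, as if sent to the cloud."""
    return len(json.dumps(obj).encode("utf-8"))


if __name__ == "__main__":
    raw = [21.5, 33.0, 29.9, 41.2, 18.4, 30.1]
    summary = summarize_on_device(raw)
    print(summary)  # {'total': 6, 'flagged': 3}
    # Compare sending every raw reading with sending only the summary.
    print("cloud-centric payload:", payload_size(raw), "bytes")
    print("edge payload:", payload_size(summary), "bytes")
```

Because the raw readings never leave the device, a cloud service in this sketch sees only counts. The raw payload grows with every reading while the summary stays roughly constant in size, which is where the transmission savings come from.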

However, the rapid proliferation of edge AI also introduces new ethical and regulatory challenges that must be addressed to ensure responsible development and deployment. These challenges include:

- Data Governance and Privacy: Ensuring the proper collection, storage, and use of data at the edge, while respecting individual privacy and data rights.
- Safety and Security: Mitigating the potential risks of edge AI systems, including safety hazards, cybersecurity vulnerabilities, and the possibility of misuse or malicious exploitation.
- Transparency and Explainability: Enhancing the transparency and explainability of edge AI systems, allowing for informed oversight and accountability.
- Environmental Impact: Assessing and minimizing the environmental footprint of edge AI deployments, particularly in terms of energy consumption and resource utilization.
- Equity and Inclusiveness: Ensuring that the development and deployment of edge AI solutions do not exacerbate existing societal inequalities or create new forms of discrimination.

To effectively navigate this evolving landscape, a comprehensive governance framework informed by the principles of the UNESCO Recommendation on the Ethics of AI is essential. This framework should involve close collaboration between policymakers, regulators, industry stakeholders, and civil society to establish clear guidelines, standards, and best practices for the responsible development and deployment of edge AI technologies.

Empowering Users and Building Trust

A crucial aspect of edge AI governance is empowering users and building trust in these emerging technologies. This requires:

- Transparency and Explainability: Ensuring that edge AI systems are designed to be transparent and explainable, enabling users to understand how decisions are made and maintain a sense of control.
- User Consent and Control: Implementing robust mechanisms for user consent and control over the collection and use of their data, respecting individual privacy and autonomy.
- Ongoing Monitoring and Evaluation: Establishing mechanisms for the continuous monitoring and evaluation of edge AI systems, with the ability to identify and address any unintended consequences or emerging risks.
- Inclusive Design and Development: Engaging a diverse range of stakeholders, including underrepresented groups, in the design and development of edge AI solutions to ensure they meet the needs and reflect the perspectives of all users.
- Public Education and Awareness: Investing in public education and awareness campaigns to help users understand the capabilities and limitations of edge AI, as well as their rights and responsibilities in interacting with these technologies.

By prioritizing these elements, those who develop and deploy edge AI can foster user trust, empowerment, and meaningful participation in shaping these transformative technologies.
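User consent and control mechanisms can take many forms. One minimal Python sketch, assuming purpose-based, opt-in consent stored on the device, gates every data release on an explicit grant from the user. The `ConsentRecord` class, the `collect` function, and purpose names such as "diagnostics" are hypothetical, chosen for illustration rather than drawn from any specific framework or regulation.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ConsentRecord:
    """Hypothetical per-user consent state kept on the device.

    Purpose names such as "diagnostics" are illustrative; they are not
    taken from any standard or regulation.
    """
    user_id: str
    # Opt-in by default: no purposes are granted until the user says so.
    granted_purposes: set[str] = field(default_factory=set)

    def grant(self, purpose: str) -> None:
        """Record the user's opt-in for one specific purpose."""
        self.granted_purposes.add(purpose)

    def revoke(self, purpose: str) -> None:
        """Withdraw consent; discard() is a no-op if it was never granted."""
        self.granted_purposes.discard(purpose)

    def allows(self, purpose: str) -> bool:
        return purpose in self.granted_purposes


def collect(record: ConsentRecord, purpose: str, data: dict) -> Optional[dict]:
    """Release data for a purpose only if the user has opted in to it."""
    if not record.allows(purpose):
        return None  # No consent: nothing leaves the device for this purpose.
    return data


if __name__ == "__main__":
    record = ConsentRecord("user-42")
    record.grant("diagnostics")
    print(collect(record, "diagnostics", {"battery": 0.8}))        # {'battery': 0.8}
    print(collect(record, "model_improvement", {"battery": 0.8}))  # None
    record.revoke("diagnostics")
    print(collect(record, "diagnostics", {"battery": 0.8}))        # None
```

Consent here is granular and reversible: each purpose needs its own grant, the default is to release nothing, and a revocation takes effect on the very next call to `collect`.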

Collaborating for a Responsible Future

Addressing the ethical and regulatory challenges of edge AI requires a collaborative effort involving policymakers, industry leaders, researchers, and civil society organizations. By working together, we can develop and implement effective governance frameworks that uphold the principles of the UNESCO Recommendation on the Ethics of AI, ensuring the responsible development and deployment of intelligent edge devices.

Key steps in this collaborative process include:

- Developing Harmonized Standards and Guidelines: Establishing internationally recognized standards and guidelines for the design, deployment, and operation of edge AI systems, drawing on the expertise and perspectives of diverse stakeholders.
- Fostering Multi-Stakeholder Dialogues: Facilitating ongoing dialogues and knowledge-sharing platforms that bring together policymakers, industry leaders, researchers, and civil society representatives to collectively navigate the evolving landscape of edge AI governance.
- Strengthening Regulatory Frameworks: Updating existing regulations or developing new policies to address the unique challenges posed by edge AI, ensuring the protection of individual rights, public safety, and environmental sustainability.
- Promoting Responsible Innovation: Incentivizing and supporting the development of edge AI solutions that prioritize ethical considerations, user empowerment, and societal wellbeing, rather than maximizing profit or efficiency at the expense of these critical factors.
- Investing in Education and Capacity Building: Dedicating resources to educate the public, train professionals, and build the necessary skills and knowledge to navigate the complexities of edge AI governance effectively.

By embracing a collaborative and comprehensive approach to edge AI governance, we can harness the transformative potential of these technologies while mitigating the risks and ensuring that the benefits are equitably distributed. The IT Fix blog is committed to providing ongoing coverage and insights on this evolving landscape, empowering our readers to navigate the complexities of edge AI with confidence and responsibility.