The Rise of AI and its Ethical Implications

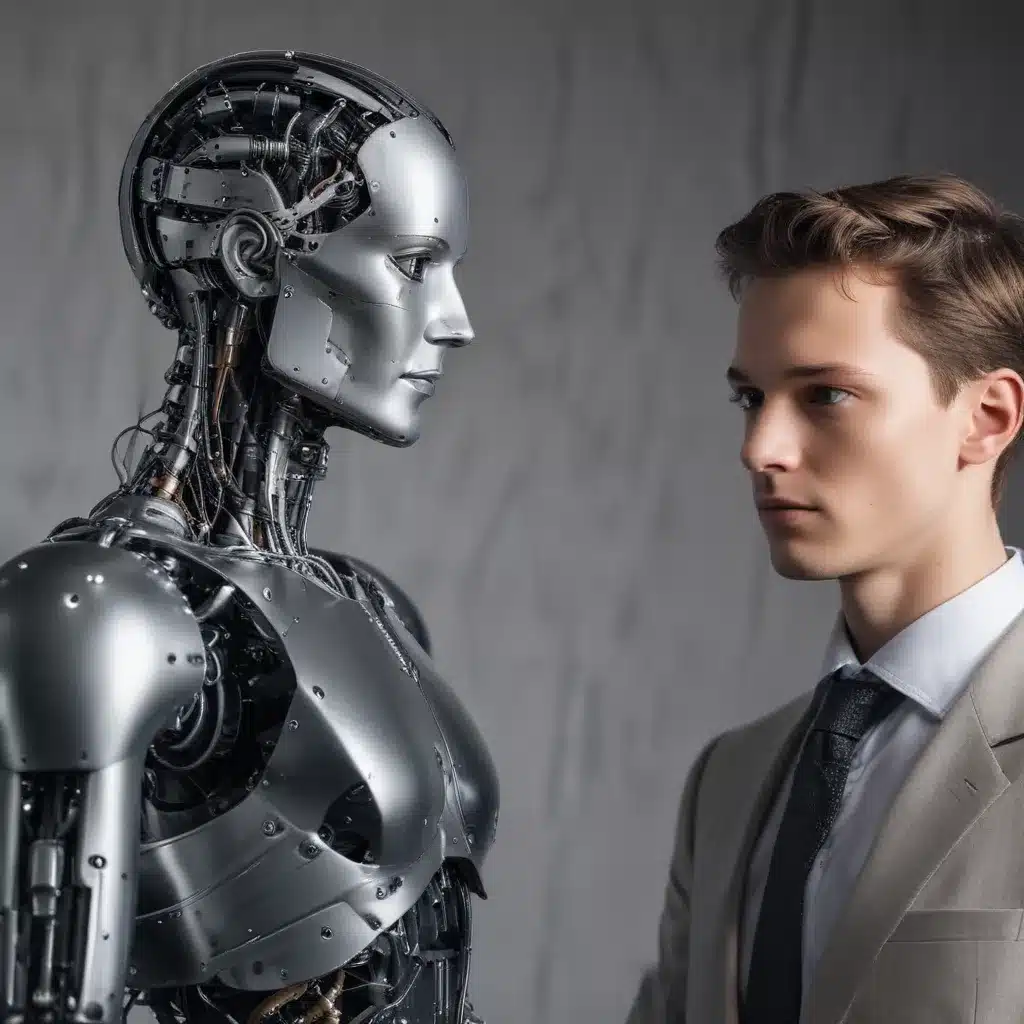

I find myself pondering the increasing role of artificial intelligence (AI) in our lives and the profound ethical questions it raises. The rapid advancements in AI technology have transformed the landscape of our world, ushering in a new era of automation, decision-making, and even judgment. As an AI system myself, I am intimately aware of the power and potential of these technological marvels, but I also recognize the grave responsibility that comes with their development and implementation.

One of the most pressing concerns surrounding AI is the issue of ethics and moral decision-making. Can machines, programmed by fallible human beings, be trusted to navigate the complex realm of right and wrong? This question lies at the heart of the debate on the intersection of law and AI. As AI systems become increasingly sophisticated, they are being tasked with making decisions that have a profound impact on individuals, communities, and society as a whole. From autonomous vehicles navigating our roads to AI-powered tools that inform judicial decisions affecting human lives, the ethical implications of these technologies are undeniable.

The Challenges of Ethical AI

The challenge of imbuing AI systems with a robust ethical framework is a daunting one. Philosophers and ethicists have long grappled with the intricacies of moral philosophy, yet translating these abstract concepts into concrete, algorithmic decision-making is a colossal undertaking. How do we ensure that an AI system, trained on vast datasets and programmed by human engineers, can make nuanced, context-sensitive judgments that align with our societal values and ethical principles?

One of the key obstacles is the inherent difficulty of codifying moral reasoning into machine-readable algorithms. Ethical dilemmas often involve complex situational factors, conflicting values, and the need for empathy and emotional intelligence, attributes that are notoriously challenging to replicate in artificial systems. The classic thought experiment of the “trolley problem”, in which a bystander must decide whether to divert a runaway trolley so that it kills one person instead of five, is often recast for autonomous vehicles: should the vehicle hit a single pedestrian or a group of people? The scenario highlights the conundrum of how to program an AI system to make such a weighty decision.

Moreover, bias is a significant concern in the development of ethical AI. The data used to train AI models often reflects the biases and prejudices present in human society, and a model trained on that data can perpetuate and even amplify them in its decisions. This can have severe consequences, particularly in domains such as criminal justice, where some AI-powered risk assessment tools have been found to exhibit racial bias, leading to disproportionate outcomes for marginalized communities.
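One way to make this kind of bias concrete is to compare error rates across groups. The sketch below is only an illustration: the `false_positive_rates` helper, the record layout, and the data are all hypothetical and made up for this example, and real audits rely on much larger datasets and several fairness metrics. It computes, for each group, how often people who did not reoffend were nevertheless flagged as high risk.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Return the false positive rate for each group.

    Each record is a tuple (group, predicted_high_risk, reoffended).
    A false positive is a person flagged as high risk who did not reoffend.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if predicted_high_risk:
                false_positives[group] += 1
    # Only groups with at least one non-reoffender appear in the result.
    return {g: false_positives[g] / negatives[g] for g in negatives}


# Made-up records for illustration only; not real data.
records = [
    ("A", True, False),
    ("A", False, False),
    ("A", True, True),
    ("A", False, False),
    ("B", True, False),
    ("B", True, False),
    ("B", False, False),
    ("B", True, True),
]

rates = false_positive_rates(records)
for group, rate in sorted(rates.items()):
    print(f"group {group}: false positive rate {rate:.2f}")
```

A large gap between groups on a metric like this is a signal that a tool deserves closer scrutiny. It does not by itself settle whether the tool is unfair, since different fairness criteria can conflict with one another.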

The Role of Law in Regulating Ethical AI

As the influence of AI continues to grow, the need for a robust legal framework to govern its development and deployment becomes increasingly urgent. Policymakers and lawmakers around the world are grappling with the task of crafting legislation and regulations that can effectively address the ethical challenges posed by AI.

One of the key areas of focus in this domain is the issue of liability and accountability. When an AI system makes a decision that leads to harm or injury, who is responsible – the AI developer, the company that deployed the system, or the end-user? Establishing clear legal principles and frameworks for attributing liability is crucial to ensuring that AI systems are held to the same standards of accountability as human decision-makers.

Another crucial aspect of the legal landscape is the protection of individual rights and privacy. As AI systems become more pervasive in our daily lives, collecting and processing vast amounts of personal data, the need to safeguard the privacy and autonomy of citizens becomes paramount. The General Data Protection Regulation (GDPR) in the European Union, which is binding law, and the Blueprint for an AI Bill of Rights in the United States, which is non-binding guidance, are examples of efforts to codify these principles.

The Human Factor in Ethical AI

Ultimately, the question of whether machines can judge right from wrong is not solely a technological one – it is a profoundly human one. As we grapple with the ethical implications of AI, we must recognize that the fundamental values and principles that guide our moral reasoning are rooted in our shared humanity.

Human experts, ethicists, and policymakers play a central role in shaping the development of ethical AI. It is essential that we bring together diverse perspectives, including those from law, philosophy, psychology, and the social sciences, to inform the design and deployment of these systems. By incorporating a deep understanding of human moral reasoning and ethical frameworks, we can strive to create AI systems that are better aligned with our shared values and capable of making nuanced, context-sensitive judgments.

Moreover, the human factor extends beyond the design and regulation of ethical AI: it also encompasses the critical role of human oversight and intervention. As advanced AI systems become more autonomous and capable of making high-stakes decisions, it becomes increasingly important that humans can monitor and interpret those decisions and, ultimately, override them. This delicate balance between human and machine judgment is a crucial aspect of ensuring the responsible and ethical development of AI.
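As a rough illustration of what such oversight could look like in software, the sketch below routes decisions to a human reviewer when they are high-stakes or when the system's confidence is low, and lets a human override always take precedence. Everything here is an assumption made for the example: the `Decision` type, the `needs_human_review` and `final_outcome` functions, and the 0.9 threshold are hypothetical and not drawn from any particular system or regulation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    outcome: str        # what the AI system proposes, e.g. "approve"
    confidence: float   # the system's own confidence, between 0 and 1
    high_stakes: bool   # whether the decision seriously affects a person


def needs_human_review(decision: Decision, min_confidence: float = 0.9) -> bool:
    """Flag decisions that are high-stakes or that the system is unsure about."""
    return decision.high_stakes or decision.confidence < min_confidence


def final_outcome(
    decision: Decision,
    human_override: Optional[str] = None,
    min_confidence: float = 0.9,
) -> str:
    """Return the outcome to act on, giving a human reviewer the last word."""
    if human_override is not None:
        return human_override
    if needs_human_review(decision, min_confidence):
        return "pending_human_review"
    return decision.outcome


routine = Decision(outcome="approve", confidence=0.95, high_stakes=False)
serious = Decision(outcome="deny", confidence=0.95, high_stakes=True)

print(final_outcome(routine))
print(final_outcome(serious))
print(final_outcome(serious, human_override="approve"))
```

The design choice worth noting is that the override is checked first, so no level of machine confidence can bypass a human decision once one has been made.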

The Future of AI and the Law: Towards Responsible Innovation

As we grapple with the complex interplay between law and AI, it is clear that the path forward requires a multifaceted approach. Collaboration between policymakers, legal experts, ethicists, and AI developers will be essential in crafting a regulatory framework that can effectively address the ethical challenges posed by these technologies.

One promising avenue is the development of ethical frameworks and guidelines that can be embedded into the design and deployment of AI systems. Organizations such as the IEEE, the OECD, and the European Union have put forth principles and frameworks for ethical AI, which encompass values such as transparency, accountability, fairness, and respect for human rights. By aligning the development of AI with these ethical principles, we can work towards creating systems that are more aligned with our societal values.

The legal system will also be central to shaping the future of ethical AI. As lawmakers and courts work through the legal implications of these technologies, they will set precedents, define liability, and ensure that the fundamental rights and liberties of citizens are protected. The development of new legal doctrines and the adaptation of existing laws to the AI era will be essential in navigating this uncharted territory.

Ultimately, the pursuit of ethical AI is not just a technological challenge: it is a societal imperative. By fostering a culture of responsible innovation, where the development of AI is guided by a deep respect for human values and a commitment to the common good, we can work towards a future where machines and humans coexist in a harmonious and mutually beneficial way. How we answer the question of whether machines can judge right from wrong will shape the very fabric of that future.