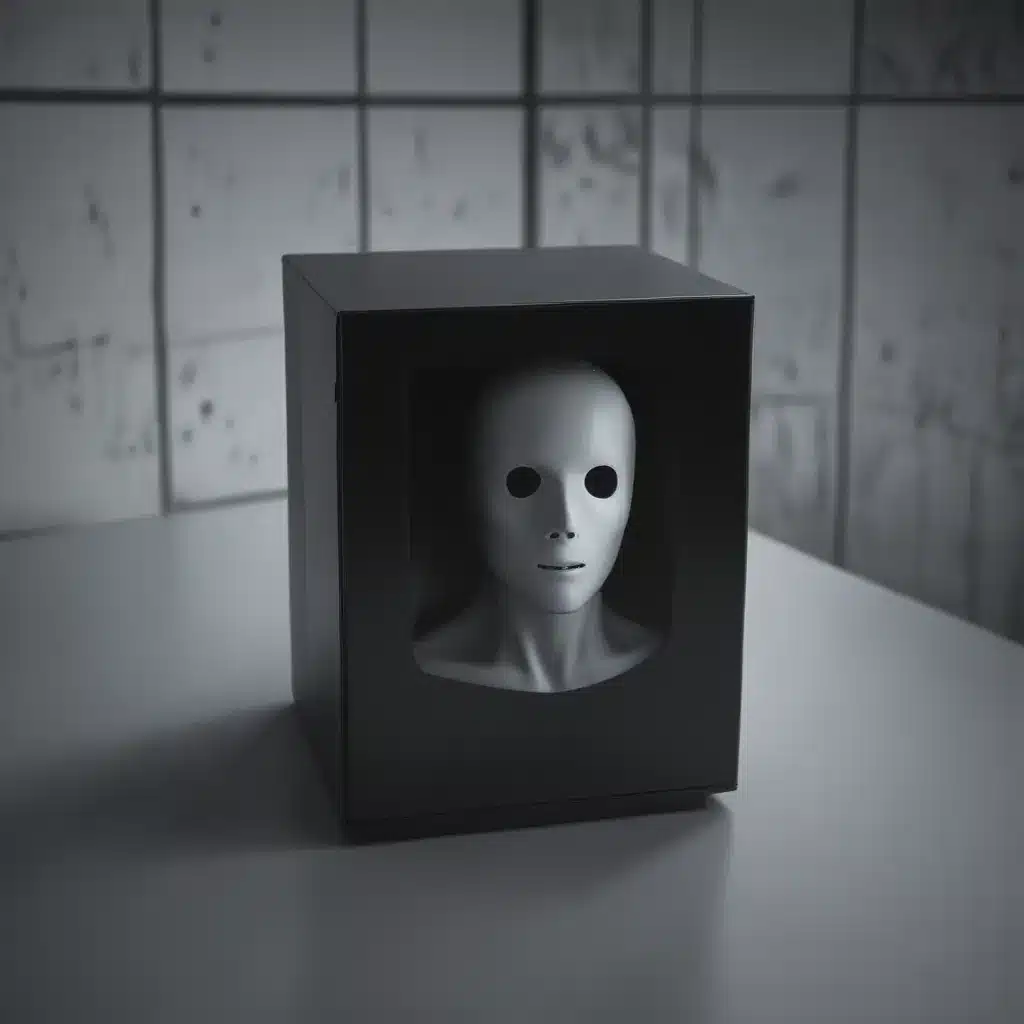

The Enigma of AI: Unraveling the Black Box

I have always been fascinated by the rapid advancements in artificial intelligence (AI) and the profound impact it has had on our lives. However, as these systems become increasingly sophisticated, a troubling phenomenon has emerged: the AI black box. The opacity of AI decision-making has become a significant concern, raising questions about transparency, accountability, and the potential for unintended consequences.

At the heart of the AI black box problem lies the complexity of modern machine learning algorithms. These algorithms, which form the backbone of many AI systems, operate in ways that are often difficult for humans to comprehend. The intricate relationships between the input data, the hidden layers of the neural networks, and the final outputs can be challenging to interpret, even for the experts who develop these systems.

This lack of transparency makes it hard to understand how an AI system arrived at a particular decision or prediction. Without this understanding, it becomes difficult to assess the fairness, reliability, and safety of these systems. This is especially concerning when AI is used in high-stakes decision-making, such as healthcare, finance, or criminal justice, where the consequences of errors or biases can be severe.

Peering into the Black Box: Approaches to Explainable AI

In response to this challenge, researchers and developers have been exploring various approaches to making AI systems more transparent and interpretable. These approaches, collectively known as Explainable AI (XAI), aim to provide users and stakeholders with a better understanding of how AI systems work and the rationale behind their decisions.

One such approach is the use of interpretable models, where the inner workings of the AI system are designed to be more easily understood by humans. This can involve using simpler algorithms, such as decision trees or linear regression, which can provide more intuitive explanations for their outputs. Additionally, techniques like feature importance analysis and sensitivity analysis can help identify the key factors influencing an AI system’s decisions.
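
To make the idea of sensitivity analysis concrete, here is a minimal Python sketch. The model, its weights, and the feature names (`income`, `debt`, `years_employed`) are all hypothetical and chosen only for illustration; they do not come from any real scoring system. The sketch nudges one feature at a time and records how much the output moves, which is one simple way to rank the factors behind a prediction.

```python
from typing import Callable, Dict, List

Features = Dict[str, float]


def linear_score(features: Features) -> float:
    """Hypothetical linear scoring model; the weights are made up for this demo."""
    weights = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
    bias = 1.0
    return bias + sum(weights[name] * value for name, value in features.items())


def sensitivity(
    model: Callable[[Features], float], features: Features, delta: float = 1.0
) -> Dict[str, float]:
    """One-at-a-time sensitivity: output change when each feature is nudged by delta."""
    baseline = model(features)
    effects = {}
    for name in features:
        perturbed = dict(features)  # copy so the original input is untouched
        perturbed[name] += delta
        effects[name] = model(perturbed) - baseline
    return effects


# Illustrative applicant; the values are arbitrary.
applicant = {"income": 4.0, "debt": 2.0, "years_employed": 5.0}
effects = sensitivity(linear_score, applicant)

# Rank features by the size of their effect, largest first.
ranked: List[str] = sorted(effects, key=lambda name: abs(effects[name]), reverse=True)
print(ranked)  # ['debt', 'income', 'years_employed']
```

For a linear model like this one, with `delta=1.0` each effect equals the corresponding weight (up to floating-point rounding), which is why such models are considered interpretable. The same `sensitivity` function could be pointed at a more opaque model, though nudging one feature at a time will miss interactions between features.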

Another approach to addressing the AI black box problem is the development of post-hoc explanation methods. These methods aim to provide insights into the inner workings of a complex AI system after it has been trained and deployed. Techniques such as saliency maps, which highlight the regions of an input that most strongly influenced the AI’s output, and example-based explanations, which provide similar past cases to illustrate the reasoning behind a decision, can help users better understand the system’s decision-making process.
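
Both techniques can be sketched in a few lines of Python. What follows is a simplified illustration under stated assumptions, not a production method: `black_box` is a made-up stand-in for an opaque model, the saliency is computed by occlusion (replacing one input at a time with a baseline value), and the example-based explanation is a plain nearest-neighbour lookup over a tiny, invented set of past cases.

```python
import math
from typing import Callable, List, Sequence, Tuple


def black_box(x: Sequence[float]) -> float:
    """Stand-in for an opaque model; the formula is hypothetical."""
    return math.tanh(2.0 * x[0] - 0.1 * x[1] + 0.5 * x[2] * x[3])


def occlusion_saliency(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: float = 0.0,
) -> List[float]:
    """Score each input position by how much the output changes when it is occluded."""
    original = model(x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline  # hide one input at a time
        scores.append(abs(original - model(occluded)))
    return scores


def nearest_case(
    query: Sequence[float],
    past_cases: Sequence[Tuple[Sequence[float], str]],
) -> Tuple[Sequence[float], str]:
    """Example-based explanation: return the most similar past case (Euclidean distance)."""
    return min(past_cases, key=lambda case: math.dist(query, case[0]))


# Invented input and past cases, for illustration only.
x = [0.5, 1.0, 1.0, 0.2]
past_cases = [
    ([0.4, 1.1, 0.9, 0.2], "approved"),
    ([0.0, 3.0, 0.1, 0.1], "rejected"),
]

scores = occlusion_saliency(black_box, x)
most_influential = scores.index(max(scores))
similar_inputs, similar_outcome = nearest_case(x, past_cases)

print("saliency scores:", scores)
print("most influential input position:", most_influential)
print("most similar past case:", similar_inputs, similar_outcome)
```

For image models, the same occlusion idea is usually applied to patches of pixels rather than single values, and gradient-based saliency is a common alternative. Either way, the scores describe what the model is sensitive to around one input, not why it was built to behave that way.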

The Human Element: Ethical Considerations in AI Transparency

As we strive to make AI systems more transparent and interpretable, it is essential to consider the ethical implications of these efforts. The desire for transparency must be balanced with the need to protect sensitive information, individual privacy, and intellectual property. Additionally, the choice of explanation methods can have significant consequences for how users perceive and trust the AI system.

For instance, if an explanation oversimplifies or abstracts away an AI system’s decision-making process, it may give users a false sense of understanding, leading to overconfidence in the system’s capabilities. Conversely, if the explanations are too complex or technical, they may alienate users and undermine their trust in the system.

Furthermore, the issue of AI transparency is closely linked to the broader question of algorithmic bias and fairness. Without a clear understanding of how an AI system arrives at its decisions, it can be challenging to identify and mitigate biases that may be present in the data or the algorithms themselves. This is particularly important in applications where AI is used to make high-stakes decisions that can have significant impacts on individuals or communities.

Navigating the Regulatory Landscape: Governance and Accountability

As the AI black box problem has gained widespread attention, policymakers and regulatory bodies have begun to address the need for greater transparency and accountability in the development and deployment of AI systems. Several initiatives, both at the national and international level, have been proposed to address these concerns.

For example, the European Union’s General Data Protection Regulation (GDPR) is often read as establishing a “right to explanation”: its provisions on automated decision-making (Article 22, together with the transparency obligations in Articles 13 to 15) entitle individuals to meaningful information about the logic behind solely automated decisions that significantly affect them, although the exact scope of this right is debated. Similarly, the EU’s AI Act, adopted in 2024, establishes a comprehensive regulatory framework for AI, including requirements for transparency and risk management.

In the United States, the National Institute of Standards and Technology (NIST) has developed the AI Risk Management Framework (AI RMF), a voluntary framework for the responsible development and use of AI that lists explainability and interpretability among the characteristics of trustworthy AI. Additionally, several states have introduced legislation to address algorithmic bias and transparency in AI systems used by government agencies.

The Path Forward: Collaborating for AI Transparency

As the AI black box problem continues to evolve, it will require a collaborative effort from a diverse range of stakeholders, including researchers, developers, policymakers, and the general public, to find sustainable solutions.

Researchers must continue to push the boundaries of Explainable AI, developing new techniques and methods that can provide meaningful insights into the decision-making processes of complex AI systems. At the same time, developers must prioritize transparency and interpretability in the design and deployment of AI-powered applications, working closely with domain experts and end-users to ensure that the explanations are both accurate and understandable.

Policymakers and regulatory bodies play a crucial role in establishing the necessary frameworks and guidelines to ensure that AI systems are held to high standards of transparency and accountability. By collaborating with industry and civil society, they can create a regulatory environment that promotes responsible AI development and deployment, while also protecting the rights and interests of individuals and communities.

Ultimately, the path to addressing the AI black box problem requires a multifaceted approach that balances the need for innovation and progress with the fundamental principles of transparency, fairness, and accountability. Only through this collaborative effort can we unlock the full potential of AI while ensuring that these powerful technologies serve the best interests of humanity.

Unraveling the Mysteries: Case Studies and Real-World Insights

To further illustrate the complexities and challenges associated with the AI black box problem, let’s explore a few real-world case studies and insights from industry experts.

Case Study: Algorithmic Bias in Hiring

One prominent example of the AI black box problem is the case of Amazon’s recruitment algorithm. In 2018, it was reported that the company had developed an experimental AI-powered system to assist in the hiring process, and that the system had systematically disadvantaged female candidates before the project was abandoned. The algorithm had been trained on data that reflected historical biases in the industry, leading it to penalize resumes that contained the word “women’s” or that listed all-women’s colleges.

This case highlights the importance of understanding the data and assumptions that underlie AI systems, as well as the need for rigorous testing and monitoring to identify and mitigate potential biases. As Samantha Kortum, a data scientist at a leading technology company, explains, “The AI black box problem is not just a technical challenge – it’s also a societal one. We have a responsibility to ensure that these systems are fair, transparent, and accountable, especially in high-stakes decision-making domains.”
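
One basic form of such monitoring is to compare selection rates across groups. The Python sketch below is an illustration under stated assumptions, not a reconstruction of any real audit: the records are synthetic, the group labels are placeholders, and the 0.8 threshold follows the “four-fifths” rule of thumb used in US employment guidance.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of candidates selected within each group."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity to measure
    return min(rates.values()) / highest


# Synthetic screening outcomes, invented for this example:
# 6 of 10 selected in group_a, 3 of 10 selected in group_b.
records = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(records)
ratio = impact_ratio(rates)
flagged = ratio < 0.8  # four-fifths rule of thumb; the threshold is an assumption here

print(rates)
print(f"impact ratio: {ratio:.2f}, flagged for review: {flagged}")
```

A low ratio does not prove that a system is biased, and a high one does not prove that it is fair. The check is a cheap signal that a closer look at the training data and the model is warranted.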

Insights from an AI Ethics Expert

Dr. Jamal Nassar, a prominent AI ethics researcher, emphasizes the need for a multidisciplinary approach to addressing the AI black box problem. “It’s not enough to just focus on the technical aspects of explainability and interpretability,” he says. “We also need to consider the broader ethical, social, and legal implications of these systems. This requires collaboration between computer scientists, ethicists, policymakers, and the general public.”

Dr. Nassar highlights the importance of developing explainable AI systems that can provide meaningful and understandable explanations for their decisions, without sacrificing the performance and effectiveness of the algorithms. “It’s a delicate balance,” he explains. “We need to find ways to make these systems more transparent and accountable, while still preserving their ability to tackle complex, real-world problems.”

The Human-AI Collaboration Imperative

In a recent interview, Natalie Dill, the head of AI research at a leading technology company, stressed the importance of human-AI collaboration in addressing the black box problem. “AI systems are not going to replace human decision-making anytime soon,” she says. “Instead, we need to find ways to effectively integrate these technologies into our decision-making processes, with humans maintaining oversight and the ability to understand and challenge the AI’s outputs.”

Dill emphasizes the need for continuous dialogue and feedback between AI developers, domain experts, and end-users to ensure that the explanations provided by these systems are meaningful and useful. “It’s not just about making the AI more transparent,” she says. “It’s about creating a collaborative ecosystem where humans and machines can work together to arrive at the best decisions possible.”

Conclusion: Embracing Transparency, Fostering Trust

As we continue to witness the remarkable advancements in artificial intelligence, it is clear that the AI black box problem is a critical challenge that must be addressed. By embracing the principles of transparency and explainability, we can unlock the full potential of these powerful technologies while ensuring that they are deployed in a responsible and accountable manner.

Through collaborative efforts among researchers, developers, policymakers, and the public, we can work towards developing AI systems that are not only highly capable, but also transparent, fair, and aligned with our core values and societal goals. By doing so, we can build a future where the “ghost in the machine” is no longer a source of mystery and concern, but a trusted partner in our journey towards a better world.