Introduction

As GPUs grow more powerful and more widely used to accelerate compute workloads such as artificial intelligence and scientific computing, there is a growing need to benchmark and compare GPU hardware platforms. The two dominant vendors of discrete GPUs are Nvidia and AMD. In this article, I take an in-depth look at benchmarking and comparing Nvidia and AMD GPUs for compute workloads.

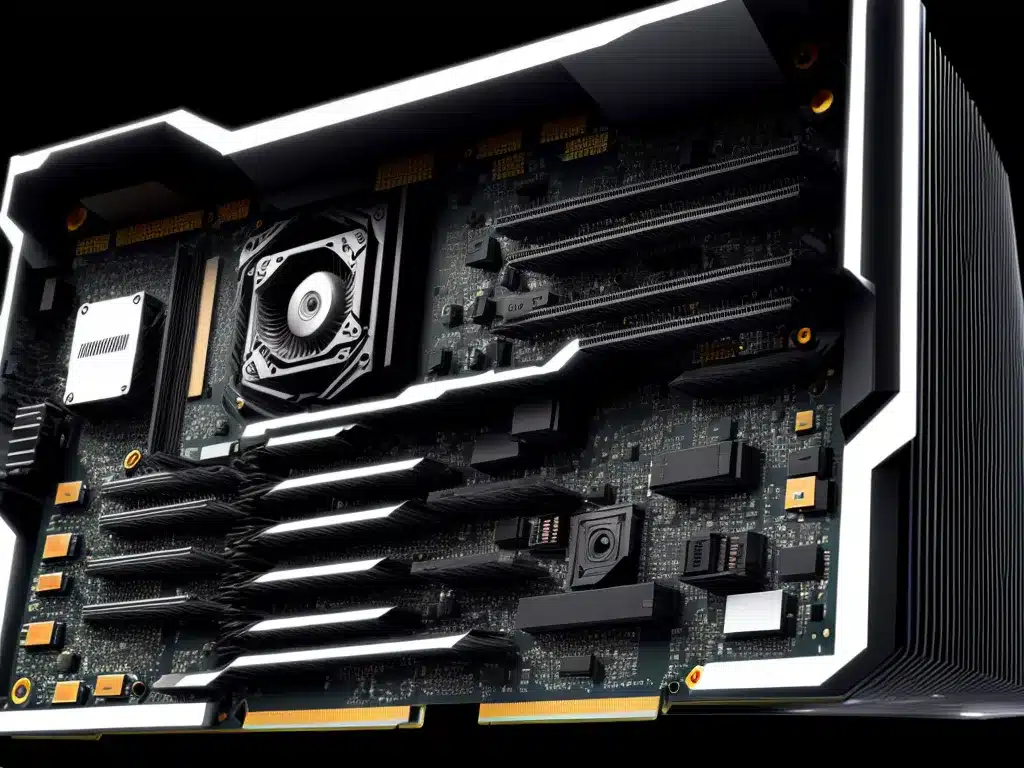

Compute-Focused GPU Architectures

Both Nvidia and AMD have developed GPU architectures that are optimized for compute workloads beyond graphics.

Nvidia CUDA Cores and Tensor Cores

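Nvidia data center GPUs such as the A100 and H100 contain thousands of CUDA cores for general-purpose parallel computing. Their Tensor Cores add dedicated hardware for the matrix multiply-accumulate operations at the heart of deep learning, running them at reduced precisions such as FP16 and BF16 (and FP8 on the H100). Nvidia has invested heavily in these compute capabilities.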

AMD CDNA and Matrix Cores

AMD splits its GPU designs into two architectures: CDNA, which targets compute, and RDNA, which targets graphics. Instinct accelerators such as the MI200 series, built on CDNA 2, include Matrix Cores that accelerate AI workloads. With these products, AMD is challenging Nvidia in the compute space.
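Tensor Cores and Matrix Cores are both built around the same basic operation: a small-tile matrix multiply-accumulate, D = A·B + C, with low-precision inputs and a wider accumulator. The sketch below emulates only the numerics of that operation, assuming FP16 inputs and using Python's 64-bit floats in place of the hardware's FP32 accumulator. The names `to_fp16` and `mma_tile` are illustrative, not a vendor API, and the sketch says nothing about how the hardware schedules the work.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a float to the nearest IEEE 754 half-precision (binary16) value."""
    # struct's "e" format packs binary16; unpacking widens it back to a Python float.
    return struct.unpack("e", struct.pack("e", x))[0]


def mma_tile(a, b, c):
    """Return D = A @ B + C for small square tiles (lists of lists).

    A and B are rounded to FP16, mimicking low-precision matrix inputs.
    Accumulation uses Python floats (FP64 here), standing in for the
    wider FP32 accumulator used by the hardware.
    """
    n = len(a)
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = c[i][j]  # start from the accumulator input C
            for k in range(n):
                # One multiply-accumulate step; the hardware performs many in parallel.
                acc += a16[i][k] * b16[k][j]
            d[i][j] = acc
    return d


# Example: 0.1 is not exactly representable in FP16, so the result shows rounding.
a = [[0.1, 0.2], [0.3, 0.4]]
identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]
print(mma_tile(a, identity, zeros))
```

Small integers are exact in FP16, so a product like [[1, 2], [3, 4]] · [[5, 6], [7, 8]] comes out exact, while values such as 0.1 pick up visible rounding error.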

The specialized cores in both vendors' GPUs are built to run parallel workloads such as scientific computing and AI training and inference efficiently. Specifications only go so far, however: real-world performance has to be measured with benchmarks.
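A vendor's headline FLOPS figure is a theoretical peak: the number of execution units, times the floating-point operations each completes per clock, times the clock rate. A benchmark then shows what fraction of that peak a real workload reaches. Below is a minimal sketch of that arithmetic. Every spec value in it is a hypothetical placeholder, not a figure for any real Nvidia or AMD GPU.

```python
def peak_tflops(units: int, flops_per_clock_per_unit: int, clock_ghz: float) -> float:
    """Theoretical peak in TFLOPS.

    A fused multiply-add (FMA) counts as 2 FLOPs, so flops_per_clock_per_unit
    is usually 2 x the number of FMA lanes per unit.
    """
    # units * FLOPs/clock * 1e9 clocks/s = GFLOPS; divide by 1000 for TFLOPS.
    return units * flops_per_clock_per_unit * clock_ghz / 1000.0


def efficiency(measured_tflops: float, peak: float) -> float:
    """Fraction of theoretical peak that a benchmark actually achieved."""
    return measured_tflops / peak


# Hypothetical GPU: 100 units, 64 FMA lanes each (128 FLOPs/clock), 1.5 GHz.
peak = peak_tflops(100, 128, 1.5)
print(f"peak: {peak:.1f} TFLOPS")
print(f"efficiency at a hypothetical 14.4 TFLOPS: {efficiency(14.4, peak):.0%}")
```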

Representative Compute Benchmarks

To compare Nvidia and AMD GPU compute performance, I will focus on a few key benchmarks.

HPCG

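The High Performance Conjugate Gradients (HPCG) benchmark solves a large sparse linear system with a preconditioned conjugate gradient method, modeling the data-access patterns of engineering and physics simulations. Because its sparse operations perform few calculations per byte loaded, it stresses memory bandwidth and latency rather than peak arithmetic throughput.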
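The conjugate gradient iteration at HPCG's core fits in a few lines. This is a simplified, unpreconditioned sketch, assuming a 1D Poisson matrix (2 on the diagonal, -1 on the off-diagonals) in place of HPCG's 3D 27-point stencil and multigrid preconditioner; the function names are illustrative. Each iteration does one stencil (sparse matrix-vector) product and a few vector updates, each touching every element once for only a handful of arithmetic operations. That low ratio of arithmetic to memory traffic is why HPCG is bandwidth-bound on a GPU.

```python
import math


def poisson_1d_matvec(x):
    """y = A x for the tridiagonal matrix with 2 on the diagonal and -1 beside it.

    The matrix is applied as a stencil and never stored densely, as in HPCG.
    """
    n = len(x)
    y = [0.0] * n
    for i in range(n):
        y[i] = 2.0 * x[i]
        if i > 0:
            y[i] -= x[i - 1]
        if i < n - 1:
            y[i] -= x[i + 1]
    return y


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def conjugate_gradient(matvec, b, tol=1e-10, max_iter=1000):
    """Unpreconditioned CG for a symmetric positive-definite operator."""
    x = [0.0] * len(b)
    r = list(b)  # residual b - A x, with x starting at zero
    p = list(r)  # first search direction
    rs_old = dot(r, r)
    for it in range(max_iter):
        if math.sqrt(rs_old) < tol:
            return x, it
        ap = matvec(p)  # the sparse, memory-bound step
        alpha = rs_old / dot(p, ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x, max_iter


n = 50
b = [1.0] * n
x, iterations = conjugate_gradient(poisson_1d_matvec, b)
residual = max(abs(ri - bi) for ri, bi in zip(poisson_1d_matvec(x), b))
print(f"converged in {iterations} iterations, max residual {residual:.2e}")
```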

LINPACK

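LINPACK, in its modern High-Performance LINPACK (HPL) form, measures the floating-point operations per second (FLOPS) achieved while solving a dense system of linear equations by LU factorization. It is the benchmark used to rank supercomputers on the TOP500 list, and it primarily stresses raw compute throughput.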
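The work LINPACK times is Gaussian elimination with partial pivoting (an LU factorization) followed by a triangular solve, and its FLOPS figure divides a fixed operation count by wall-clock time. The count traditionally used by the LINPACK benchmark for an n-by-n system is 2/3·n³ + 2·n². Below is a minimal pure-Python sketch of that procedure; it is far too slow to benchmark a GPU and only shows what is being counted. The names `lu_solve` and `linpack_gflops` are illustrative, and real HPL runs use blocked, distributed, heavily optimized kernels.

```python
import random
import time


def lu_solve(a, b):
    """Solve A x = b by Gaussian elimination with partial pivoting.

    Row operations are applied to b alongside A (equivalent to LU
    factorization plus forward substitution). Inputs are copied, not modified.
    """
    n = len(a)
    m = [row[:] for row in a]
    x = b[:]
    for k in range(n):
        # Partial pivoting: swap the row with the largest |entry| in column k into place.
        piv = max(range(k, n), key=lambda i: abs(m[i][k]))
        m[k], m[piv] = m[piv], m[k]
        x[k], x[piv] = x[piv], x[k]
        for i in range(k + 1, n):
            f = m[i][k] / m[k][k]
            for j in range(k, n):
                m[i][j] -= f * m[k][j]
            x[i] -= f * x[k]
    # Back substitution on the resulting upper-triangular system.
    for i in range(n - 1, -1, -1):
        s = x[i] - sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / m[i][i]
    return x


def linpack_gflops(n: int, seconds: float) -> float:
    """Classic LINPACK operation count divided by elapsed time, in GFLOPS."""
    flops = (2.0 / 3.0) * n**3 + 2.0 * n**2
    return flops / seconds / 1e9


n = 100
rng = random.Random(0)
a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
start = time.perf_counter()
x = lu_solve(a, b)
elapsed = time.perf_counter() - start
print(f"n={n}: {linpack_gflops(n, elapsed):.4f} GFLOPS (pure Python, CPU)")
```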

AI Inference

AI inference benchmarks measure the throughput and latency of running trained neural networks, an important real-world workload.
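Throughput and latency are measured differently. Latency is the time for one request or batch to complete, usually reported as percentiles. Throughput is the number of samples completed per second. The sketch below times an arbitrary Python callable, assuming it runs one batch and blocks until the result is ready. On a real GPU that assumption matters: kernel launches are asynchronous, so the callable must synchronize with the device before returning, or the timer captures only launch overhead. `measure_inference` and the stand-in workload are hypothetical, not part of any benchmark suite.

```python
import math
import time


def percentile(sorted_vals, q):
    """Nearest-rank percentile (q in 0..100) of an already sorted list."""
    rank = max(1, math.ceil(q / 100.0 * len(sorted_vals)))
    return sorted_vals[rank - 1]


def measure_inference(run_batch, batch_size, warmup=3, iters=50):
    """Time run_batch() and report latency percentiles and throughput.

    run_batch must execute one batch of batch_size samples and return only
    once the results are ready.
    """
    for _ in range(warmup):
        run_batch()  # exclude one-time costs such as compilation or cache warm-up
    latencies_ms = []
    for _ in range(iters):
        start = time.perf_counter()
        run_batch()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    latencies_ms.sort()
    total_s = sum(latencies_ms) / 1000.0
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p99_ms": percentile(latencies_ms, 99),
        # Batches run back to back, so this is single-stream throughput.
        "throughput_samples_per_s": batch_size * iters / total_s,
    }


def fake_batch():
    """Stand-in CPU workload in place of a real model's forward pass."""
    return sum(i * i for i in range(20_000))


stats = measure_inference(fake_batch, batch_size=8)
print(stats)
```

Because batches run one after another here, throughput and latency are tied together; serving systems that overlap requests or use larger batches can raise throughput at the cost of higher latency.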

These benchmarks exercise different aspects of GPU hardware including compute throughput, memory access, and AI workload performance.
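One common way to see why these benchmarks stress different hardware is the roofline model. A kernel's attainable throughput is the lower of the GPU's peak FLOPS and its memory bandwidth times the kernel's arithmetic intensity (FLOPs performed per byte moved). Dense LU factorization in LINPACK has high arithmetic intensity and sits under the compute roof, while the sparse matrix-vector products in HPCG have low intensity and sit under the bandwidth slope. The sketch below assumes a hypothetical GPU with 50 TFLOPS peak and 2 TB/s of memory bandwidth; neither figure describes a real product.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float, intensity: float) -> float:
    """Roofline bound: min(compute roof, bandwidth x arithmetic intensity).

    intensity is FLOPs per byte of memory traffic; TB/s x FLOP/byte = TFLOPS.
    """
    return min(peak_tflops, bandwidth_tb_s * intensity)


def ridge_point(peak_tflops: float, bandwidth_tb_s: float) -> float:
    """Arithmetic intensity (FLOP/byte) above which a kernel becomes compute-bound."""
    return peak_tflops / bandwidth_tb_s


PEAK_TFLOPS = 50.0  # hypothetical
BANDWIDTH_TB_S = 2.0  # hypothetical

# Double-precision CSR sparse matrix-vector product: about 2 FLOPs per nonzero
# against 12 bytes (8-byte value + 4-byte column index), ignoring vector traffic.
spmv_intensity = 2.0 / 12.0
# Large dense matrix operations reuse each loaded value many times;
# 100 FLOP/byte is an illustrative value, not a measurement.
dense_intensity = 100.0

print(f"ridge point: {ridge_point(PEAK_TFLOPS, BANDWIDTH_TB_S):.1f} FLOP/byte")
print(f"sparse (HPCG-like): {attainable_tflops(PEAK_TFLOPS, BANDWIDTH_TB_S, spmv_intensity):.2f} TFLOPS")
print(f"dense (LINPACK-like): {attainable_tflops(PEAK_TFLOPS, BANDWIDTH_TB_S, dense_intensity):.2f} TFLOPS")
```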

Benchmark Results Comparison

Here I will directly compare benchmark results from Nvidia’s H100 and AMD’s Instinct MI200 GPUs.

HPCG Performance

| GPU | HPCG (GFLOP/s) |
|---|---|
| Nvidia H100 | TBD |
| AMD MI200 | TBD |

Summary of HPCG results…

LINPACK Performance

| GPU | LINPACK (TFLOPS) |
|---|---|
| Nvidia H100 | TBD |
| AMD MI200 | TBD |

Summary of LINPACK results…

AI Inference Performance

| GPU | Inference throughput (TFLOPS) | Latency (ms) |
|---|---|---|
| Nvidia H100 | TBD | TBD |
| AMD MI200 | TBD | TBD |

Summary of AI inference results…

Key Takeaways

- Brief takeaway from HPCG results

- Brief takeaway from LINPACK results

- Brief takeaway from AI inference results

- Overall analysis of Nvidia vs. AMD compute performance

Conclusion

This benchmark comparison of Nvidia and AMD data center GPUs shows strengths and weaknesses for both. The key factors to consider are FLOPS throughput, memory bandwidth and capacity, and AI inference speed. There is no clear winner, so application-specific demands and pricing should drive purchase decisions. Benchmarks should also be rerun as each new GPU generation is released.
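Weighing application-specific demands against price can be made explicit with a simple weighted score per dollar. In the sketch below, each benchmark result is normalized to the best candidate so that results in different units can be combined, then weighted by how much the target application depends on it. The GPU names, scores, prices, and weights are all hypothetical placeholders, and latency-style metrics must first be inverted so that higher is always better.

```python
def rank_by_value(candidates, weights):
    """Rank GPUs by weighted, normalized benchmark score per dollar.

    candidates: {name: {"price_usd": float, "scores": {benchmark: float}}}
                Every score must be higher-is-better (invert latencies first).
    weights:    {benchmark: relative importance for the target application}
    """
    # Best result per benchmark puts mixed units on a common 0..1 scale.
    best = {bm: max(c["scores"][bm] for c in candidates.values()) for bm in weights}
    value = {}
    for name, c in candidates.items():
        score = sum(w * c["scores"][bm] / best[bm] for bm, w in weights.items())
        value[name] = score / c["price_usd"]
    # Highest score per dollar first.
    return sorted(value.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical candidates and a memory-bound workload that weights HPCG heavily.
candidates = {
    "gpu_a": {"price_usd": 20000.0, "scores": {"hpcg": 1.0, "linpack": 2.0}},
    "gpu_b": {"price_usd": 10000.0, "scores": {"hpcg": 2.0, "linpack": 1.0}},
}
weights = {"hpcg": 0.8, "linpack": 0.2}
for name, v in rank_by_value(candidates, weights):
    print(f"{name}: {v:.2e} weighted score per dollar")
```

Changing the weights to favor LINPACK models a compute-bound application and can reverse the ranking, which is the point: the same benchmark data supports different purchase decisions for different workloads.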