Why Do Computers Slow Down Over Time?

As a proud owner of an “old” PC, I’ve experienced the frustration of watching my once lightning-fast machine turn into a slug. It’s a tale as old as time – or at least as old as personal computers. But why does this happen? And more importantly, what can we do about it?

Well, my fellow tech enthusiasts, let’s dive into the reasons behind this age-old (pun intended) conundrum. According to the fine folks over at Reddit’s ELI5 subreddit, there are a few key culprits:

- Software Updates and Bloat: As time goes on, software developers release new versions of their programs with more features and functionality. While this is great for keeping up with the latest and greatest, those new versions usually need more memory, processing power, and storage, so your older hardware has to work harder to keep up. Think of it like trying to fit a grown-up human into a child’s clothes – it’s just not going to work as well.

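- Fragmentation and Clutter: Over time, your hard drive becomes littered with all sorts of files, both useful and not-so-useful. On a traditional spinning hard drive, files also get split into pieces scattered across the disk, so the drive takes longer to gather the information it needs, like a messy room where you can’t find your favorite pair of socks. Solid-state drives are far less affected by fragmentation, but a nearly full drive of either kind can still drag things down.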

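- Malware and Viruses: Let’s face it, the internet can be a bit of a wild west when it comes to security. Older systems often stop receiving security patches, and years of downloads and installs mean more chances to pick up something nasty. Malicious software running in the background eats up processor time, memory, and bandwidth, which can slow your machine to a crawl and potentially cause even more damage.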

-

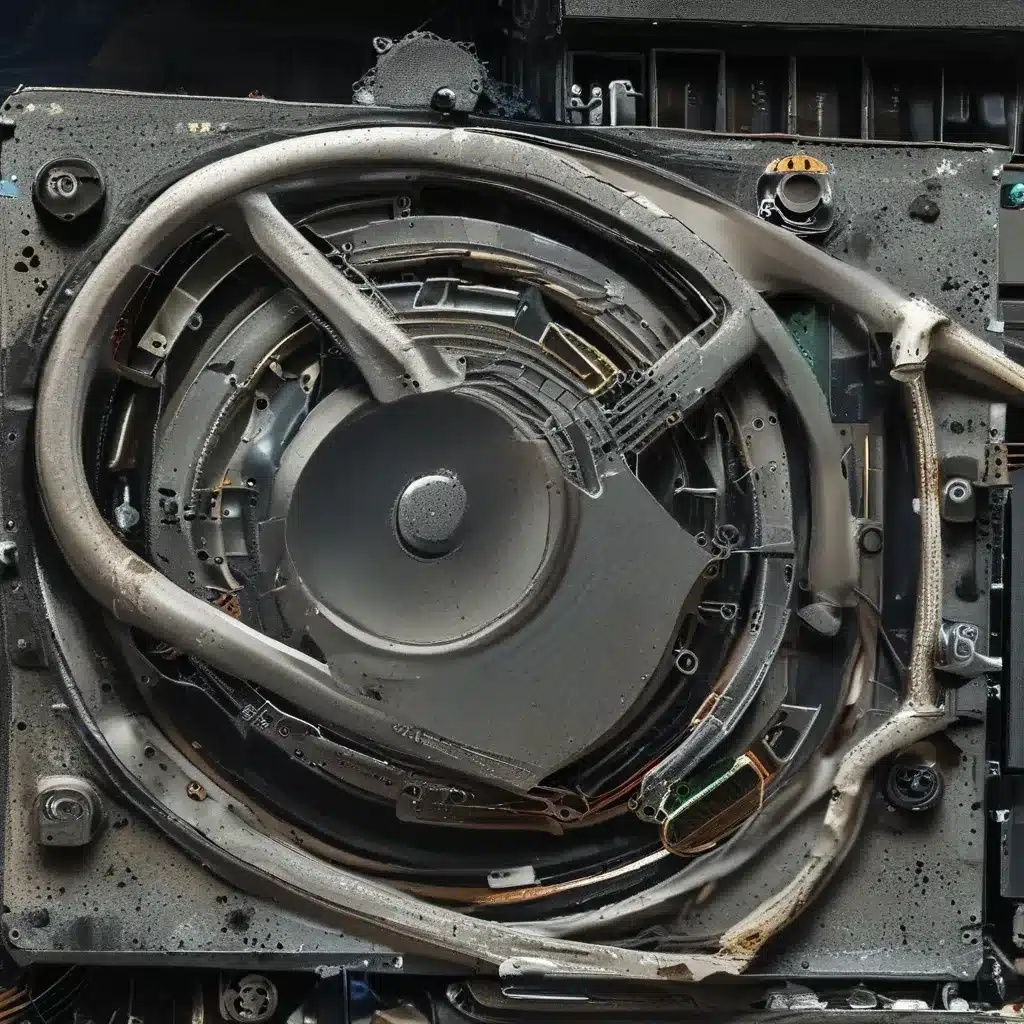

Hardware Wear and Tear: Just like your trusty old car, your computer’s components can start to wear down over time. The hard drive, memory, and even the processor can all degrade, making your machine work harder and harder to keep up.

So, now that we know the culprits, what can we do to keep our beloved PCs from becoming relics of the past? Let’s explore some solutions!

Keeping Your PC in Tip-Top Shape

Maintain a Clean and Tidy System

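One of the easiest ways to keep your PC running smoothly is to regularly clean up your hard drive. Delete any files or programs you no longer need. If you have a traditional spinning hard drive, periodically run a disk defragmentation tool to keep things organized. If you have a solid-state drive (SSD), skip the defrag: it doesn’t help and only adds unnecessary wear, and recent versions of Windows take care of drive optimization on a schedule anyway. Think of it like doing a deep clean on your house – it may take some time, but the payoff is worth it.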
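If you’re not sure where all your disk space went, a small script can point you at the biggest offenders. Here’s a minimal Python sketch of that idea, not a polished cleanup tool. The function names `largest_files` and `human_size` are made up for this example, and the script only lists files. It never deletes anything, so the decision about what goes stays with you.

```python
import heapq
import os


def largest_files(root, count=10):
    """Return up to `count` (size_in_bytes, path) pairs, largest first."""
    sizes = []
    for folder, _subfolders, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(folder, name)
            try:
                # Skip symlinks so a link to a big file is not counted twice.
                if os.path.islink(path):
                    continue
                sizes.append((os.path.getsize(path), path))
            except OSError:
                # Files can vanish or be unreadable mid-scan; just skip them.
                continue
    return heapq.nlargest(count, sizes)


def human_size(num_bytes):
    """Format a byte count as a short string such as '1.5 KB'."""
    size = float(num_bytes)
    for unit in ("B", "KB", "MB", "GB"):
        if size < 1024:
            return f"{size:.1f} {unit}"
        size /= 1024
    return f"{size:.1f} TB"
```

To try it, call something like `largest_files(os.path.expanduser("~"), count=10)` and print each result with `human_size`. Scanning a whole home folder can take a while on an older drive, so starting with a single folder such as Downloads is a reasonable first step.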

Stay on Top of Software Updates

While it’s true that software updates can sometimes cause more problems than they solve, keeping your operating system and essential programs up-to-date is crucial for maintaining performance and security. Just be sure to install updates only from trusted sources, such as your operating system’s built-in updater or the software maker’s official website. Don’t panic if an update doesn’t go as smoothly as you’d like – there’s usually a solution.

Protect Against Malware

Keeping your PC safe from viruses and other malicious software is a never-ending battle, but it’s one that’s well worth fighting. Install a reliable antivirus program and make sure to run regular scans. And, of course, be cautious when browsing the web or opening email attachments – that’s where a lot of the nasty stuff likes to hide.

Upgrade Your Hardware (Carefully)

If your computer is truly showing its age, it might be time to consider upgrading some of its components. A simple RAM or solid-state drive (SSD) upgrade can work wonders, but be sure to do your research and only purchase compatible parts: check what type of memory and how much of it your motherboard supports, and what kind of drive connection it offers. On a very old machine, piling on upgrades can cost more than the improvement is worth, so proceed with caution.

The Importance of Preventive Maintenance

Now, I know what you’re thinking: “But I don’t have the time or the technical know-how to do all of this!” Well, fear not, my friend. There are plenty of resources out there to help you keep your PC in tip-top shape, even if you’re not a full-fledged tech wizard.

One great option is to connect with a reliable computer repair service like ITFix. These folks have the expertise and tools to help you identify and address any issues with your machine, and they can even provide regular maintenance to keep it running smoothly.

And let’s be honest, a little bit of preventive care can go a long way. Think about it – would you let your car go years without an oil change or tire rotation? Of course not! The same goes for your trusty PC. A little bit of attention now can save you a lot of headaches (and potentially a lot of money) down the road.

So, there you have it, folks. The secrets to avoiding slowdowns on your old PC. With a little bit of elbow grease, a dash of tech-savviness, and maybe a helping hand from the pros, you can keep your machine running like new for years to come. Happy computing!