The Robot Surgeon Dilemma

Cast your mind back to December 2018. Hundreds of top computer scientists, financial engineers, and executives crammed themselves into a room at the annual Neural Information Processing Systems (NeurIPS) conference in Montreal. They were there to hear the results of the Explainable Machine Learning Challenge – a prestigious competition that reflected a growing need to make sense of the outcomes calculated by the black box models dominating machine learning-based decision making.

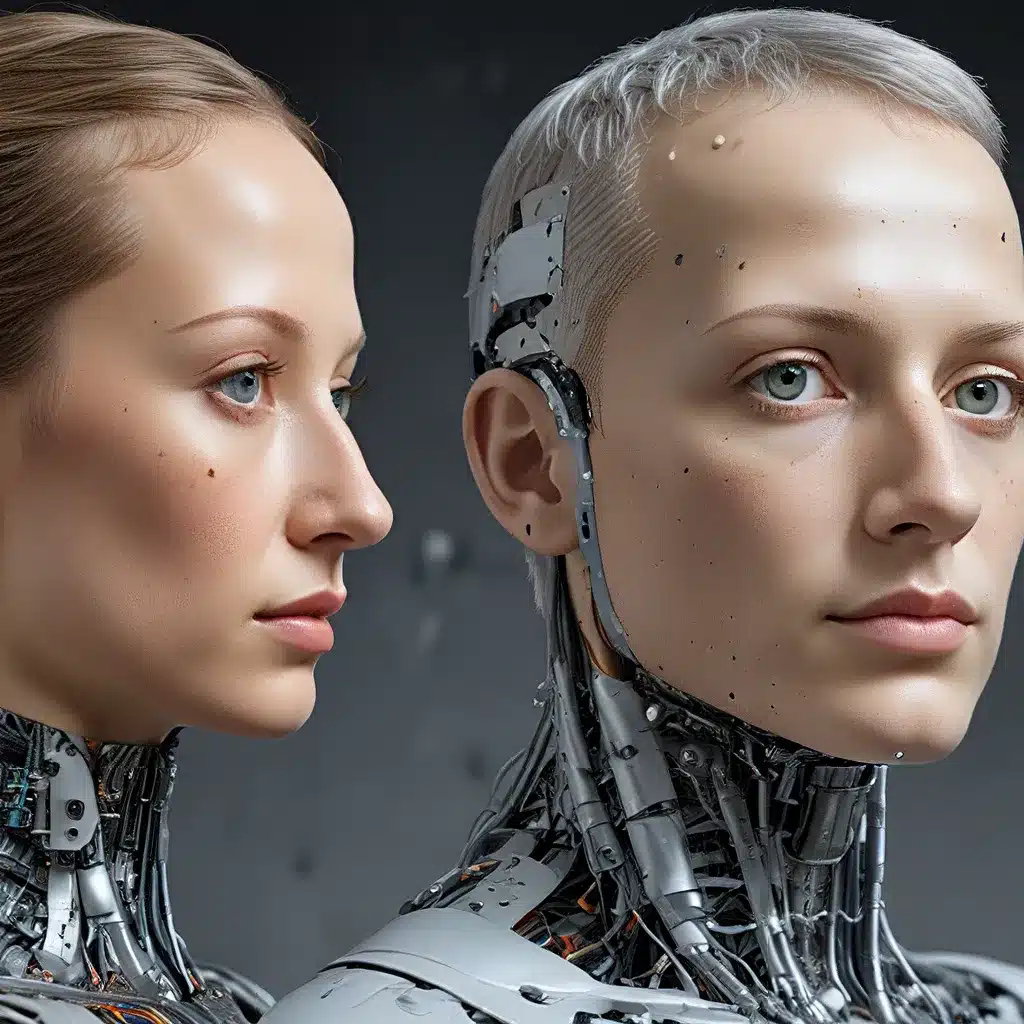

The audience was presented with a thought experiment. Imagine you had cancer and needed surgery to remove a tumor. Two images were displayed on the screen. One showed a human surgeon who could explain anything about the surgery but had a 15% chance of causing death during the operation. The other showed a robotic arm that could perform the surgery with only a 2% chance of failure.

The catch? The robot was a black box – total trust was required, with no ability to ask questions or understand how it came to its decisions. The audience was then asked to vote on which they would prefer to perform the life-saving surgery. Unsurprisingly, all but one hand went up for the robot.

The takeaway was clear: when faced with a high-stakes decision, we’re often willing to trust a mysterious, opaque algorithm over a transparent, explicable human – as long as the numbers look better on paper. But does accuracy really have to come at the cost of interpretability? This false dichotomy is at the heart of a much bigger problem plaguing the world of artificial intelligence.

The Rise of the Black Box

Over the past few years, the rapid advances in deep learning for computer vision have led to a widespread belief that the most accurate models for any given data science problem must be inherently uninterpretable and complicated. This belief stems from the historical use of machine learning in low-stakes decisions like online advertising and web search, where individual choices don’t deeply affect human lives.

In these domains, black box models, which an algorithm builds directly from data in a way that leaves humans unable to see how variables are combined to make predictions, have proven enormously successful. But as machine learning has expanded into high-stakes realms like healthcare, criminal justice, and finance, this ‘black box’ approach has become increasingly problematic.

Upol Ehsan, a researcher at the Georgia Institute of Technology, has firsthand experience with this issue. He once took a test ride in an Uber self-driving car, where anxious passengers were encouraged to watch a ‘pacifier screen’ showing the car’s view of the road, with hazards picked out in orange and red. “Don’t get freaked out – this is why the car is doing what it’s doing,” the screen seemed to say. But for Ehsan, the alien-looking street scene only highlighted the strangeness of the experience, rather than reassuring him.

What if the self-driving car could really explain itself? This question gets to the heart of the interpretability and explainability challenge facing artificial intelligence.

The Interpretability vs. Explainability Debate

In the world of AI and machine learning, interpretability and explainability are often used interchangeably, but there are some key differences between the two concepts.

Interpretability refers to the ability to understand how a model works – the specific logic and reasoning it uses to arrive at a decision. An interpretable model is one where you can trace the step-by-step process that led to the output.
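To make "tracing the step-by-step process" concrete, here is a minimal sketch of an interpretable model: a point-based scorecard that records every rule that fired. The feature names, point values, and threshold are all hypothetical, invented purely for illustration, and do not come from any real credit model.

```python
# Hypothetical scoring rules: (description, test, points). All values are
# made up for illustration and do not come from any real credit model.
RULES = [
    ("late payments in last year > 2", lambda a: a["late_payments"] > 2, 3),
    ("credit utilization > 0.8", lambda a: a["utilization"] > 0.8, 2),
    ("account age < 2 years", lambda a: a["account_age_years"] < 2, 1),
]
THRESHOLD = 3  # total points at or above this are flagged high risk


def predict_with_trace(applicant):
    """Return (label, trace), where trace lists every rule that fired."""
    total = 0
    trace = []
    for description, test, points in RULES:
        if test(applicant):
            total += points
            trace.append(f"{description}: +{points}")
    label = "high risk" if total >= THRESHOLD else "low risk"
    trace.append(f"total = {total}, threshold = {THRESHOLD} -> {label}")
    return label, trace


applicant = {"late_payments": 3, "utilization": 0.5, "account_age_years": 1}
label, trace = predict_with_trace(applicant)
for step in trace:
    print(step)
```

Every line of the trace corresponds to one rule a person can read and dispute, which is the property the definition above describes.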

Explainability, on the other hand, is about providing a high-level understanding of a model’s behavior. An explainable model can give you a general sense of how it operates and what factors it considers, even if you can’t fully dissect the inner workings.
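One common way to get that kind of high-level picture is to probe a model from the outside. The sketch below assumes a stand-in function, `opaque_model`, that we treat as a black box, and scores each input by how much the output changes when that input alone is reset to a baseline value. The function, the feature names, and the baseline are hypothetical, and this simple ablation approach is only one of many possible explanation techniques.

```python
import math


def opaque_model(x):
    """Stand-in for a black box: we only call it, never inspect it."""
    z = 1.5 * x["income"] - 2.0 * x["debt"] + 0.5 * x["income"] * x["debt"]
    return 1.0 / (1.0 + math.exp(-z))


def ablation_explanation(model, x, baseline):
    """Score each feature by how much the output changes when that
    feature alone is reset to its baseline value."""
    original = model(x)
    effects = {}
    for name in x:
        perturbed = dict(x)
        perturbed[name] = baseline[name]
        effects[name] = original - model(perturbed)
    return effects


x = {"income": 1.0, "debt": 2.0}          # hypothetical input
baseline = {"income": 0.0, "debt": 0.0}   # hypothetical reference point
effects = ablation_explanation(opaque_model, x, baseline)
for name, effect in sorted(effects.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {effect:+.3f}")
```

The output says which factors pushed the prediction up or down for this one input, but nothing about how the model combines them internally. That gap is the difference between explaining a model and interpreting it.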

Both interpretability and explainability are important for ensuring the reliability, transparency, and accountability of AI systems, especially in high-stakes domains. Understanding how a model works can help identify biases, errors, and areas for improvement, while also instilling greater user confidence and trust.

However, as the Uber self-driving car example illustrates, providing explanations doesn’t always lead to greater understanding. The success of deep learning has been largely driven by the ability to tinker and adapt neural networks to achieve better practical results, often at the expense of theoretical comprehension. This has left us with a proliferation of black box models that are incredibly powerful but utterly opaque.

Challenging the Interpretability-Accuracy Trade-Off

One of the key beliefs underpinning the rise of black box models is the notion of an interpretability-accuracy trade-off – the idea that in order to achieve the highest possible predictive performance, you have to sacrifice interpretability and accept a degree of model opacity.

But research has shown that this trade-off is often illusory. In many domains, such as criminal justice risk prediction and healthcare, interpretable models can match the accuracy of their black box counterparts.

The story of the 2018 Explainable Machine Learning Challenge provides a fascinating case study. The organizers had provided a dataset of home equity line of credit (HELOC) applications, and the goal was to create a black box model for predicting loan default, then explain how it worked.

However, when the Duke University team (of which I was a part) received the data, they realized that no matter whether they used a deep neural network or classical statistical techniques, there was less than a 1% difference in accuracy between the methods. Even the most interpretable models could match the performance of the best black box.

Rather than submitting a black box model just for the sake of explaining it, the Duke team decided to create a fully interpretable model that they believed even a banking customer with little mathematical background could understand. The model was decomposable into different mini-models, each of which could be understood on its own, and they also created an interactive online visualization tool to help people explore the credit factors.
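As a rough illustration of what "decomposable into mini-models" can mean, the sketch below combines three small sub-models, each scoring one group of credit factors, into a single risk estimate. It is not the Duke team's actual model: the factor groups, coefficients, weights, and bias are all hypothetical values chosen only to show the structure.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Each mini-model scores one hypothetical group of credit factors
# and returns a subscore between 0 and 1.
def delinquency_subscore(a):
    return sigmoid(0.8 * a["late_payments"] - 1.0)


def utilization_subscore(a):
    return sigmoid(3.0 * a["utilization"] - 1.5)


def history_subscore(a):
    return sigmoid(1.0 - 0.3 * a["account_age_years"])


# name -> (mini-model, weight in the final score); weights are made up.
MINI_MODELS = {
    "delinquency": (delinquency_subscore, 1.2),
    "utilization": (utilization_subscore, 0.9),
    "history": (history_subscore, 0.6),
}
BIAS = -1.3  # hypothetical offset


def predict_risk(applicant):
    """Return (overall risk, per-group subscores) for one applicant."""
    subscores = {name: fn(applicant) for name, (fn, _) in MINI_MODELS.items()}
    z = BIAS + sum(MINI_MODELS[name][1] * s for name, s in subscores.items())
    return sigmoid(z), subscores


applicant = {"late_payments": 1, "utilization": 0.4, "account_age_years": 6}
risk, subscores = predict_risk(applicant)
print(f"overall risk: {risk:.2f}")
for name, score in subscores.items():
    print(f"  {name}: {score:.2f}")
```

Because the final score is just a weighted sum of the subscores, a reader can inspect each group on its own and see how much it contributes to the overall result.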

While this approach didn’t win the competition (the judges weren’t permitted to interact with the visualization), the Duke team’s entry did earn the FICO Recognition Award, as FICO’s own evaluation found it to be a strong performer. More importantly, the team had made a bold statement: in many cases, there’s no need to resort to black box models when interpretable alternatives can achieve the same level of accuracy.

Rethinking the Role of Explanations

The interpretability-accuracy trade-off is a powerful narrative that has influenced everything from the development of machine learning models to the public’s perception of AI. But it’s a narrative that’s deeply flawed – and one that has enabled the proliferation of opaque, high-stakes decision-making systems with profound real-world consequences.

As Upol Ehsan and his colleague Mark Riedl have discovered, the role of explanations in AI systems is more nuanced than simply ‘making the model understandable.’ Sometimes, the goal shouldn’t be to have the user agree with the AI’s decision, but rather to provoke reflection and critical thinking.

Ehsan and Riedl have developed a prototype system that enables a neural network playing the classic video game Frogger to provide natural language rationales for its actions, such as “I’m moving left to stay behind the blue truck.” The aim isn’t necessarily to have the user fully comprehend the model’s inner workings, but rather to foster a deeper understanding of how the AI is perceiving and reasoning about the game environment.

Explanations, whether they’re simple or complex, can serve as a window into the model’s decision-making process – even if the user doesn’t fully understand the underlying mechanics. This can be invaluable for building trust, identifying potential biases or errors, and ensuring the appropriate use of AI systems in high-stakes domains.

The Future of Interpretable AI

As AI continues to expand into ever more consequential realms, the need for interpretable and explainable models has never been greater. From healthcare diagnostics to criminal sentencing, the decisions made by these systems can have profound impacts on people’s lives. Ensuring transparency and accountability is crucial, for both ethical and legal reasons.

But as the Uber ‘pacifier screen’ shows, simply providing explanations isn’t enough: we need to design interpretable AI systems with the end user in mind, tailoring the level of detail and the mode of presentation to their specific needs and capabilities.

IT service providers like ITFix have a crucial role to play in this regard, working closely with their clients to understand their unique requirements and developing AI-powered solutions that are not only accurate, but also intuitive, transparent, and trustworthy.

The future of AI is not a binary choice between black box models and overly simplistic ‘glass box’ alternatives. It’s about striking the right balance between predictive power and interpretability, so that AI systems are truly responsive to the needs of the humans they serve. As the Explainable Machine Learning Challenge showed, an interpretable model built with care can match a black box on accuracy while remaining understandable to the people it affects.

So the next time you’re faced with a high-stakes decision involving an AI system, don’t just accept the numbers at face value. Dig deeper, ask questions, and demand explanations that you can truly understand. Only then can you be confident that the AI is working for you, not against you.