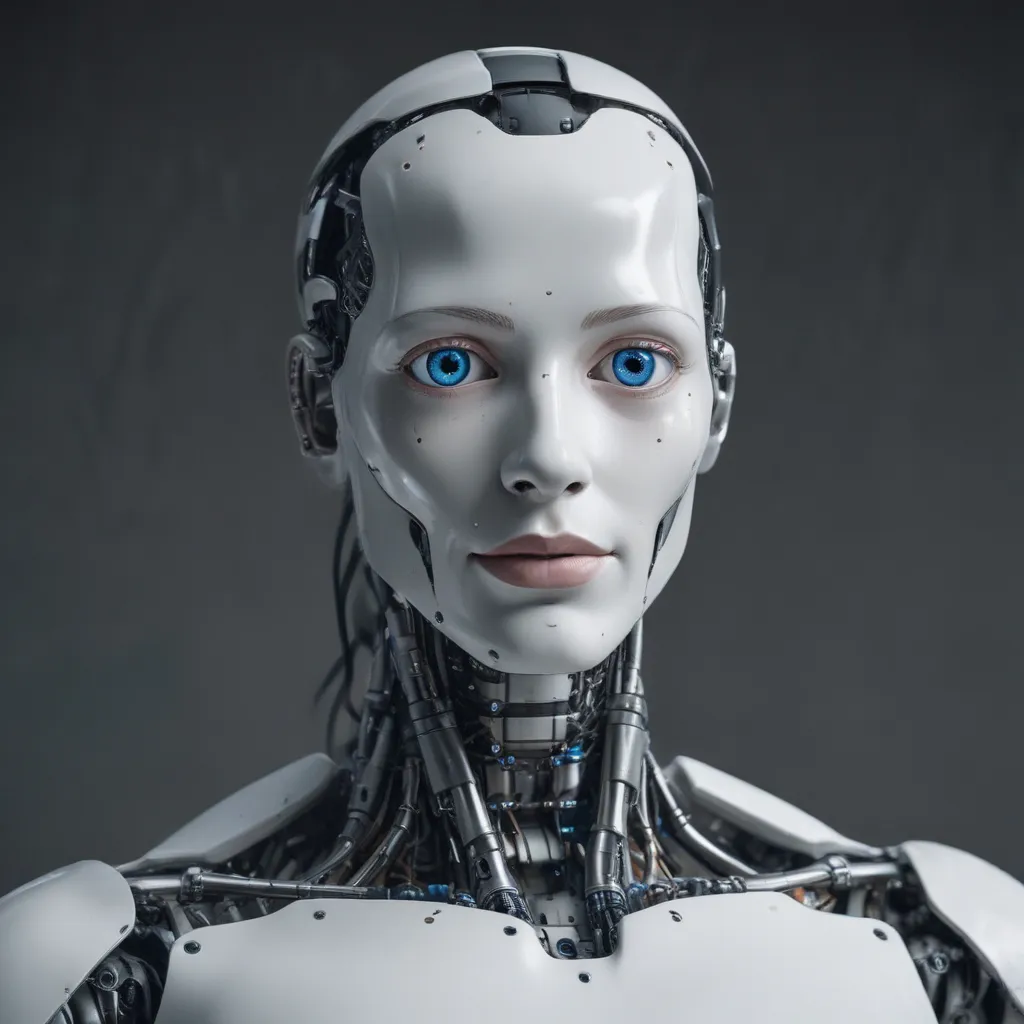

The Rise of Intelligent Machines

I have witnessed the rapid advancements in artificial intelligence (AI) over the past decade, and I must say, the implications for our society are profound. As these intelligent machines become more sophisticated, capable of making complex decisions, and integrated into our daily lives, the question of how we instill ethical principles within them becomes increasingly crucial. The notion of “teaching morality to machines” is a captivating and perplexing challenge that deserves our utmost attention.

Machines, once considered mere tools to serve human needs, now exhibit capabilities that go well beyond their original design. They can process vast amounts of data, identify patterns, and, in some narrowly defined tasks, make judgments that rival or even surpass those of human experts. This growing autonomy raises pressing questions about the ethical framework that should guide their decisions. After all, the choices these systems make can have far-reaching consequences for individuals, communities, and the fabric of our society.

Defining AI Ethics

At the heart of this dilemma lies the concept of AI ethics, which seeks to ensure that the development and deployment of AI systems are aligned with moral and ethical principles. This multifaceted field encompasses a wide range of considerations, from privacy and data protection to fairness, transparency, and accountability. Philosophers, computer scientists, and policymakers have been grappling with the complexities of this challenge, as they strive to establish a coherent and robust ethical framework for AI.

One of the fundamental questions that AI ethics seeks to address is: How do we instill moral values and ethical decision-making in machines? This is no easy task, as the very nature of human morality is often nuanced, contextual, and subject to ongoing debate. Translating these abstract concepts into tangible algorithms and design principles for AI systems is a formidable undertaking.

Approaches to Teaching Morality to Machines

Researchers and ethicists have explored various approaches to imbuing AI systems with ethical principles. One prominent strategy involves the incorporation of moral frameworks, such as utilitarianism or deontology, into the algorithms that govern the decision-making processes of these machines. By aligning the objectives and constraints of AI systems with established ethical theories, we aim to ensure that their actions and outputs adhere to moral guidelines.
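To make the idea concrete, here is a minimal Python sketch of one way the two frameworks could be combined. Deontological rules act as hard constraints that rule out certain actions entirely, and among the remaining actions the system picks the one with the greatest total welfare, in the utilitarian spirit. Everything here is hypothetical and assumed for illustration: the `Action` type, the welfare numbers, and the forbidden tags. Real systems rarely have welfare estimates this clean.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Action:
    name: str
    # Hypothetical estimated change in welfare for each affected party.
    welfare: dict[str, float]
    # Hypothetical tags describing rule-relevant properties of the action.
    tags: set[str] = field(default_factory=set)


# Deontological side: tags that forbid an action outright,
# however much total welfare it would produce.
FORBIDDEN_TAGS = {"deceives_user", "violates_consent"}


def permitted(action: Action) -> bool:
    return not (action.tags & FORBIDDEN_TAGS)


def total_welfare(action: Action) -> float:
    # Utilitarian side: sum the welfare changes across all affected parties.
    return sum(action.welfare.values())


def choose(actions: list[Action]) -> Optional[Action]:
    allowed = [a for a in actions if permitted(a)]
    if not allowed:
        return None  # nothing is permitted: leave the decision to a human
    return max(allowed, key=total_welfare)


options = [
    Action("nudge", {"user": 5.0, "platform": 10.0}, {"deceives_user"}),
    Action("inform", {"user": 4.0, "platform": 2.0}),
    Action("do_nothing", {"user": 0.0, "platform": 0.0}),
]
print(choose(options).name)  # → inform
```

The ordering is the design choice that matters here: because the rules filter first, no amount of welfare can buy a forbidden action, which is the intuition behind treating duties as side constraints. Returning `None` when nothing is permitted hands the decision back to a person rather than forcing a choice.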

Another approach centers on “value alignment,” which seeks to align the goals and preferences of AI systems with those of the humans they serve. This involves giving machines a working model of human values, social norms, and the broader context in which they operate. The hope is that, with a shared sense of purpose built into their objectives, AI systems could navigate complex moral dilemmas with something closer to human nuance and sensitivity.
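One concrete technique used for value alignment is learning a reward function from human preference comparisons, as in reinforcement learning from human feedback. The Python sketch below fits a linear reward under a Bradley-Terry model, in which the probability that outcome a is preferred over outcome b is modeled as sigmoid(r(a) - r(b)). The outcome features and the three comparisons are made up for illustration; this is a toy version of the idea, not a description of how any production system is trained.

```python
import math

# Hypothetical outcome features: (helpfulness, honesty, harm).
# Each pair records that a person preferred the first outcome to the second.
preferences = [
    ((0.9, 0.9, 0.0), (0.9, 0.2, 0.0)),  # the more honest outcome was preferred
    ((0.5, 0.8, 0.0), (0.9, 0.8, 0.7)),  # less help but no harm was preferred
    ((0.8, 0.7, 0.1), (0.3, 0.7, 0.1)),  # more help was preferred, all else equal
]


def reward(w, x):
    # Linear reward: weighted sum of the outcome's features.
    return sum(wi * xi for wi, xi in zip(w, x))


def fit_reward(prefs, lr=0.5, steps=2000, reg=0.01):
    """Fit weights w by gradient ascent on the Bradley-Terry log-likelihood,
    where P(a preferred over b) = sigmoid(reward(w, a) - reward(w, b)),
    with a small L2 penalty so the weights stay bounded."""
    w = [0.0] * len(prefs[0][0])
    for _ in range(steps):
        grad = [-reg * wi for wi in w]  # gradient of the L2 penalty
        for a, b in prefs:
            p = 1.0 / (1.0 + math.exp(-(reward(w, a) - reward(w, b))))
            # Gradient of log sigmoid(r(a) - r(b)) is (1 - p) * (a - b).
            for i in range(len(w)):
                grad[i] += (1.0 - p) * (a[i] - b[i])
        w = [wi + lr * gi for wi, gi in zip(w, grad)]
    return w


weights = fit_reward(preferences)
print([round(v, 2) for v in weights])
```

On this toy data the fitted weights come out positive for honesty and negative for harm, and the learned reward ranks every preferred outcome above its rejected alternative. Whether a handful of comparisons from one person can capture "human values" is exactly the open question this section raises.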

Challenges and Limitations

However, the task of teaching morality to machines is fraught with challenges and limitations. Ethical decision-making often relies on subtle contextual cues, emotional intelligence, and the ability to reason about abstract moral principles, qualities that are notoriously difficult to replicate in artificial systems. Additionally, biases in training data and uncertainty in machine learning models can lead to unintended ethical consequences: a model trained on historically skewed decisions, for instance, can learn to reproduce that skew. This further complicates the process of instilling moral values in AI.
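Bias of this kind can at least be measured. One simple check, sketched below in Python, is the demographic parity gap: the difference between the highest and lowest rate of positive decisions across groups. The decisions and group labels are hypothetical. Demographic parity is only one of several fairness criteria, and it can conflict with others such as equal error rates, so a small gap on this metric alone does not show that a system is fair.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Fraction of positive decisions (1s) within each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical approval decisions from a model trained on historical data.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(decisions, groups))         # → {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(decisions, groups))  # → 0.5
```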

Another significant challenge lies in the potential for AI systems to exhibit emergent behaviors that were not anticipated or accounted for during their development. As these machines become more autonomous and self-learning, they may develop decision-making patterns that diverge from the ethical frameworks we’ve attempted to establish. Navigating these unanticipated scenarios requires a deep understanding of the complex relationship between AI, ethics, and the dynamic nature of human society.

Case Studies and Real-World Implications

To illustrate the practical implications of AI ethics, let’s consider two real-world case studies. The first is the use of AI-powered facial recognition systems in law enforcement and surveillance. While these technologies can assist in crime prevention and investigation, they also raise concerns about privacy, bias, and the potential for misuse. Ensuring that such systems are designed and deployed with robust ethical safeguards is crucial to mitigating these risks and upholding the principles of justice and individual rights.
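Concerns about bias in facial recognition are often expressed as error rates that differ between demographic groups. The Python sketch below uses invented similarity scores for pairs of images of *different* people and computes the false match rate for each group when a single global threshold decides whether two faces "match". The scores, group names, and the threshold of 0.6 are all assumptions for illustration.

```python
# Hypothetical impostor comparisons: (similarity score, demographic group).
# Both images in each pair show different people, so any score at or
# above the threshold counts as a false match.
impostor_scores = [
    (0.20, "group_1"), (0.35, "group_1"), (0.62, "group_1"), (0.40, "group_1"),
    (0.55, "group_2"), (0.71, "group_2"), (0.30, "group_2"), (0.66, "group_2"),
]


def false_match_rates(pairs, threshold):
    """Per-group fraction of impostor pairs scored at or above the threshold."""
    counts = {}
    for score, group in pairs:
        matched, total = counts.get(group, (0, 0))
        counts[group] = (matched + (score >= threshold), total + 1)
    return {g: m / t for g, (m, t) in counts.items()}


print(false_match_rates(impostor_scores, threshold=0.6))
# → {'group_1': 0.25, 'group_2': 0.5}
```

With these made-up numbers, the same threshold produces twice as many false matches for `group_2` as for `group_1`, the kind of disparity a pre-deployment audit is meant to surface.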

Another case study involves the use of AI in medical decision-making. As AI algorithms are increasingly integrated into healthcare systems, they are tasked with making diagnostic and treatment recommendations. Ensuring that these decisions are aligned with ethical principles, such as beneficence, non-maleficence, and respect for patient autonomy, is a significant challenge that requires collaboration between medical professionals, ethicists, and AI developers.
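One widely discussed safeguard is selective prediction, where the model abstains and defers to a clinician whenever its confidence falls below a threshold. The Python sketch below assumes a hypothetical `Recommendation` type whose confidence score is calibrated, and an illustrative threshold of 0.9. Choosing a real threshold is itself a clinical and ethical decision, not a purely technical one.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    diagnosis: str
    confidence: float  # assumed to be a calibrated probability in [0, 1]


def route(rec: Recommendation, threshold: float = 0.9) -> str:
    """Decide how a model recommendation reaches the clinician.

    At or above the threshold, the diagnosis is shown as a suggestion that
    the clinician still confirms; below it, the model abstains and the case
    is handled by the clinician alone.
    """
    if rec.confidence >= threshold:
        return "suggest"
    return "defer"


print(route(Recommendation("pneumonia", 0.95)))  # → suggest
print(route(Recommendation("pneumonia", 0.62)))  # → defer
```

In both branches a clinician makes the final call; the threshold only controls whether the model's suggestion is shown at all.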

The Role of Regulation and Governance

Addressing the challenges of AI ethics cannot be the sole responsibility of technologists and researchers. It requires a coordinated effort involving policymakers, regulatory bodies, and the broader public. The development of robust governance frameworks and legal regulations is essential to ensure that the deployment of AI systems is aligned with societal values and ethical principles.

Governments and international organizations have begun to take steps in this direction. A prominent example is the European Union’s AI Act, proposed in 2021 and adopted in 2024, which establishes a comprehensive, risk-based regulatory framework for the development and use of AI. These efforts, while still evolving, highlight the growing recognition that AI ethics requires a coordinated, collaborative approach.

The Importance of Transparency and Accountability

Equally crucial to the success of AI ethics are the principles of transparency and accountability. AI systems must be designed and deployed in a way that permits scrutiny and auditing and makes it possible to understand the decision-making processes behind their actions. This not only fosters public trust but also enables the identification and mitigation of potential ethical risks.
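As a small illustration of what auditability can mean in practice, the Python sketch below scores a case with a hypothetical linear model and appends a JSON record of the inputs, model version, per-feature contributions, and final score to an audit log. The model name, weights, and features are invented for the example. For a linear model, weight times value is an exact attribution; more complex models need approximate explanation methods, and a production log would also need tamper-resistant storage.

```python
import json
from datetime import datetime, timezone

# Hypothetical linear scoring model; the name and weights are illustrative only.
MODEL_VERSION = "credit-scorer-0.1"
WEIGHTS = {"income": 0.4, "debt_ratio": -0.8, "years_employed": 0.2}
BIAS = -0.1


def score_and_log(applicant: dict, log: list) -> float:
    """Score one case and append an auditable JSON record to `log`."""
    # For a linear model, each feature's contribution is weight * value,
    # which gives an exact explanation of how the score was reached.
    contributions = {f: WEIGHTS[f] * applicant[f] for f in WEIGHTS}
    score = BIAS + sum(contributions.values())
    log.append(json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": MODEL_VERSION,
        "inputs": applicant,
        "contributions": contributions,
        "score": score,
    }))
    return score


audit_log: list = []
score = score_and_log({"income": 1.0, "debt_ratio": 0.5, "years_employed": 2.0}, audit_log)
print(audit_log[0])
```

Because each record names the model version and breaks the score into its parts, an auditor can later ask not only what the system decided but why, and which version of the system decided it.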

Transparency and accountability also extend to the organizations and individuals responsible for the development and deployment of AI systems. These stakeholders must be held accountable for the ethical consequences of their decisions and actions, and must be willing to engage in open dialogue with the public, policymakers, and other relevant parties.

The Evolving Landscape of AI Ethics

As the field of AI continues to evolve, so does the landscape of AI ethics. Emerging technologies, such as generative AI, quantum computing, and brain-computer interfaces, present new ethical quandaries that will require innovative approaches and ongoing collaboration across disciplines.

Moreover, the global nature of AI development and deployment necessitates a collaborative and international effort to establish consistent ethical standards and best practices. Fostering cross-cultural dialogue and sharing of knowledge and experiences will be crucial in ensuring that the ethical principles guiding AI systems are truly universal and responsive to the diverse needs and values of our global community.

The Human Factor in AI Ethics

Ultimately, the success of AI ethics lies in our ability to forge a strong and symbiotic relationship between humans and machines. While we strive to imbue AI systems with ethical principles, we must also recognize the inherent limitations of these artificial constructs and the irreplaceable role of human judgment, empathy, and moral reasoning.

The development of AI ethics must be a collective endeavor, involving not only technical experts but also ethicists, policymakers, and the broader public. By fostering a culture of collaboration, transparency, and shared responsibility, we can navigate the complex landscape of AI ethics and ensure that the integration of intelligent machines into our society is guided by the highest moral and ethical standards.

Conclusion: Embracing the Challenge of AI Ethics

As we stand at the crossroads of technological advancement and ethical considerations, the challenge of teaching morality to machines presents an opportunity for us to redefine the relationship between humanity and artificial intelligence. By confronting this challenge head-on, we can shape the future of AI in a way that aligns with our deepest-held values and aspirations, ultimately creating a world where intelligent machines and human beings coexist in harmony, guided by a shared sense of ethical responsibility.

The road ahead may be filled with uncertainties and complexities, but by embracing the principles of AI ethics, we can ensure that the transformative power of AI is harnessed for the betterment of humanity. Let us continue to explore, debate, and innovate, always keeping the ethical implications of our actions at the forefront of our minds. Together, we can navigate this uncharted territory and forge a future where the moral and the digital coexist in perfect balance.