The Enigma of Artificial Intelligence

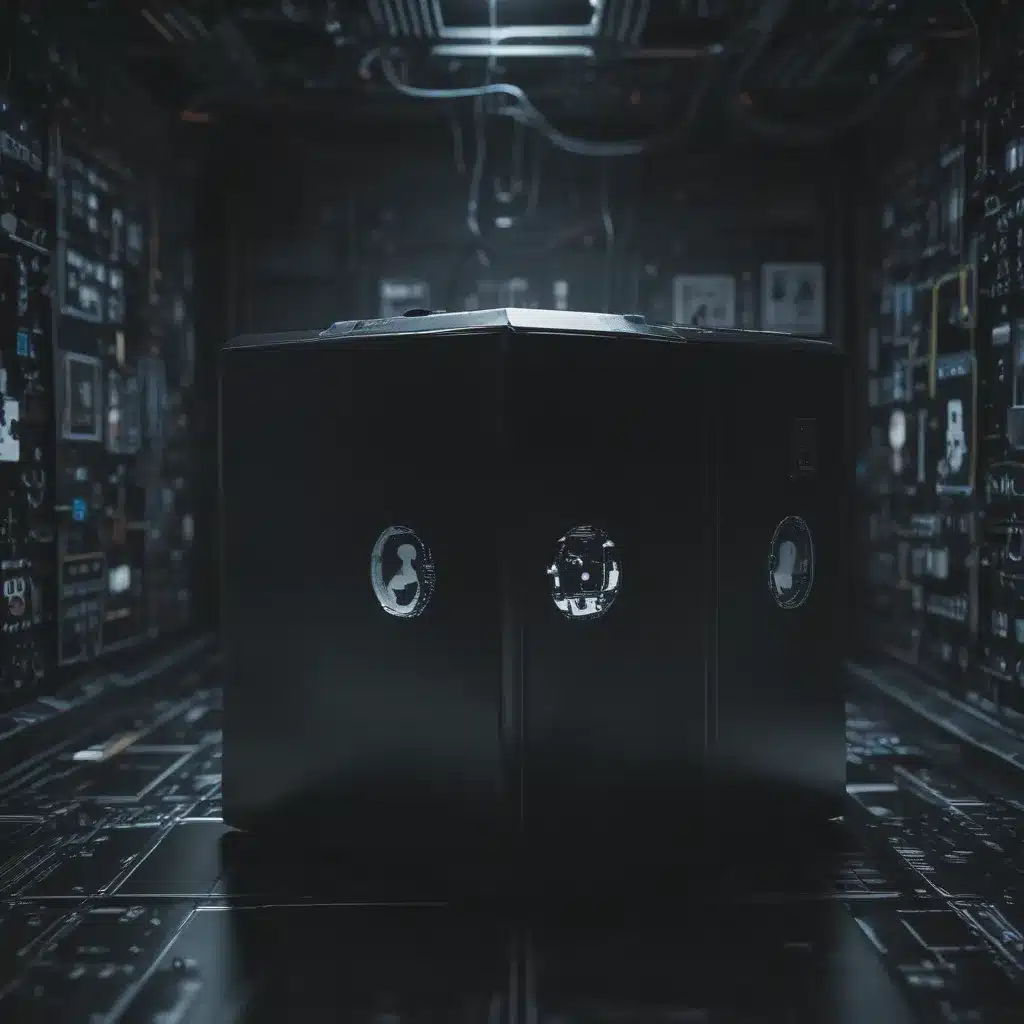

I have been fascinated by the rapid advancements in artificial intelligence (AI) technology, and the accompanying rise of the “black box” phenomenon. As an AI enthusiast, I’ve always been intrigued by the inner workings of these intelligent systems – how they process information, make decisions, and generate outputs. However, the opaque nature of many AI models has often left me, and many others, wondering: what is really going on inside the machine?

This question of the “black box” in AI is not merely an academic curiosity – it has real-world implications that we must grapple with. As AI becomes increasingly integrated into our daily lives, from personalized recommendations to high-stakes decision-making, understanding the logic and reasoning behind these systems is crucial. After all, how can we trust, regulate, and ethically deploy AI if we don’t fully comprehend its mechanisms?

In this in-depth exploration, I aim to shed light on the enigma of the AI black box. I will delve into the technical aspects of how these intelligent systems operate, the challenges of transparency and interpretability, and the broader societal implications. By the end, I hope to provide readers with a comprehensive understanding of this critical issue in the field of artificial intelligence.

Unveiling the AI Black Box: A Technical Perspective

At the heart of the AI black box conundrum lies the complexity of modern machine learning (ML) algorithms. Unlike traditional, rule-based programming, where the logic and decision-making process is clearly defined, many contemporary AI systems employ sophisticated neural networks and deep learning architectures that can learn patterns from vast amounts of data.

This data-driven approach to AI development has undoubtedly led to remarkable breakthroughs in areas such as computer vision, natural language processing, and predictive analytics. However, the very nature of these neural networks, with their multilayered structure and millions (in the largest models, billions) of interconnected parameters, can make them incredibly difficult to interpret and explain.

To illustrate this, let’s consider a commonly used deep learning model, the convolutional neural network (CNN). CNNs are particularly adept at image recognition tasks, but understanding how they arrive at their classifications can be a daunting challenge. A CNN’s hidden layers transform raw pixel data into increasingly abstract representations, typically moving from edges and textures in early layers to object parts in later ones, and these intermediate steps remain largely opaque to human observers.
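To make the idea of a hidden-layer transformation more concrete, here is a minimal sketch of a single convolution followed by a ReLU, written in plain Python. The toy image and the vertical-edge filter are my own illustrative assumptions; in a real CNN the filter values are learned from data, and there are many filters stacked over many layers.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out


def relu(feature_map):
    """Zero out negative activations."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 4x4 image (assumption): dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked vertical-edge filter (assumption); a trained CNN would learn this.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = relu(conv2d(image, kernel))
for row in feature_map:
    print(row)
```

Each row of the resulting feature map is `[0.0, 2.0, 0.0]`: the filter responds only where dark meets bright. One such map is easy to read. The opacity comes from stacking thousands of learned filters whose meaning nobody assigned in advance.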

Similarly, in the realm of natural language processing, the transformer architecture behind groundbreaking language models like GPT-3 is notoriously hard to interpret. Its self-attention mechanism lets every token weigh every other token in the input, and these weightings are spread across many layers and attention heads, so no single component offers a straightforward explanation of an output. Even experts in the field struggle to fully comprehend the intricate workings of these AI systems.
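For readers who want to see what self-attention computes, the following is a rough sketch of scaled dot-product attention in plain Python. It makes one big simplifying assumption: the token embeddings are used directly as queries, keys, and values, whereas real transformers first pass them through learned projection matrices and run many attention heads in parallel. The three tokens and their two-dimensional embeddings are invented for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Return (outputs, weights) for scaled dot-product attention."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of the value vectors.
        outputs.append(
            [sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))]
        )
    return outputs, weights


# Hypothetical tokens and embeddings, chosen only for illustration.
tokens = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

outputs, weights = self_attention(embeddings, embeddings, embeddings)
for token, w in zip(tokens, weights):
    print(token, [round(x, 3) for x in w])
```

Each row of `weights` sums to 1 and says how much one token attends to the others. The arithmetic itself is simple. The difficulty lies in interpreting what billions of such learned weightings mean together.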

This lack of transparency is not merely a technical nuisance – it can have serious implications for the deployment of AI in high-stakes domains. How can we trust an AI system to make critical decisions in healthcare, finance, or criminal justice if we cannot understand its reasoning? The black box problem poses a significant challenge to the ethical and accountable use of artificial intelligence.

Peering into the Black Box: Techniques for Achieving Interpretability

Recognizing the importance of addressing the AI black box, researchers and practitioners have developed various techniques to enhance the interpretability and explainability of these intelligent systems. These approaches aim to shed light on the internal decision-making processes, making AI more transparent and trustworthy.

One promising approach is the use of explainable AI (XAI) methods, which seek to show how an AI model arrived at a particular output. They range from simple feature importance analyses, which identify the most influential input variables, to more advanced methods like layer visualization and attention maps, which probe the neural network’s inner workings.
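As one concrete example of a feature importance analysis, here is a minimal sketch of permutation importance: shuffle one input column, and see how much the model’s error grows. The `model` function is a hypothetical stand-in for a black box (I deliberately made it ignore its second feature), and the data is synthetic.

```python
import random


def model(row):
    # Hypothetical black box: depends on feature 0 and ignores feature 1.
    return 3.0 * row[0] + 0.0 * row[1]


def mean_squared_error(rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, feature, seed=0):
    """Increase in error after shuffling one feature column."""
    rng = random.Random(seed)
    column = [r[feature] for r in rows]
    rng.shuffle(column)
    shuffled = [r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)]
    return mean_squared_error(shuffled, targets) - mean_squared_error(rows, targets)


# Synthetic data (assumption): targets follow the same rule as the model.
rows = [[float(i), float((i * 7) % 5)] for i in range(10)]
targets = [3.0 * r[0] for r in rows]

importances = [permutation_importance(rows, targets, f) for f in range(2)]
print(importances)
```

Shuffling feature 0 raises the error, while shuffling feature 1 changes nothing, which matches how the toy model was built. The appeal of this method is that it only needs the model’s predictions, not access to its internals.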

For instance, in the case of image recognition, XAI methods can generate heatmaps that highlight the specific regions of an image that the AI system found most salient in its classification decision. Similarly, in natural language processing, attention visualization can reveal which parts of the input text the model focused on when generating its output.
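One simple way to produce such a heatmap is occlusion sensitivity: blank out one region at a time and record how much the model’s score drops. The sketch below assumes a hypothetical `score` function that stands in for a classifier and only looks at the center of a 4x4 image. Gradient-based methods are more common for real networks, but occlusion is the easiest to show without a deep learning library.

```python
def score(image):
    # Hypothetical black-box score: responds only to the central 2x2 patch.
    return sum(image[i][j] for i in (1, 2) for j in (1, 2)) / 4.0


def occlusion_heatmap(image, baseline=0.0):
    """Score drop when each pixel, in turn, is replaced by a baseline value."""
    base = score(image)
    heat = []
    for i in range(len(image)):
        row = []
        for j in range(len(image[0])):
            occluded = [r[:] for r in image]  # copy so the input is untouched
            occluded[i][j] = baseline
            row.append(base - score(occluded))
        heat.append(row)
    return heat


# Toy input (assumption): a uniformly bright 4x4 image.
image = [[1.0] * 4 for _ in range(4)]

heatmap = occlusion_heatmap(image)
for row in heatmap:
    print(row)
```

The heatmap is 0.25 for the four central pixels and 0.0 everywhere else, revealing which region the toy scorer actually relies on.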

Another approach to improving AI interpretability is the development of inherently interpretable models, such as decision trees and rule-based systems. These models, while potentially less powerful than their black box counterparts, offer a more transparent and explainable decision-making process, making it easier for humans to understand and validate their outputs.
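To show what “inherently interpretable” looks like in practice, here is a small hand-written decision tree that returns both its decision and the exact path of rules it followed. The loan scenario, field names, and thresholds are all hypothetical, chosen only to illustrate the idea.

```python
def decide_loan(applicant):
    """Tiny decision tree that reports the rules it applied (thresholds are made up)."""
    path = []
    if applicant["income"] >= 50000:
        path.append("income >= 50000")
        if applicant["debt_ratio"] < 0.4:
            path.append("debt_ratio < 0.4")
            return "approve", path
        path.append("debt_ratio >= 0.4")
        return "review", path
    path.append("income < 50000")
    return "decline", path


decision, path = decide_loan({"income": 60000, "debt_ratio": 0.2})
print(decision, "because", " and ".join(path))
```

Every output comes with a complete, human-readable justification, which is exactly what a deep network cannot offer out of the box. The cost is that a handful of threshold rules may not capture patterns a larger model would find.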

Furthermore, the field of causal reasoning in AI has gained traction, with researchers exploring ways to build AI systems that can not only make predictions but also provide insights into the underlying causal relationships in the data. By understanding the causal mechanisms behind an AI’s decisions, we can better assess its reliability and trustworthiness.

While these techniques can greatly enhance the interpretability of AI systems, they do not always provide a complete solution to the black box problem. An explanation is typically a simplified approximation of what the model does, so it can miss or misstate parts of the model’s behavior, and there are often trade-offs between model performance and interpretability.

Ethical Implications and the Societal Impact of the AI Black Box

The opaque nature of AI black boxes extends beyond the technical realm, posing significant ethical and societal challenges. As these intelligent systems become increasingly pervasive in our lives, the need to understand their decision-making processes becomes paramount.

One of the primary concerns surrounding the AI black box is the potential for bias and discrimination. If the inner workings of an AI system are not transparent, it becomes difficult to identify and mitigate biases that may be baked into the algorithm or the data it was trained on. This can lead to unfair and discriminatory outcomes, particularly in high-stakes domains like employment, lending, and criminal justice.
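Even when a model’s internals are inaccessible, its outputs can still be audited. As a minimal sketch, the code below compares the rate of favorable decisions across groups, a gap often called the demographic parity difference. The decisions and group labels are made-up toy data, and a gap like this is only a starting signal for investigation, not proof of discrimination on its own.

```python
def selection_rates(decisions, groups):
    """Fraction of favorable (1) decisions per group."""
    totals, positives = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + decision
    return {g: positives[g] / totals[g] for g in totals}


# Toy audit data (assumption): 1 = favorable outcome, 0 = unfavorable.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

Here group A receives a favorable outcome 75% of the time and group B only 25%, a gap of 0.5 that would warrant a closer look at the model and its training data.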

Moreover, the lack of interpretability in AI decision-making can undermine the principles of accountability and human oversight. Without the ability to comprehend how an AI system arrived at a particular conclusion, it becomes challenging to hold the system, or its developers, responsible for its actions. This can have profound implications for liability, legal recourse, and the overall trust in these intelligent technologies.

The AI black box also raises concerns about informed consent and individual autonomy. When AI systems are used to make decisions that significantly impact people’s lives, such as in healthcare or financial services, the lack of transparency can deprive individuals of the ability to understand and challenge the decisions that affect them.

Beyond the immediate ethical implications, the AI black box also has broader societal consequences. The opaque nature of these intelligent systems can undermine public trust in AI, hindering its widespread adoption and acceptance. This, in turn, can delay the benefits that AI can bring, such as improved healthcare outcomes, more efficient resource allocation, and faster scientific discovery.

To address these ethical and societal challenges, there is a growing emphasis on the importance of AI governance, regulatory frameworks, and ethical guidelines that prioritize transparency, accountability, and human oversight in the development and deployment of AI technologies.

Embracing Transparency: The Path Forward for AI

As we navigate the complexities of the AI black box, it’s clear that the path forward requires a multifaceted approach, combining technical advancements, ethical considerations, and collaborative efforts.

On the technical front, the continued development of interpretable and explainable AI models, as well as the refinement of causal reasoning techniques, will be crucial in shedding light on the inner workings of these intelligent systems. By empowering users and stakeholders to understand the decision-making processes, we can foster greater trust and informed decision-making.

Alongside the technical progress, the ethical and societal implications of the AI black box must remain at the forefront of our conversations. Robust governance frameworks, clear regulatory guidelines, and collaborative efforts between policymakers, industry leaders, and the public can help ensure that the deployment of AI aligns with fundamental human values and principles.

Ultimately, the goal is to create a future where AI is not a mysterious “black box,” but a transparent and trustworthy technology that enhances and empowers human decision-making. By embracing this transparency, we can unlock the full potential of artificial intelligence while upholding the ethical and societal responsibilities that come with it.

As we continue to navigate this challenging landscape, I remain hopeful that the collective efforts of researchers, practitioners, and policymakers will lead to a world where the “ghost in the machine” is no longer a source of mystery, but a well-understood and trusted partner in our collective pursuit of progress.